Image Captioning

Author: A_K_Nain

Date created: 2021/05/29

Last modified: 2021/10/31

Description: Implement an image captioning model using a CNN and a Transformer.

Setup

import os

os.environ["KERAS_BACKEND"] = "tensorflow"

import re
import numpy as np
import matplotlib.pyplot as plt

import tensorflow as tf
import keras
from keras import layers
from keras.applications import efficientnet
from keras.layers import TextVectorization

keras.utils.set_random_seed(111)

Download the dataset

We will be using the Flickr8K dataset for this tutorial. This dataset comprises over 8,000 images, each of which is paired with five different captions.

!wget -q https://github.com/jbrownlee/Datasets/releases/download/Flickr8k/Flickr8k_Dataset.zip
!wget -q https://github.com/jbrownlee/Datasets/releases/download/Flickr8k/Flickr8k_text.zip
!unzip -qq Flickr8k_Dataset.zip
!unzip -qq Flickr8k_text.zip
!rm Flickr8k_Dataset.zip Flickr8k_text.zip

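# Path to the images
IMAGES_PATH = "Flicker8k_Dataset"

# Desired image dimensions
IMAGE_SIZE = (299, 299)

# Vocabulary size
VOCAB_SIZE = 10000

# Fixed length allowed for any sequence
SEQ_LENGTH = 25

# Dimension for the image embeddings and token embeddings
EMBED_DIM = 512

# Per-layer units in the feed-forward network
FF_DIM = 512

# Other training parameters
BATCH_SIZE = 64
EPOCHS = 30
AUTOTUNE = tf.data.AUTOTUNE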

Preparing the dataset

def load_captions_data(filename):
    """Loads captions (text) data and maps them to corresponding images.

    Args:
        filename: Path to the text file containing caption data.

    Returns:
        caption_mapping: Dictionary mapping image names to the corresponding captions
        text_data: List containing all the available captions
    """

    with open(filename) as caption_file:
        caption_data = caption_file.readlines()
        caption_mapping = {}
        text_data = []
        images_to_skip = set()

        for line in caption_data:
            line = line.rstrip("\n")
            # Image name and captions are separated using a tab
            img_name, caption = line.split("\t")

            # Each image is repeated five times for the five different captions.
            # Each image name has a suffix `#(caption_number)`
            img_name = img_name.split("#")[0]
            img_name = os.path.join(IMAGES_PATH, img_name.strip())

            # We will remove captions that are either too short or too long
            tokens = caption.strip().split()

            if len(tokens) < 5 or len(tokens) > SEQ_LENGTH:
                images_to_skip.add(img_name)
                continue

            if img_name.endswith("jpg") and img_name not in images_to_skip:
                # We will add a start and an end token to each caption
                caption = "<start> " + caption.strip() + " <end>"
                text_data.append(caption)

                if img_name in caption_mapping:
                    caption_mapping[img_name].append(caption)
                else:
                    caption_mapping[img_name] = [caption]

        # Drop every image that had at least one caption of unsuitable length
        for img_name in images_to_skip:
            if img_name in caption_mapping:
                del caption_mapping[img_name]

        return caption_mapping, text_data
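To make the parsing rules concrete, here is a small pure-Python sketch that applies the same steps to a few in-memory lines instead of `Flickr8k.token.txt`. The sample lines and the helper name `parse_caption_lines` are hypothetical, made up for illustration; the length limits mirror `SEQ_LENGTH = 25` and the five-token minimum above. Note how a single caption that is too short removes the whole image.

```python
import os

SEQ_LENGTH = 25
IMAGES_PATH = "Flicker8k_Dataset"


def parse_caption_lines(lines):
    """Hypothetical helper mirroring load_captions_data on in-memory lines."""
    caption_mapping, images_to_skip = {}, set()
    for line in lines:
        img_name, caption = line.rstrip("\n").split("\t")
        # Drop the "#<caption_number>" suffix and prefix the image folder
        img_name = os.path.join(IMAGES_PATH, img_name.split("#")[0].strip())
        tokens = caption.strip().split()
        if len(tokens) < 5 or len(tokens) > SEQ_LENGTH:
            # One caption of unsuitable length disqualifies the whole image
            images_to_skip.add(img_name)
            continue
        if img_name.endswith("jpg") and img_name not in images_to_skip:
            caption = "<start> " + caption.strip() + " <end>"
            caption_mapping.setdefault(img_name, []).append(caption)
    for img_name in images_to_skip:
        caption_mapping.pop(img_name, None)
    return caption_mapping


# Hypothetical sample lines in the "<image>#<n>\t<caption>" layout
sample = [
    "a.jpg#0\tA dog runs across the green grass .\n",
    "a.jpg#1\tA brown dog is playing outside .\n",
    "b.jpg#0\tA man rides a red bicycle downhill .\n",
    "b.jpg#1\tA cyclist .\n",  # too short, so b.jpg is dropped entirely
]
mapping = parse_caption_lines(sample)
print(mapping)
```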

def train_val_split(caption_data, train_size=0.8, shuffle=True):
    """Splits the captioning dataset into train and validation sets.

    Args:
        caption_data (dict): Dictionary containing the mapped caption data
        train_size (float): Fraction of the full dataset to use as training data
        shuffle (bool): Whether to shuffle the dataset before splitting

    Returns:
        Training and validation datasets as two separate dicts
    """

    # 1. Get the list of all image names
    all_images = list(caption_data.keys())

    # 2. Shuffle if necessary
    if shuffle:
        np.random.shuffle(all_images)

    # 3. Split into training and validation sets
    train_size = int(len(caption_data) * train_size)

    training_data = {
        img_name: caption_data[img_name] for img_name in all_images[:train_size]
    }
    validation_data = {
        img_name: caption_data[img_name] for img_name in all_images[train_size:]
    }

    # 4. Return the splits
    return training_data, validation_data
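As a sanity check on the sample counts reported after loading the dataset, the split keeps `int(N * 0.8)` images for training. Assuming the filtered mapping holds 7,643 images (the sum of the two reported counts, 6,114 + 1,529), the arithmetic works out as follows. This is a NumPy-only copy of the splitting logic; the image names and captions are placeholders.

```python
import numpy as np


def split_names(caption_data, train_size=0.8, shuffle=True):
    # Same logic as train_val_split: shuffle image names, cut at int(N * train_size)
    all_images = list(caption_data.keys())
    if shuffle:
        np.random.shuffle(all_images)
    cut = int(len(caption_data) * train_size)
    train = {name: caption_data[name] for name in all_images[:cut]}
    valid = {name: caption_data[name] for name in all_images[cut:]}
    return train, valid


# Placeholder mapping with 7,643 images (6,114 + 1,529 from the reported counts)
fake_mapping = {f"img_{k}.jpg": ["<start> a caption <end>"] * 5 for k in range(7643)}
train, valid = split_names(fake_mapping)
print(len(train), len(valid))  # 6114 1529
```

Because the split is done on image names rather than on individual captions, all five captions of an image always land in the same split.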

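# Load the dataset
captions_mapping, text_data = load_captions_data("Flickr8k.token.txt")

# Split the dataset into training and validation sets
train_data, valid_data = train_val_split(captions_mapping)
print("Number of training samples: ", len(train_data))
print("Number of validation samples: ", len(valid_data))

Number of training samples:  6114
Number of validation samples:  1529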

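Vectorizing the text data

We'll use the TextVectorization layer to vectorize the text data, that is to say, to turn the original strings into integer sequences where each integer represents the index of a word in a vocabulary. We will use a custom string standardization scheme (lowercase the text and strip all punctuation characters except < and >, so that the <start> and <end> tokens survive) and the default splitting scheme (split on whitespace).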

# Punctuation to strip; "<" and ">" are kept so that <start> and <end> survive.
# The backslash is written as "\\" to avoid an invalid escape sequence warning.
strip_chars = "!\"#$%&'()*+,-./:;<=>?@[\\]^_`{|}~"
strip_chars = strip_chars.replace("<", "")
strip_chars = strip_chars.replace(">", "")


def custom_standardization(input_string):
    lowercase = tf.strings.lower(input_string)
    return tf.strings.regex_replace(lowercase, "[%s]" % re.escape(strip_chars), "")


vectorization = TextVectorization(
    max_tokens=VOCAB_SIZE,
    output_mode="int",
    output_sequence_length=SEQ_LENGTH,
    standardize=custom_standardization,
)
vectorization.adapt(text_data)
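The custom standardization matters because the default one strips all punctuation, which would turn `<start>` into `start`. The minimal emulation below uses Python's `re` module instead of `tf.strings` and a tiny hypothetical vocabulary instead of the one `adapt()` learns, so it is an illustration rather than the layer itself. It follows TextVectorization's convention of reserving index 0 for padding and index 1 for out-of-vocabulary tokens, and pads or truncates to `SEQ_LENGTH`.

```python
import re

SEQ_LENGTH = 25
# Same punctuation set as above, minus "<" and ">"
strip_chars = "!\"#$%&'()*+,-./:;<=>?@[\\]^_`{|}~".replace("<", "").replace(">", "")


def standardize(text):
    # Lowercase, then delete every character listed in strip_chars
    return re.sub("[%s]" % re.escape(strip_chars), "", text.lower())


def vectorize(text, vocab):
    # Split on whitespace, map to indices (1 = OOV), pad/truncate to SEQ_LENGTH
    ids = [vocab.get(tok, 1) for tok in standardize(text).split()]
    ids = ids[:SEQ_LENGTH]
    return ids + [0] * (SEQ_LENGTH - len(ids))


# Hypothetical vocabulary: index 0 is padding, index 1 is out-of-vocabulary
vocab = {"<start>": 2, "<end>": 3, "a": 4, "dog": 5, "runs": 6}
caption = "<start> A dog runs, fast! <end>"
print(standardize(caption))  # <start> a dog runs fast <end>
print(vectorize(caption, vocab)[:8])  # [2, 4, 5, 6, 1, 3, 0, 0]
```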

# Data augmentation for image data
image_augmentation = keras.Sequential(
    [
        layers.RandomFlip("horizontal"),
        layers.RandomRotation(0.2),
        layers.RandomContrast(0.3),
    ]
)

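Building a tf.data.Dataset pipeline for training

We will generate pairs of images and corresponding captions using a tf.data.Dataset object. The pipeline consists of two steps:

- Read the image from the disk
- Tokenize all five captions corresponding to the image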

def decode_and_resize(img_path):
    img = tf.io.read_file(img_path)
    img = tf.image.decode_jpeg(img, channels=3)
    # resize() returns float32 values in [0, 255]; convert_image_dtype leaves
    # float32 input unchanged, and EfficientNet expects [0, 255] pixel values.
    img = tf.image.resize(img, IMAGE_SIZE)
    img = tf.image.convert_image_dtype(img, tf.float32)
    return img


def process_input(img_path, captions):
    return decode_and_resize(img_path), vectorization(captions)


def make_dataset(images, captions):
    dataset = tf.data.Dataset.from_tensor_slices((images, captions))
    dataset = dataset.shuffle(BATCH_SIZE * 8)
    dataset = dataset.map(process_input, num_parallel_calls=AUTOTUNE)
    dataset = dataset.batch(BATCH_SIZE).prefetch(AUTOTUNE)

    return dataset


# Pass the list of images and the list of corresponding captions
train_dataset = make_dataset(list(train_data.keys()), list(train_data.values()))
valid_dataset = make_dataset(list(valid_data.keys()), list(valid_data.values()))
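Because `make_dataset` batches with `BATCH_SIZE = 64` and `batch()` keeps the final partial batch by default (`drop_remainder=False`), the number of batches per epoch is the ceiling of samples / 64. The quick check below uses the split sizes reported earlier and reproduces the `96/96` step counter in the training log.

```python
import math

BATCH_SIZE = 64
num_train, num_valid = 6114, 1529  # split sizes reported after loading the data

# batch() keeps the last partial batch, so round up
train_batches = math.ceil(num_train / BATCH_SIZE)
valid_batches = math.ceil(num_valid / BATCH_SIZE)
last_train_batch = num_train - (train_batches - 1) * BATCH_SIZE
print(train_batches, valid_batches, last_train_batch)  # 96 24 34
```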

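Building the model

Our image captioning architecture consists of three models:

- A CNN: used to extract the image features
- A TransformerEncoder: the extracted image features are then passed to a Transformer-based encoder that generates a new representation of the inputs
- A TransformerDecoder: this model takes the encoder output and the text data (sequences) as inputs and tries to learn to generate the caption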

def get_cnn_model():
    base_model = efficientnet.EfficientNetB0(
        input_shape=(*IMAGE_SIZE, 3),
        include_top=False,
        weights="imagenet",
    )
    # We freeze our feature extractor
    base_model.trainable = False
    base_model_out = base_model.output
    # Flatten the spatial feature grid into a sequence of feature vectors
    base_model_out = layers.Reshape((-1, base_model_out.shape[-1]))(base_model_out)
    cnn_model = keras.models.Model(base_model.input, base_model_out)
    return cnn_model
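To see what the `Reshape` produces, here is a shape calculation. It assumes, based on the Keras EfficientNetB0 architecture, five stride-2 downsampling stages whose padding maps a side length `n` to `ceil(n / 2)`, and a final feature map with 1280 channels. Under those assumptions a 299 x 299 image becomes a 10 x 10 grid, i.e. a sequence of 100 feature vectors.

```python
import math

IMAGE_SIZE = (299, 299)
NUM_STRIDE2_STAGES = 5  # assumption: EfficientNetB0 downsamples by 2 five times
FEATURE_CHANNELS = 1280  # assumption: channels of EfficientNetB0's last conv layer


def feature_grid(size, stages=NUM_STRIDE2_STAGES):
    # Each stride-2 stage maps n -> ceil(n / 2)
    for _ in range(stages):
        size = math.ceil(size / 2)
    return size


h, w = (feature_grid(s) for s in IMAGE_SIZE)
# Reshape((-1, channels)) flattens the h x w grid into a sequence of length h * w
sequence_shape = (h * w, FEATURE_CHANNELS)
print((h, w), sequence_shape)  # (10, 10) (100, 1280)
```

The encoder's `dense_1` layer then projects each of these 1280-dimensional vectors to `EMBED_DIM = 512`.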

class TransformerEncoderBlock(layers.Layer):
    def __init__(self, embed_dim, dense_dim, num_heads, **kwargs):
        super().__init__(**kwargs)
        self.embed_dim = embed_dim
        self.dense_dim = dense_dim
        self.num_heads = num_heads
        self.attention_1 = layers.MultiHeadAttention(
            num_heads=num_heads, key_dim=embed_dim, dropout=0.0
        )
        self.layernorm_1 = layers.LayerNormalization()
        self.layernorm_2 = layers.LayerNormalization()
        self.dense_1 = layers.Dense(embed_dim, activation="relu")

    def call(self, inputs, training, mask=None):
        inputs = self.layernorm_1(inputs)
        inputs = self.dense_1(inputs)

        attention_output_1 = self.attention_1(
            query=inputs,
            value=inputs,
            key=inputs,
            attention_mask=None,
            training=training,
        )
        out_1 = self.layernorm_2(inputs + attention_output_1)
        return out_1
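The encoder block normalizes and projects the image features, applies self-attention over the 100 feature vectors, and adds a residual connection followed by layer normalization. The NumPy sketch below shows that data flow with a single head, no bias terms, and without the learned query/key/value projections that `MultiHeadAttention` adds, so it illustrates the computation rather than replacing the layer; the random weights stand in for trained ones.

```python
import numpy as np


def layer_norm(x, eps=1e-3):
    # Normalize each feature vector to zero mean, unit variance (no learned scale/offset)
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(x):
    # Scaled dot-product attention with query = key = value = x
    scores = x @ x.transpose(0, 2, 1) / np.sqrt(x.shape[-1])
    return softmax(scores) @ x


def encoder_block(features, w_dense):
    h = layer_norm(features)  # layernorm_1
    h = np.maximum(h @ w_dense, 0.0)  # dense_1 with ReLU (bias omitted)
    return layer_norm(h + self_attention(h))  # residual + layernorm_2


rng = np.random.default_rng(0)
features = rng.normal(size=(2, 100, 1280))  # (batch, 10 * 10 grid, channels)
w_dense = rng.normal(size=(1280, 512)) * 0.02  # stand-in for learned weights
out = encoder_block(features, w_dense)
print(out.shape)  # (2, 100, 512)
```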

class PositionalEmbedding(layers.Layer):
    def __init__(self, sequence_length, vocab_size, embed_dim, **kwargs):
        super().__init__(**kwargs)
        self.token_embeddings = layers.Embedding(
            input_dim=vocab_size, output_dim=embed_dim
        )
        self.position_embeddings = layers.Embedding(
            input_dim=sequence_length, output_dim=embed_dim
        )
        self.sequence_length = sequence_length
        self.vocab_size = vocab_size
        self.embed_dim = embed_dim
        self.embed_scale = tf.math.sqrt(tf.cast(embed_dim, tf.float32))

    def call(self, inputs):
        length = tf.shape(inputs)[-1]
        positions = tf.range(start=0, limit=length, delta=1)
        embedded_tokens = self.token_embeddings(inputs)
        embedded_tokens = embedded_tokens * self.embed_scale
        embedded_positions = self.position_embeddings(positions)
        return embedded_tokens + embedded_positions

    def compute_mask(self, inputs, mask=None):
        return tf.math.not_equal(inputs, 0)
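`PositionalEmbedding` looks up a learned vector per token id, scales it by `sqrt(embed_dim)`, and adds a learned vector per position; `compute_mask` marks padding (id 0) so that downstream layers can ignore it. Here is a NumPy sketch with small hypothetical sizes, where random tables stand in for the learned `Embedding` weights.

```python
import numpy as np

vocab_size, seq_len, embed_dim = 50, 6, 8  # small hypothetical sizes
rng = np.random.default_rng(0)
token_table = rng.normal(size=(vocab_size, embed_dim))  # stand-in for token_embeddings
position_table = rng.normal(size=(seq_len, embed_dim))  # stand-in for position_embeddings


def positional_embedding(token_ids):
    # token_ids: (batch, length) integer array
    length = token_ids.shape[-1]
    tokens = token_table[token_ids] * np.sqrt(embed_dim)
    positions = position_table[np.arange(length)]  # broadcast over the batch
    return tokens + positions


def compute_mask(token_ids):
    # True for real tokens, False for padding (id 0)
    return token_ids != 0


ids = np.array([[2, 7, 9, 3, 0, 0]])  # four real tokens followed by padding
emb = positional_embedding(ids)
print(emb.shape, compute_mask(ids).tolist())  # (1, 6, 8) [[True, True, True, True, False, False]]
```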

class TransformerDecoderBlock(layers.Layer):
    def __init__(self, embed_dim, ff_dim, num_heads, **kwargs):
        super().__init__(**kwargs)
        self.embed_dim = embed_dim
        self.ff_dim = ff_dim
        self.num_heads = num_heads
        self.attention_1 = layers.MultiHeadAttention(
            num_heads=num_heads, key_dim=embed_dim, dropout=0.1
        )
        self.attention_2 = layers.MultiHeadAttention(
            num_heads=num_heads, key_dim=embed_dim, dropout=0.1
        )
        self.ffn_layer_1 = layers.Dense(ff_dim, activation="relu")
        self.ffn_layer_2 = layers.Dense(embed_dim)

        self.layernorm_1 = layers.LayerNormalization()
        self.layernorm_2 = layers.LayerNormalization()
        self.layernorm_3 = layers.LayerNormalization()

        self.embedding = PositionalEmbedding(
            embed_dim=EMBED_DIM,
            sequence_length=SEQ_LENGTH,
            vocab_size=VOCAB_SIZE,
        )
        self.out = layers.Dense(VOCAB_SIZE, activation="softmax")

        self.dropout_1 = layers.Dropout(0.3)
        self.dropout_2 = layers.Dropout(0.5)
        self.supports_masking = True

    def call(self, inputs, encoder_outputs, training, mask=None):
        inputs = self.embedding(inputs)
        causal_mask = self.get_causal_attention_mask(inputs)

        if mask is not None:
            # (batch, seq, 1): masks padded query positions in cross-attention
            padding_mask = tf.cast(mask[:, :, tf.newaxis], dtype=tf.int32)
            # (batch, 1, seq) padding mask combined with the (batch, seq, seq) causal mask
            combined_mask = tf.cast(mask[:, tf.newaxis, :], dtype=tf.int32)
            combined_mask = tf.minimum(combined_mask, causal_mask)
        else:
            # Without a padding mask, fall back to the causal mask alone
            padding_mask = None
            combined_mask = causal_mask

        attention_output_1 = self.attention_1(
            query=inputs,
            value=inputs,
            key=inputs,
            attention_mask=combined_mask,
            training=training,
        )
        out_1 = self.layernorm_1(inputs + attention_output_1)

        attention_output_2 = self.attention_2(
            query=out_1,
            value=encoder_outputs,
            key=encoder_outputs,
            attention_mask=padding_mask,
            training=training,
        )
        out_2 = self.layernorm_2(out_1 + attention_output_2)

        ffn_out = self.ffn_layer_1(out_2)
        ffn_out = self.dropout_1(ffn_out, training=training)
        ffn_out = self.ffn_layer_2(ffn_out)

        ffn_out = self.layernorm_3(ffn_out + out_2)
        ffn_out = self.dropout_2(ffn_out, training=training)
        preds = self.out(ffn_out)
        return preds

    def get_causal_attention_mask(self, inputs):
        input_shape = tf.shape(inputs)
        batch_size, sequence_length = input_shape[0], input_shape[1]
        i = tf.range(sequence_length)[:, tf.newaxis]
        j = tf.range(sequence_length)
        # 1 where position i may attend to position j (j <= i), else 0
        mask = tf.cast(i >= j, dtype="int32")
        mask = tf.reshape(mask, (1, input_shape[1], input_shape[1]))
        mult = tf.concat(
            [
                tf.expand_dims(batch_size, -1),
                tf.constant([1, 1], dtype=tf.int32),
            ],
            axis=0,
        )
        # Repeat the mask once per batch element: (batch, seq, seq)
        return tf.tile(mask, mult)
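The decoder's self-attention combines two masks: the causal mask (position i may only attend to positions j <= i) and the padding mask (padded positions are never attended to). On 0/1 integer masks, `tf.minimum` acts as a logical AND. The NumPy version below reproduces `get_causal_attention_mask` and the `combined_mask` computation for one hypothetical sequence of length 4 whose last position is padding.

```python
import numpy as np


def causal_mask(batch_size, seq_len):
    i = np.arange(seq_len)[:, None]
    j = np.arange(seq_len)
    mask = (i >= j).astype(np.int32)  # lower-triangular: attend to self and the past
    return np.tile(mask[None], (batch_size, 1, 1))  # (batch, seq, seq)


padding = np.array([[1, 1, 1, 0]], dtype=np.int32)  # 0 marks a padded position
causal = causal_mask(batch_size=1, seq_len=4)
# (batch, 1, seq) broadcast against (batch, seq, seq), like mask[:, tf.newaxis, :]
combined = np.minimum(padding[:, None, :], causal)
print(combined[0])
```

Row i lists which positions token i may attend to: the lower triangle from the causal mask, with the padded last column zeroed out by the padding mask.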

class ImageCaptioningModel(keras.Model):
    def __init__(
        self,
        cnn_model,
        encoder,
        decoder,
        num_captions_per_image=5,
        image_aug=None,
    ):
        super().__init__()
        self.cnn_model = cnn_model
        self.encoder = encoder
        self.decoder = decoder
        self.loss_tracker = keras.metrics.Mean(name="loss")
        self.acc_tracker = keras.metrics.Mean(name="accuracy")
        self.num_captions_per_image = num_captions_per_image
        self.image_aug = image_aug

    def calculate_loss(self, y_true, y_pred, mask):
        loss = self.loss(y_true, y_pred)
        mask = tf.cast(mask, dtype=loss.dtype)
        loss *= mask
        return tf.reduce_sum(loss) / tf.reduce_sum(mask)

    def calculate_accuracy(self, y_true, y_pred, mask):
        accuracy = tf.equal(y_true, tf.argmax(y_pred, axis=2))
        accuracy = tf.math.logical_and(mask, accuracy)
        accuracy = tf.cast(accuracy, dtype=tf.float32)
        mask = tf.cast(mask, dtype=tf.float32)
        return tf.reduce_sum(accuracy) / tf.reduce_sum(mask)

    def _compute_caption_loss_and_acc(self, img_embed, batch_seq, training=True):
        encoder_out = self.encoder(img_embed, training=training)
        batch_seq_inp = batch_seq[:, :-1]
        batch_seq_true = batch_seq[:, 1:]
        mask = tf.math.not_equal(batch_seq_true, 0)
        batch_seq_pred = self.decoder(
            batch_seq_inp, encoder_out, training=training, mask=mask
        )
        loss = self.calculate_loss(batch_seq_true, batch_seq_pred, mask)
        acc = self.calculate_accuracy(batch_seq_true, batch_seq_pred, mask)
        return loss, acc

    def train_step(self, batch_data):
        batch_img, batch_seq = batch_data
        batch_loss = 0
        batch_acc = 0

        if self.image_aug:
            batch_img = self.image_aug(batch_img)

        # 1. Get image embeddings
        img_embed = self.cnn_model(batch_img)

        # 2. Pass each of the five captions one by one to the decoder
        # along with the encoder outputs and compute the loss as well as accuracy
        # for each caption.
        for i in range(self.num_captions_per_image):
            with tf.GradientTape() as tape:
                loss, acc = self._compute_caption_loss_and_acc(
                    img_embed, batch_seq[:, i, :], training=True
                )

                # 3. Update loss and accuracy
                batch_loss += loss
                batch_acc += acc

            # 4. Get the list of all the trainable weights
            train_vars = (
                self.encoder.trainable_variables + self.decoder.trainable_variables
            )

            # 5. Get the gradients
            grads = tape.gradient(loss, train_vars)

            # 6. Update the trainable weights (one update per caption)
            self.optimizer.apply_gradients(zip(grads, train_vars))

        # 7. Update the trackers (the loss is summed over captions, the accuracy averaged)
        batch_acc /= float(self.num_captions_per_image)
        self.loss_tracker.update_state(batch_loss)
        self.acc_tracker.update_state(batch_acc)

        # 8. Return the loss and accuracy values
        return {
            "loss": self.loss_tracker.result(),
            "acc": self.acc_tracker.result(),
        }

    def test_step(self, batch_data):
        batch_img, batch_seq = batch_data
        batch_loss = 0
        batch_acc = 0

        # 1. Get image embeddings
        img_embed = self.cnn_model(batch_img)

        # 2. Pass each of the five captions one by one to the decoder
        # along with the encoder outputs and compute the loss as well as accuracy
        # for each caption.
        for i in range(self.num_captions_per_image):
            loss, acc = self._compute_caption_loss_and_acc(
                img_embed, batch_seq[:, i, :], training=False
            )

            # 3. Update batch loss and batch accuracy
            batch_loss += loss
            batch_acc += acc

        batch_acc /= float(self.num_captions_per_image)

        # 4. Update the trackers
        self.loss_tracker.update_state(batch_loss)
        self.acc_tracker.update_state(batch_acc)

        # 5. Return the loss and accuracy values
        return {
            "loss": self.loss_tracker.result(),
            "acc": self.acc_tracker.result(),
        }

    @property
    def metrics(self):
        # We need to list our metrics here so that Keras can reset them
        # automatically at the start of each epoch.
        return [self.loss_tracker, self.acc_tracker]
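Two details of `_compute_caption_loss_and_acc` are easy to miss: the decoder is trained with teacher forcing (its input is the caption without the last token, its target is the caption shifted left by one), and both loss and accuracy are averaged only over non-padding target positions. A NumPy sketch with a hypothetical token sequence and random stand-in probabilities:

```python
import numpy as np

seq = np.array([[2, 4, 5, 3, 0, 0]])  # hypothetical ids: "<start> a dog <end>" + padding
seq_inp, seq_true = seq[:, :-1], seq[:, 1:]  # teacher forcing shift
mask = seq_true != 0  # ignore padded targets

vocab_size = 6
rng = np.random.default_rng(0)
logits = rng.normal(size=(1, seq_inp.shape[1], vocab_size))
probs = np.exp(logits) / np.exp(logits).sum(-1, keepdims=True)  # softmax output

# Per-token sparse categorical cross-entropy, as with reduction=None
token_loss = -np.log(np.take_along_axis(probs, seq_true[..., None], axis=-1)[..., 0])
masked_loss = (token_loss * mask).sum() / mask.sum()

# Accuracy counts only positions where the mask is True
correct = (probs.argmax(-1) == seq_true) & mask
masked_acc = correct.sum() / mask.sum()
print(seq_inp.tolist(), seq_true.tolist(), mask.sum())  # [[2, 4, 5, 3, 0]] [[4, 5, 3, 0, 0]] 3
```

Note also that `train_step` adds up the five per-caption losses and divides only the accuracy by `num_captions_per_image`, so the logged `loss` is a sum over five captions rather than a per-token average.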

cnn_model = get_cnn_model()
encoder = TransformerEncoderBlock(embed_dim=EMBED_DIM, dense_dim=FF_DIM, num_heads=1)
decoder = TransformerDecoderBlock(embed_dim=EMBED_DIM, ff_dim=FF_DIM, num_heads=2)
caption_model = ImageCaptioningModel(
    cnn_model=cnn_model,
    encoder=encoder,
    decoder=decoder,
    image_aug=image_augmentation,
)

Model training

# Define the loss function; reduction=None keeps per-token losses so that
# padding positions can be masked out in calculate_loss
cross_entropy = keras.losses.SparseCategoricalCrossentropy(
    from_logits=False,
    reduction=None,
)

# EarlyStopping criteria
early_stopping = keras.callbacks.EarlyStopping(patience=3, restore_best_weights=True)


# Learning rate scheduler for the optimizer
class LRSchedule(keras.optimizers.schedules.LearningRateSchedule):
    def __init__(self, post_warmup_learning_rate, warmup_steps):
        super().__init__()
        self.post_warmup_learning_rate = post_warmup_learning_rate
        self.warmup_steps = warmup_steps

    def __call__(self, step):
        global_step = tf.cast(step, tf.float32)
        warmup_steps = tf.cast(self.warmup_steps, tf.float32)
        warmup_progress = global_step / warmup_steps
        warmup_learning_rate = self.post_warmup_learning_rate * warmup_progress
        # Linear warmup up to post_warmup_learning_rate, then constant
        return tf.cond(
            global_step < warmup_steps,
            lambda: warmup_learning_rate,
            lambda: self.post_warmup_learning_rate,
        )


# Create a learning rate schedule
num_train_steps = len(train_dataset) * EPOCHS
num_warmup_steps = num_train_steps // 15
lr_schedule = LRSchedule(post_warmup_learning_rate=1e-4, warmup_steps=num_warmup_steps)
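With the 96 batches per epoch shown in the training log and `EPOCHS = 30`, `num_train_steps = 2880` and `num_warmup_steps = 2880 // 15 = 192`. The pure-Python mirror of `LRSchedule.__call__` below (no TensorFlow) shows the linear warmup. One caveat, assuming the schedule is driven by the optimizer's step counter, which advances on every `apply_gradients` call: `train_step` calls `apply_gradients` once per caption, so the counter advances five times per batch and the warmup ends after roughly 38 batches (192 / 5 = 38.4) rather than 192.

```python
EPOCHS = 30
steps_per_epoch = 96  # from the "96/96" counter in the training log
num_train_steps = steps_per_epoch * EPOCHS
num_warmup_steps = num_train_steps // 15


def lr_at(step, post_warmup_learning_rate=1e-4, warmup_steps=num_warmup_steps):
    # Linear ramp from 0 up to post_warmup_learning_rate, then constant
    if step < warmup_steps:
        return post_warmup_learning_rate * step / warmup_steps
    return post_warmup_learning_rate


print(num_train_steps, num_warmup_steps)  # 2880 192
print(lr_at(0), lr_at(96), lr_at(192), lr_at(1000))
```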

# Compile the model
caption_model.compile(optimizer=keras.optimizers.Adam(lr_schedule), loss=cross_entropy)

# Fit the model
caption_model.fit(
    train_dataset,
    epochs=EPOCHS,
    validation_data=valid_dataset,
    callbacks=[early_stopping],
)

Epoch 1/30

/opt/conda/envs/keras-tensorflow/lib/python3.10/site-packages/keras/src/layers/layer.py:861: UserWarning: Layer 'query' (of type EinsumDense) was passed an input with a mask attached to it. However, this layer does not support masking and will therefore destroy the mask information. Downstream layers will not see the mask.
  warnings.warn(
/opt/conda/envs/keras-tensorflow/lib/python3.10/site-packages/keras/src/layers/layer.py:861: UserWarning: Layer 'key' (of type EinsumDense) was passed an input with a mask attached to it. However, this layer does not support masking and will therefore destroy the mask information. Downstream layers will not see the mask.
  warnings.warn(
/opt/conda/envs/keras-tensorflow/lib/python3.10/site-packages/keras/src/layers/layer.py:861: UserWarning: Layer 'value' (of type EinsumDense) was passed an input with a mask attached to it. However, this layer does not support masking and will therefore destroy the mask information. Downstream layers will not see the mask.
  warnings.warn(

96/96 ━━━━━━━━━━━━━━━━━━━━ 91s 477ms/step - accuracy: 0.1324 - loss: 35.2713 - acc: 0.2120 - val_accuracy: 0.3117 - val_loss: 20.4337
Epoch 2/30
96/96 ━━━━━━━━━━━━━━━━━━━━ 38s 398ms/step - accuracy: 0.3203 - loss: 19.9756 - acc: 0.3300 - val_accuracy: 0.3517 - val_loss: 18.0001
Epoch 3/30
96/96 ━━━━━━━━━━━━━━━━━━━━ 38s 394ms/step - accuracy: 0.3533 - loss: 17.7575 - acc: 0.3586 - val_accuracy: 0.3694 - val_loss: 16.9179
Epoch 4/30
96/96 ━━━━━━━━━━━━━━━━━━━━ 38s 396ms/step - accuracy: 0.3721 - loss: 16.6177 - acc: 0.3750 - val_accuracy: 0.3781 - val_loss: 16.3415
Epoch 5/30
96/96 ━━━━━━━━━━━━━━━━━━━━ 38s 394ms/step - accuracy: 0.3840 - loss: 15.8190 - acc: 0.3872 - val_accuracy: 0.3876 - val_loss: 15.8820
Epoch 6/30
96/96 ━━━━━━━━━━━━━━━━━━━━ 37s 390ms/step - accuracy: 0.3959 - loss: 15.1802 - acc: 0.3973 - val_accuracy: 0.3933 - val_loss: 15.6454
Epoch 7/30
96/96 ━━━━━━━━━━━━━━━━━━━━ 38s 395ms/step - accuracy: 0.4035 - loss: 14.7098 - acc: 0.4054 - val_accuracy: 0.3956 - val_loss: 15.4308
Epoch 8/30
96/96 ━━━━━━━━━━━━━━━━━━━━ 38s 394ms/step - accuracy: 0.4128 - loss: 14.2644 - acc: 0.4128 - val_accuracy: 0.4001 - val_loss: 15.2675
Epoch 9/30
96/96 ━━━━━━━━━━━━━━━━━━━━ 38s 393ms/step - accuracy: 0.4180 - loss: 13.9154 - acc: 0.4196 - val_accuracy: 0.4034 - val_loss: 15.1764
Epoch 10/30
96/96 ━━━━━━━━━━━━━━━━━━━━ 37s 390ms/step - accuracy: 0.4256 - loss: 13.5624 - acc: 0.4261 - val_accuracy: 0.4040 - val_loss: 15.1567
Epoch 11/30
96/96 ━━━━━━━━━━━━━━━━━━━━ 38s 393ms/step - accuracy: 0.4310 - loss: 13.2789 - acc: 0.4325 - val_accuracy: 0.4053 - val_loss: 15.0365
Epoch 12/30
96/96 ━━━━━━━━━━━━━━━━━━━━ 38s 399ms/step - accuracy: 0.4366 - loss: 13.0339 - acc: 0.4371 - val_accuracy: 0.4084 - val_loss: 15.0476
Epoch 13/30
96/96 ━━━━━━━━━━━━━━━━━━━━ 38s 392ms/step - accuracy: 0.4417 - loss: 12.7608 - acc: 0.4432 - val_accuracy: 0.4090 - val_loss: 15.0246
Epoch 14/30
96/96 ━━━━━━━━━━━━━━━━━━━━ 38s 391ms/step - accuracy: 0.4481 - loss: 12.5039 - acc: 0.4485 - val_accuracy: 0.4083 - val_loss: 14.9793
Epoch 15/30
96/96 ━━━━━━━━━━━━━━━━━━━━ 38s 391ms/step - accuracy: 0.4533 - loss: 12.2946 - acc: 0.4542 - val_accuracy: 0.4109 - val_loss: 15.0170
Epoch 16/30
96/96 ━━━━━━━━━━━━━━━━━━━━ 37s 387ms/step - accuracy: 0.4583 - loss: 12.0837 - acc: 0.4588 - val_accuracy: 0.4099 - val_loss: 15.1056
Epoch 17/30
96/96 ━━━━━━━━━━━━━━━━━━━━ 37s 389ms/step - accuracy: 0.4617 - loss: 11.8657 - acc: 0.4624 - val_accuracy: 0.4088 - val_loss: 15.1226

<keras.src.callbacks.history.History at 0x7fcd08887fa0>
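Training stopped at epoch 17 of 30 because of the `EarlyStopping(patience=3, restore_best_weights=True)` callback. The sketch below replays the `val_loss` values from the log through a simplified version of the patience rule (stop once `patience` consecutive epochs fail to improve on the best value, with the default `min_delta=0`); it is a simplification written for illustration, not Keras's own implementation.

```python
# val_loss per epoch, copied from the training log
val_losses = [
    20.4337, 18.0001, 16.9179, 16.3415, 15.8820, 15.6454, 15.4308, 15.2675,
    15.1764, 15.1567, 15.0365, 15.0476, 15.0246, 14.9793, 15.0170, 15.1056,
    15.1226,
]


def replay_early_stopping(losses, patience=3):
    best, best_epoch, wait = float("inf"), None, 0
    for epoch, loss in enumerate(losses, start=1):
        if loss < best:  # improvement (min_delta = 0)
            best, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch
    return len(losses), best_epoch


stop_epoch, best_epoch = replay_early_stopping(val_losses)
print(stop_epoch, best_epoch)  # 17 14
```

With `restore_best_weights=True`, the model therefore ends up with the weights from epoch 14, the epoch with the lowest validation loss.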

Check sample predictions

vocab = vectorization.get_vocabulary()
index_lookup = dict(zip(range(len(vocab)), vocab))
max_decoded_sentence_length = SEQ_LENGTH - 1
valid_images = list(valid_data.keys())


def generate_caption():
    # Select a random image from the validation dataset
    sample_img = np.random.choice(valid_images)

    # Read the image from the disk
    sample_img = decode_and_resize(sample_img)
    img = sample_img.numpy().clip(0, 255).astype(np.uint8)
    plt.imshow(img)
    plt.show()

    # Pass the image to the CNN
    img = tf.expand_dims(sample_img, 0)
    img = caption_model.cnn_model(img)

    # Pass the image features to the Transformer encoder
    encoded_img = caption_model.encoder(img, training=False)

    # Generate the caption using the Transformer decoder (greedy decoding)
    decoded_caption = "<start> "
    for i in range(max_decoded_sentence_length):
        tokenized_caption = vectorization([decoded_caption])[:, :-1]
        mask = tf.math.not_equal(tokenized_caption, 0)
        predictions = caption_model.decoder(
            tokenized_caption, encoded_img, training=False, mask=mask
        )
        # Keep the most probable token at the current position i
        sampled_token_index = np.argmax(predictions[0, i, :])
        sampled_token = index_lookup[sampled_token_index]
        if sampled_token == "<end>":
            break
        decoded_caption += " " + sampled_token

    decoded_caption = decoded_caption.replace("<start> ", "")
    decoded_caption = decoded_caption.replace(" <end>", "").strip()
    print("Predicted Caption: ", decoded_caption)
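`generate_caption` performs greedy decoding: at step `i` it re-runs the decoder on the caption generated so far and keeps the most probable token at position `i`, stopping at `<end>` or after `SEQ_LENGTH - 1` tokens. (In the real function the tokenized caption is padded to a fixed length, and the causal mask ensures position `i` only sees tokens up to `i`.) The sketch below isolates that loop; `toy_decoder` is a hypothetical stand-in that returns scripted probabilities instead of a trained model, and the vocabulary is made up.

```python
import numpy as np

index_lookup = {0: "", 1: "[UNK]", 2: "<start>", 3: "<end>", 4: "a", 5: "dog", 6: "runs"}
SEQ_LENGTH = 25


def toy_decoder(tokens):
    # Hypothetical decoder: next-token probabilities for every position
    scripted = [4, 5, 6, 3]  # "a dog runs <end>"
    probs = np.full((len(tokens), len(index_lookup)), 0.01)
    for pos in range(len(tokens)):
        probs[pos, scripted[min(pos, len(scripted) - 1)]] = 1.0
    return probs


def greedy_decode(decoder, max_len=SEQ_LENGTH - 1):
    tokens = ["<start>"]
    for i in range(max_len):
        probs = decoder(tokens)  # (len(tokens), vocab)
        word = index_lookup[int(np.argmax(probs[i]))]  # most probable token at step i
        if word == "<end>":
            break
        tokens.append(word)
    return " ".join(tokens[1:])


print(greedy_decode(toy_decoder))  # a dog runs
```

Greedy decoding is the simplest strategy; beam search is a common alternative that keeps several candidate captions at each step, at a higher computational cost.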

# 检查几个样本的预测

generate_caption()

generate_caption()

generate_caption()

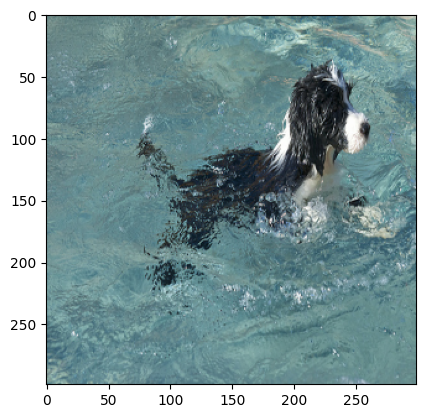

预测标题: 一只黑白色的狗在游泳池里游泳

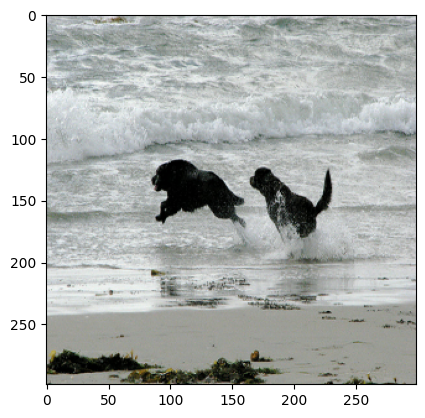

预测标题: 一只黑色的狗在水中奔跑

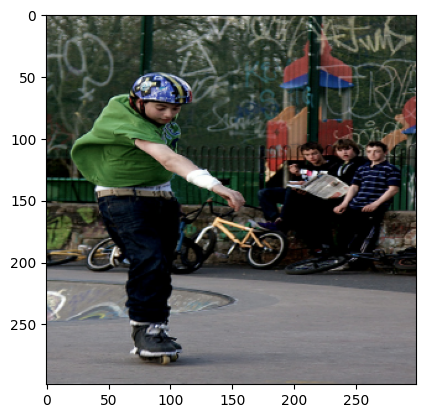

预测标题: 一名穿着绿色衬衫和绿色裤子的男士正在骑自行车

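Closing remarks

We saw that the model starts to generate reasonable captions after a few epochs. To keep this example easily runnable, we trained it under a few constraints, such as a minimal number of attention heads. To improve the predictions, you can try changing these training settings to find a good model for your use case.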