Text Data Explanation Benchmarking: Abstractive Summarization

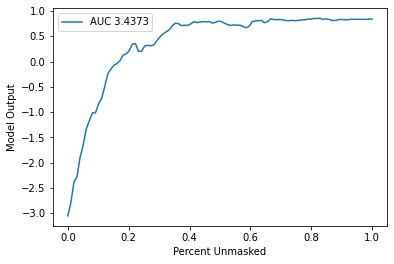

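This notebook demonstrates how to use the benchmark utility to measure the performance of text data explainers. In this demo, we evaluate the explanations produced by the Partition explainer on an abstractive summarization model. The evaluation metric is "keep positive", and the masker is the Text masker.

The new benchmark utility uses the new API. MaskedModel wraps the user's imported model and evaluates it on masked versions of the input.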
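The following toy sketch illustrates the MaskedModel idea. It is not shap's actual `MaskedModel` class. A wrapper fixes one tokenized input and exposes the model as a function of a boolean mask, replacing hidden tokens with a placeholder. Whitespace tokenization, the `[MASK]` placeholder, and the scoring function `toy_model` are all hypothetical. shap's Text masker works on the tokenizer's own tokens instead.

```python
from typing import Callable, Sequence

MASK_TOKEN = "[MASK]"  # hypothetical placeholder for a hidden token


def make_masked_model(
    model: Callable[[str], float], tokens: Sequence[str]
) -> Callable[[Sequence[bool]], float]:
    """Fix one tokenized input and return a function of a boolean mask.

    True keeps a token; False replaces it with MASK_TOKEN before calling `model`.
    """

    def masked_model(mask: Sequence[bool]) -> float:
        if len(mask) != len(tokens):
            raise ValueError("mask length must equal the number of tokens")
        masked = [tok if keep else MASK_TOKEN for tok, keep in zip(tokens, mask)]
        return model(" ".join(masked))

    return masked_model


def toy_model(text: str) -> float:
    """Hypothetical stand-in for a summarizer score: counts the word "storm"."""
    return float(text.split().count("storm"))


tokens = ["the", "storm", "hit", "wales"]
mm = make_masked_model(toy_model, tokens)
print(mm([True, True, True, True]))   # 1.0
print(mm([True, False, True, True]))  # 0.0
```

The benchmark only needs to call a function of this shape. It never touches the raw text directly, which is why the wrapper sits between the explainer and the user's model.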

[1]:

import datasets
from transformers import AutoModelForSeq2SeqLM, AutoTokenizer
import shap
import shap.benchmark as benchmark

Load the data and model

[2]:

tokenizer = AutoTokenizer.from_pretrained("sshleifer/distilbart-xsum-12-6")
model = AutoModelForSeq2SeqLM.from_pretrained("sshleifer/distilbart-xsum-12-6")

[3]:

# The Hugging Face `nlp` library was renamed to `datasets`; `load_dataset` keeps the same call signature.
dataset = datasets.load_dataset("xsum", split="train")

Using custom data configuration default

[4]:

s = dataset["document"][0:1]

Create the explainer object

[5]:

explainer = shap.Explainer(model, tokenizer)

explainers.Partition is still in an alpha state, so use with caution...

Compute the SHAP explanations

[6]:

shap_values = explainer(s)

Partition explainer: 2it [00:43, 21.70s/it]
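According to the SHAP documentation, the Partition explainer estimates Owen values, which are Shapley values computed over a hierarchical grouping of the tokens. The brute-force sketch below instead computes plain Shapley values exactly for a tiny toy model. Its purpose is to show the additivity property the explanations rely on: the attributions sum to the change in model output between the fully kept and fully masked inputs. The function names and the toy value function are hypothetical, and the enumeration is exponential in the number of tokens, so it only works for toy sizes.

```python
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence


def exact_shapley(value: Callable[[Sequence[bool]], float], n: int) -> List[float]:
    """Exact Shapley values by enumerating every coalition (exponential in n)."""
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size that exclude feature i
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                mask = [j in subset for j in range(n)]
                without_i = value(mask)
                mask[i] = True
                with_i = value(mask)
                phi[i] += weight * (with_i - without_i)
    return phi


def toy_value(mask: Sequence[bool]) -> float:
    """Hypothetical 3-token model: token 0 adds 2.0; tokens 1 and 2 add 1.0 only together."""
    return 2.0 * mask[0] + 1.0 * (mask[1] and mask[2])


phi = exact_shapley(toy_value, 3)
print([round(p, 6) for p in phi])  # [2.0, 0.5, 0.5]
```

The 1.0 interaction between tokens 1 and 2 is split evenly between them, and the total 2.0 + 0.5 + 0.5 equals `toy_value` with everything kept minus `toy_value` with everything masked.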

Define the metric (sort order & perturbation method)

[7]:

sort_order = "positive"

perturbation = "keep"
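The two settings above can be read as follows. `sort_order` decides which features are perturbed first, and `perturbation = "keep"` means the benchmark starts from a fully masked input and unmasks features one at a time in that order. The sketch below is a hypothetical reimplementation of that reading, not shap's code. The `"absolute"` option is an assumption included for comparison, and `feature_order` and `keep_masks` are illustrative names.

```python
from typing import List, Sequence


def feature_order(attributions: Sequence[float], sort_order: str) -> List[int]:
    """Return feature indices in the order they will be perturbed."""
    idx = range(len(attributions))
    if sort_order == "positive":  # most positive attribution first
        return sorted(idx, key=lambda i: -attributions[i])
    if sort_order == "negative":  # most negative attribution first
        return sorted(idx, key=lambda i: attributions[i])
    if sort_order == "absolute":  # assumed option: largest magnitude first
        return sorted(idx, key=lambda i: -abs(attributions[i]))
    raise ValueError(f"unknown sort_order: {sort_order!r}")


def keep_masks(order: Sequence[int], n: int) -> List[List[bool]]:
    """'keep' perturbation: start fully masked, then unmask features in `order`."""
    mask = [False] * n
    masks = [list(mask)]
    for i in order:
        mask[i] = True
        masks.append(list(mask))
    return masks


order = feature_order([0.1, 2.0, -0.5], "positive")
print(order)                 # [1, 0, 2]
print(keep_masks(order, 3))  # [[False, False, False], [False, True, False], [True, True, False], [True, True, True]]
```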

Benchmark the explainer

[8]:

sp = benchmark.perturbation.SequentialPerturbation(
    explainer.model, explainer.masker, sort_order, perturbation
)
xs, ys, auc = sp.model_score(shap_values, s)
sp.plot(xs, ys, auc)
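The returned `xs`, `ys`, and `auc` can be read as a curve of model output against the fraction of features kept, together with the area under that curve. The sketch below shows one way such a "keep positive" curve and its area could be computed, using the trapezoidal rule on an unnormalized toy output. shap's own implementation may normalize or link-transform the outputs differently. `keep_curve_auc` and `toy_value` are hypothetical names.

```python
from typing import Callable, List, Sequence, Tuple


def keep_curve_auc(
    value: Callable[[Sequence[bool]], float], order: Sequence[int]
) -> Tuple[List[float], List[float], float]:
    """Unmask features in `order`, recording output vs. fraction kept, plus trapezoidal AUC."""
    n = len(order)
    mask = [False] * n
    xs = [0.0]
    ys = [value(mask)]
    for k, i in enumerate(order, start=1):
        mask[i] = True
        xs.append(k / n)
        ys.append(value(mask))
    # Trapezoidal rule over consecutive points of the curve
    auc = sum((xs[j + 1] - xs[j]) * (ys[j] + ys[j + 1]) / 2 for j in range(n))
    return xs, ys, auc


def toy_value(mask: Sequence[bool]) -> float:
    """Hypothetical 3-token model: token 0 adds 2.0; tokens 1 and 2 add 1.0 only together."""
    return 2.0 * mask[0] + 1.0 * (mask[1] and mask[2])


xs, ys, auc = keep_curve_auc(toy_value, [0, 1, 2])  # order from a good explanation
print(ys)              # [0.0, 2.0, 2.0, 3.0]
print(round(auc, 4))   # 1.8333
_, _, worse = keep_curve_auc(toy_value, [1, 2, 0])  # order from a poor explanation
print(round(worse, 4))  # 0.8333
```

An explanation that ranks the most influential tokens first makes the curve rise sooner, so a larger keep-positive AUC indicates a better explanation under this metric.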