时间序列深度编码器¶

本笔记本演示了如何使用 Darts 的 TiDEModel 并将其与 NHiTSModel 进行基准测试。

TiDE(时间序列密集编码器)是一种纯深度学习编码器-解码器架构。它的特殊之处在于,时间解码器可以帮助减轻异常样本对预测的影响(论文中的图4)。

请参阅原始论文和模型描述:http://arxiv.org/abs/2304.08424。

[1]:

# fix python path if working locally

from utils import fix_pythonpath_if_working_locally

fix_pythonpath_if_working_locally()

%matplotlib inline

[2]:

%load_ext autoreload

%autoreload 2

%matplotlib inline

[3]:

import torch

import numpy as np

import pandas as pd

import shutil

from darts.models import NHiTSModel, TiDEModel

from darts.datasets import AusBeerDataset

from darts.dataprocessing.transformers.scaler import Scaler

from pytorch_lightning.callbacks.early_stopping import EarlyStopping

from darts.metrics import mae, mse

import matplotlib.pyplot as plt

import warnings

warnings.filterwarnings("ignore")

import logging

logging.disable(logging.CRITICAL)

模型参数设置¶

样板代码毫无乐趣,尤其是在训练多个模型以比较性能的背景下。为了避免这种情况,我们使用一个通用配置,该配置可以与任何 Darts TorchForecastingModel 一起使用。

关于这些参数的一些有趣的事情:

梯度裁剪: 通过为一批数据设置梯度上限,来缓解反向传播过程中的梯度爆炸问题。

学习率: 模型的大部分学习发生在早期的epochs。随着训练的进行,通常有助于降低学习率以微调模型。尽管如此,这也可能导致显著的过拟合。

早停法: 为了避免过拟合,我们可以使用早停法。它监控验证集上的一个指标,并根据自定义条件在指标不再改善时停止训练。

[4]:

optimizer_kwargs = {

"lr": 1e-3,

}

# PyTorch Lightning Trainer arguments

pl_trainer_kwargs = {

"gradient_clip_val": 1,

"max_epochs": 200,

"accelerator": "auto",

"callbacks": [],

}

# learning rate scheduler

lr_scheduler_cls = torch.optim.lr_scheduler.ExponentialLR

lr_scheduler_kwargs = {

"gamma": 0.999,

}

# early stopping (needs to be reset for each model later on)

# this setting stops training once the the validation loss has not decreased by more than 1e-3 for 10 epochs

early_stopping_args = {

"monitor": "val_loss",

"patience": 10,

"min_delta": 1e-3,

"mode": "min",

}

#

common_model_args = {

"input_chunk_length": 12, # lookback window

"output_chunk_length": 12, # forecast/lookahead window

"optimizer_kwargs": optimizer_kwargs,

"pl_trainer_kwargs": pl_trainer_kwargs,

"lr_scheduler_cls": lr_scheduler_cls,

"lr_scheduler_kwargs": lr_scheduler_kwargs,

"likelihood": None, # use a likelihood for probabilistic forecasts

"save_checkpoints": True, # checkpoint to retrieve the best performing model state,

"force_reset": True,

"batch_size": 256,

"random_state": 42,

}

数据加载与准备¶

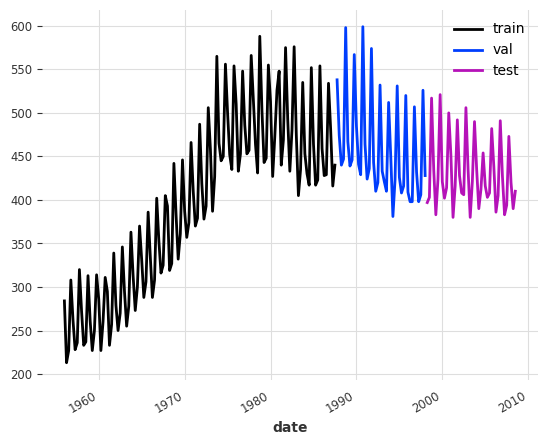

我们考虑澳大利亚每季度的啤酒销售量,单位为兆升。

在训练之前,我们将数据分为训练集、验证集和测试集。模型将从训练集学习,使用验证集来决定何时停止训练,最后在测试集上进行评估。

为了避免验证集和测试集的信息泄露,我们根据训练集的属性来缩放数据。

[5]:

series = AusBeerDataset().load()

train, temp = series.split_after(0.6)

val, test = temp.split_after(0.5)

[6]:

train.plot(label="train")

val.plot(label="val")

test.plot(label="test")

[7]:

scaler = Scaler() # default uses sklearn's MinMaxScaler

train = scaler.fit_transform(train)

val = scaler.transform(val)

test = scaler.transform(test)

模型配置¶

使用已经建立的共享参数,我们可以看到NHiTS和TiDE的默认参数被使用。唯一的例外是,TiDE在有无 Reversible Instance Normalization 的情况下都进行了测试。

然后我们遍历模型字典并训练所有模型。在使用提前停止时,保存检查点非常重要。这使我们能够继续超越最佳模型配置,然后在训练完成后恢复最佳权重。

[8]:

# create the models

model_nhits = NHiTSModel(**common_model_args, model_name="hi")

model_tide = TiDEModel(

**common_model_args, use_reversible_instance_norm=False, model_name="tide0"

)

model_tide_rin = TiDEModel(

**common_model_args, use_reversible_instance_norm=True, model_name="tide1"

)

models = {

"NHiTS": model_nhits,

"TiDE": model_tide,

"TiDE+RIN": model_tide_rin,

}

[9]:

# train the models and load the model from its best state/checkpoint

for name, model in models.items():

# early stopping needs to get reset for each model

pl_trainer_kwargs["callbacks"] = [

EarlyStopping(

**early_stopping_args,

)

]

model.fit(

series=train,

val_series=val,

verbose=False,

)

# load from checkpoint returns a new model object, we store it in the models dict

models[name] = model.load_from_checkpoint(model_name=model.model_name, best=True)

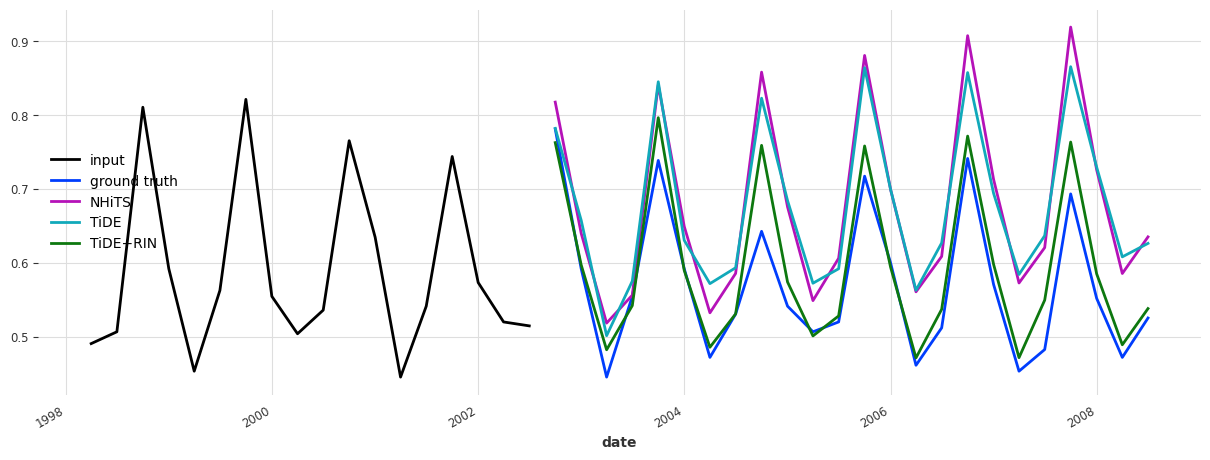

[10]:

# we will predict the next `pred_steps` points after the end of `pred_input`

pred_steps = common_model_args["output_chunk_length"] * 2

pred_input = test[:-pred_steps]

fig, ax = plt.subplots(figsize=(15, 5))

pred_input.plot(label="input")

test[-pred_steps:].plot(label="ground truth", ax=ax)

result_accumulator = {}

# predict with each model and compute/store the metrics against the test sets

for model_name, model in models.items():

pred_series = model.predict(n=pred_steps, series=pred_input)

pred_series.plot(label=model_name, ax=ax)

result_accumulator[model_name] = {

"mae": mae(test, pred_series),

"mse": mse(test, pred_series),

}

结果¶

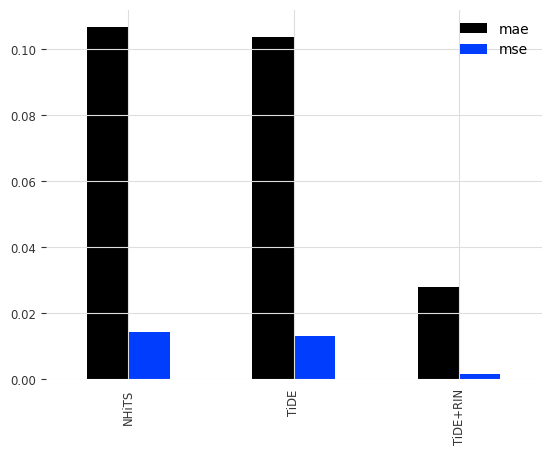

在这种情况下,普通 TiDE 与 NHiTs 同样准确。包含可逆实例归一化 (RINorm) 极大地帮助改进了 TiDE 的预测(请记住,这只是一个例子,并不总是保证能提高性能)。

[11]:

results_df = pd.DataFrame.from_dict(result_accumulator, orient="index")

results_df.plot.bar()

[11]:

<Axes: >