Aim

Aim makes it very easy to visualize and debug LangChain executions. Aim tracks the inputs and outputs of LLMs and tools, as well as the actions of agents.

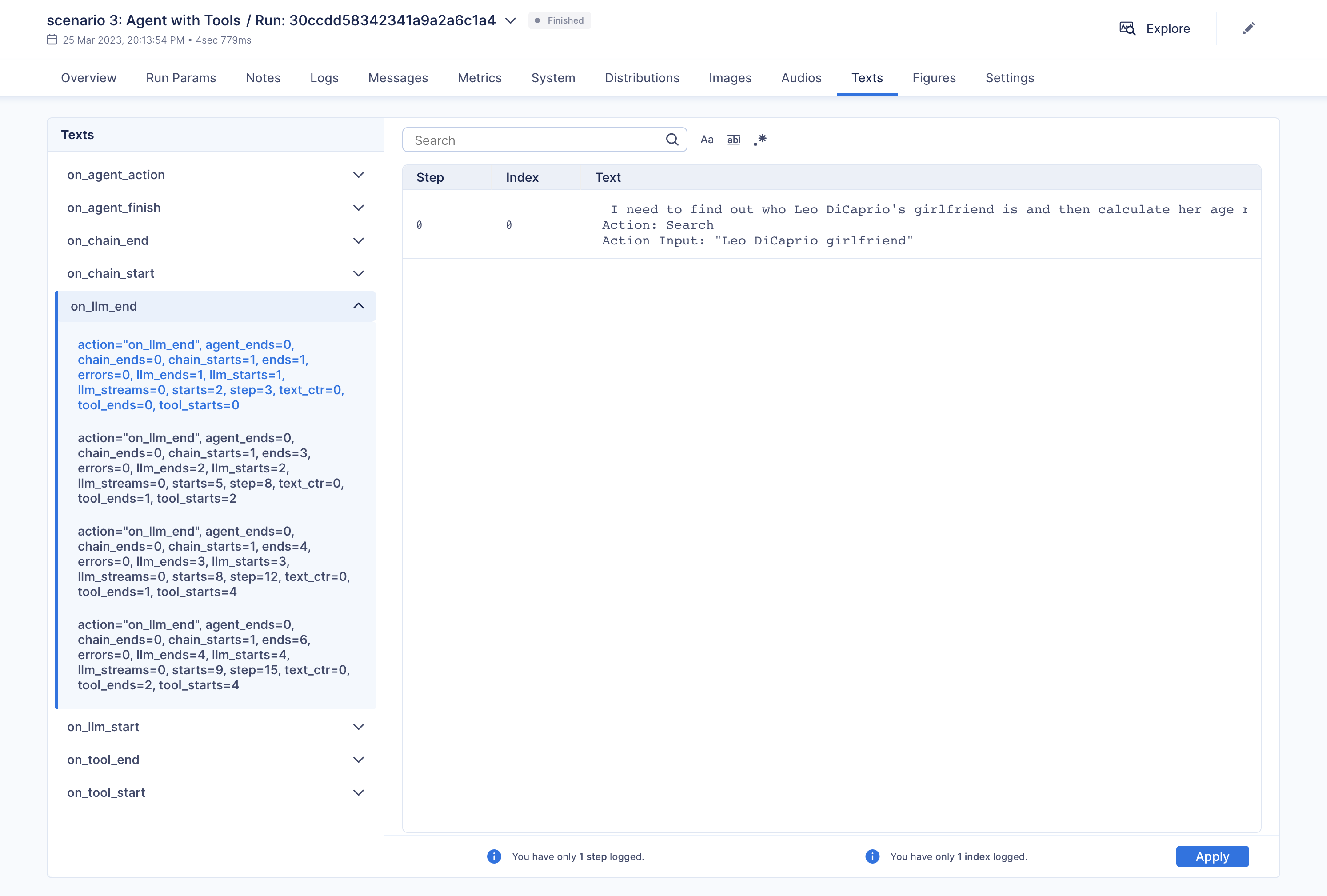

With Aim, you can easily debug and examine an individual execution:

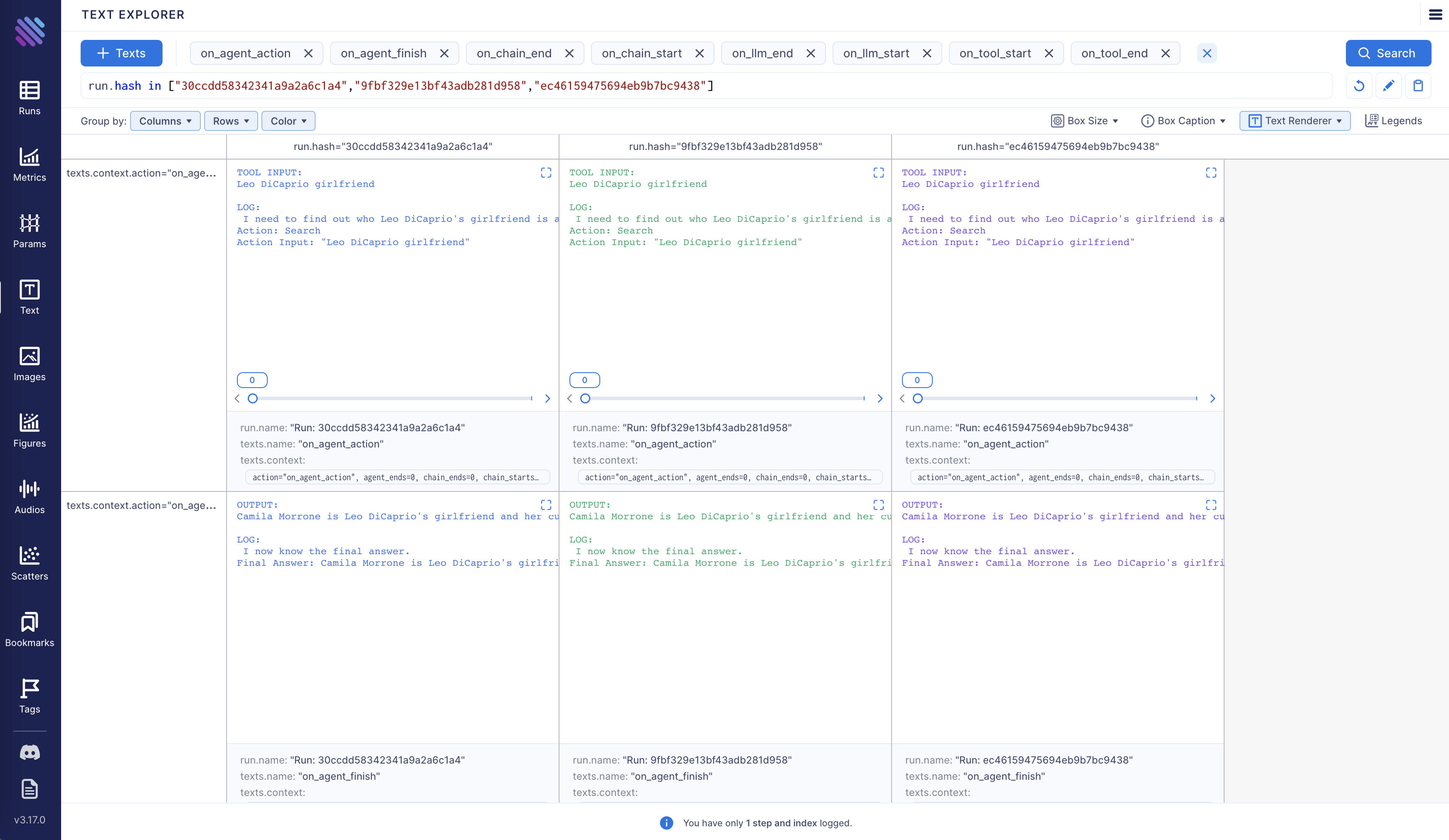

Additionally, you can compare multiple executions side by side:

Aim is fully open source; learn more about Aim on GitHub.

Let's move forward and see how to enable and configure the Aim callback.

Tracking LangChain Executions with Aim

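In this notebook, we will explore three usage scenarios. First, we install the necessary packages and import the required modules. Then we configure two environment variables, which can be set either within the Python script or in the terminal.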

```python
%pip install --upgrade --quiet aim
%pip install --upgrade --quiet langchain
%pip install --upgrade --quiet langchain-community
%pip install --upgrade --quiet langchain-openai
%pip install --upgrade --quiet google-search-results
```

```python
import os
from datetime import datetime

from langchain_community.callbacks import AimCallbackHandler
from langchain_core.callbacks import StdOutCallbackHandler
from langchain_openai import OpenAI
```

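Our examples use a GPT model as the LLM, and OpenAI provides an API for this purpose. You can obtain a key at https://platform.openai.com/account/api-keys.

We will use SerpApi to retrieve search results from Google. To get a SerpApi key, visit https://serpapi.com/manage-api-key.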

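```python
# Replace "..." with your own keys, or export these variables in the terminal instead.
os.environ["OPENAI_API_KEY"] = "..."
os.environ["SERPAPI_API_KEY"] = "..."
```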
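The two variables can also be exported in the terminal before the notebook starts. The sketch below is a minimal version that assumes values already exported in the shell should take precedence over the placeholders. `os.environ.setdefault` only assigns a key that is not yet set. The hypothetical helper `missing_keys` (not part of LangChain or Aim) flags keys that are absent, empty, or still hold the `"..."` placeholder.

```python
import os

# Keys this notebook relies on.
REQUIRED_KEYS = ("OPENAI_API_KEY", "SERPAPI_API_KEY")


def missing_keys(keys=REQUIRED_KEYS, placeholder="..."):
    """Hypothetical helper: return keys that are unset, empty, or still the placeholder."""
    return [k for k in keys if os.environ.get(k, placeholder) in ("", placeholder)]


# setdefault keeps any value already exported in the terminal
# and only writes the placeholder when the key is absent.
for key in REQUIRED_KEYS:
    os.environ.setdefault(key, "...")

if missing_keys():
    print("Set real values for:", ", ".join(missing_keys()))
```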

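The event methods of `AimCallbackHandler` accept a LangChain module or agent as input. They log at least the prompts and generated results, together with a serialized version of the LangChain module, to the designated Aim run.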
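The toy, stdlib-only sketch below illustrates the callback pattern. It is not Aim's or LangChain's implementation. `ToyRun` and `ToyTracker` are hypothetical stand-ins for an Aim run and for `AimCallbackHandler`. The hook names `on_llm_start` and `on_llm_end` mirror LangChain's callback hooks, but their simplified signatures here are assumptions. The model fires the hooks around each call, and the handler appends the prompts and generated text to its run.

```python
from dataclasses import dataclass, field


@dataclass
class ToyRun:
    """Stand-in for an Aim run: an experiment name plus logged records."""
    experiment: str
    records: list = field(default_factory=list)


class ToyTracker:
    """Toy callback handler that logs prompts and generations to a run."""

    def __init__(self, experiment):
        self.run = ToyRun(experiment)

    def on_llm_start(self, prompts):
        # Fired before the model runs: record the inputs.
        for p in prompts:
            self.run.records.append(("prompt", p))

    def on_llm_end(self, generations):
        # Fired after the model returns: record the outputs.
        for g in generations:
            self.run.records.append(("generation", g))


def fake_llm(prompts, callbacks):
    """Hypothetical model that echoes its prompts, firing callbacks around the call."""
    for cb in callbacks:
        cb.on_llm_start(prompts)
    outputs = [f"echo: {p}" for p in prompts]
    for cb in callbacks:
        cb.on_llm_end(outputs)
    return outputs


tracker = ToyTracker("scenario 1: OpenAI LLM")
fake_llm(["Tell me a joke"], callbacks=[tracker])
print(tracker.run.records)
# [('prompt', 'Tell me a joke'), ('generation', 'echo: Tell me a joke')]
```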

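```python
# Timestamp label for this session (not passed to the handler in this notebook).
session_group = datetime.now().strftime("%m.%d.%Y_%H.%M.%S")

# Track runs in an Aim repository located in the current directory.
aim_callback = AimCallbackHandler(
    repo=".",
    experiment_name="scenario 1: OpenAI LLM",
)

# Print events to stdout and track them in Aim at the same time.
callbacks = [StdOutCallbackHandler(), aim_callback]
llm = OpenAI(temperature=0, callbacks=callbacks)
```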

The `flush_tracker` function records LangChain assets in Aim. By default, the session is reset rather than terminated outright.
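The toy model below illustrates that lifecycle, assuming the behavior described above. With the default `reset=True`, a flush logs the asset and continues in a new session, optionally under a new `experiment_name`. The three scenarios chain together this way, and the last flush passes `reset=False, finish=True` to end tracking. `ToySession` is hypothetical. It only mimics these flags and does not communicate with Aim.

```python
class ToySession:
    """Hypothetical stand-in that mimics only the reset/finish flags."""

    def __init__(self, experiment_name):
        self.experiment_name = experiment_name
        self.session_count = 1
        self.flushed = []  # (experiment name, asset) pairs logged so far
        self.finished = False

    def flush_tracker(self, langchain_asset, experiment_name=None, reset=True, finish=False):
        if self.finished:
            raise RuntimeError("session already finished")
        # Log the asset under the current experiment.
        self.flushed.append((self.experiment_name, langchain_asset))
        if reset:
            # Begin a new session, switching experiment name if one is given.
            self.session_count += 1
            if experiment_name is not None:
                self.experiment_name = experiment_name
        if finish:
            # End tracking; further flushes raise an error.
            self.finished = True


# Experiment names for scenarios 2 and 3 are shortened here.
session = ToySession("scenario 1: OpenAI LLM")
session.flush_tracker("llm", experiment_name="scenario 2")
session.flush_tracker("synopsis_chain", experiment_name="scenario 3")
session.flush_tracker("agent", reset=False, finish=True)
print(session.flushed)
# [('scenario 1: OpenAI LLM', 'llm'), ('scenario 2', 'synopsis_chain'), ('scenario 3', 'agent')]
```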

Scenario 1

In the first scenario, we will use the OpenAI LLM.

```python
# scenario 1 - LLM
llm_result = llm.generate(["Tell me a joke", "Tell me a poem"] * 3)

# Log the LLM to Aim, then reset the session under the next experiment name.
aim_callback.flush_tracker(
    langchain_asset=llm,
    experiment_name="scenario 2: Chain with multiple SubChains on multiple generations",
)
```
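Scenario 1 sends a single batch. Multiplying the two-item list by 3 repeats it, so `generate` receives six prompts: three jokes and three poems, interleaved. The returned result's `generations` list then holds one entry per prompt. The snippet only checks the list arithmetic and does not call the model.

```python
# The exact prompt list passed to llm.generate in scenario 1.
prompts = ["Tell me a joke", "Tell me a poem"] * 3

print(len(prompts))  # 6
print(prompts[:3])   # ['Tell me a joke', 'Tell me a poem', 'Tell me a joke']
```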

Scenario 2

Scenario two involves chaining with multiple SubChains across multiple generations.

```python
from langchain.chains import LLMChain
from langchain_core.prompts import PromptTemplate
```


```python
# scenario 2 - Chain
template = """You are a playwright. Given the title of play, it is your job to write a synopsis for that title.
Title: {title}
Playwright: This is a synopsis for the above play:"""
prompt_template = PromptTemplate(input_variables=["title"], template=template)
synopsis_chain = LLMChain(llm=llm, prompt=prompt_template, callbacks=callbacks)

test_prompts = [
    {
        "title": "documentary about good video games that push the boundary of game design"
    },
    {"title": "the phenomenon behind the remarkable speed of cheetahs"},
    {"title": "the best in class mlops tooling"},
]
synopsis_chain.apply(test_prompts)

# Log the chain to Aim, then reset the session for scenario 3.
aim_callback.flush_tracker(
    langchain_asset=synopsis_chain, experiment_name="scenario 3: Agent with Tools"
)
```
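`PromptTemplate` uses f-string-style placeholders by default, so `{title}` is substituted much as Python's `str.format` would substitute it. `apply` produces one output per input dict. The sketch below approximates the prompt-rendering step using only `str.format`. It renders the three prompts the chain would send and skips the LLM entirely.

```python
# The scenario 2 template text.
template = """You are a playwright. Given the title of play, it is your job to write a synopsis for that title.
Title: {title}
Playwright: This is a synopsis for the above play:"""

test_prompts = [
    {"title": "documentary about good video games that push the boundary of game design"},
    {"title": "the phenomenon behind the remarkable speed of cheetahs"},
    {"title": "the best in class mlops tooling"},
]

# One rendered prompt per input dict, approximating what the chain sends to the LLM.
rendered = [template.format(**inputs) for inputs in test_prompts]
print(rendered[2])
```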

Scenario 3

The third scenario involves an agent with tools.

```python
from langchain.agents import AgentType, initialize_agent, load_tools

# scenario 3 - Agent with Tools
tools = load_tools(["serpapi", "llm-math"], llm=llm, callbacks=callbacks)
agent = initialize_agent(
    tools,
    llm,
    agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION,
    callbacks=callbacks,
)
agent.run(
    "Who is Leo DiCaprio's girlfriend? What is her current age raised to the 0.43 power?"
)

# Log the agent and finish tracking instead of starting a new session.
aim_callback.flush_tracker(langchain_asset=agent, reset=False, finish=True)
```
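With `ZERO_SHOT_REACT_DESCRIPTION`, the LLM chooses at each step which tool to call by name and with what input. The tool's return value then comes back to the agent as an observation. The toy dispatcher below mimics that loop with a hard-coded plan in place of the LLM. The `search` stub, the `calculator` function, the `TOOLS` dict, and the plan are all hypothetical and call neither SerpApi nor OpenAI. The tool names `Search` and `Calculator` match those that appear in the agent's trace.

```python
import math


def search(query):
    """Hypothetical stub for the SerpApi-backed Search tool (no network call)."""
    canned = {"Camila Morrone age": "25 years"}  # value copied from the trace
    return canned.get(query, "no result")


def calculator(expression):
    """Toy calculator that only understands 'a^b' (power) expressions."""
    base, exponent = (float(part) for part in expression.split("^"))
    return f"Answer: {math.pow(base, exponent)}"


# Tool registry: the agent refers to tools by these names.
TOOLS = {"Search": search, "Calculator": calculator}

# Fixed (action, action input) plan; a real agent asks the LLM for each step.
plan = [("Search", "Camila Morrone age"), ("Calculator", "25^0.43")]

observations = []
for action, action_input in plan:
    observation = TOOLS[action](action_input)
    observations.append(observation)
    print(f"Action: {action} | Input: {action_input} | Observation: {observation}")
```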

```
> Entering new AgentExecutor chain...
 I need to find out who Leo DiCaprio's girlfriend is and then calculate her age raised to the 0.43 power.
Action: Search
Action Input: "Leo DiCaprio girlfriend"
Observation: Leonardo DiCaprio seemed to prove a long-held theory about his love life right after splitting from girlfriend Camila Morrone just months ...
Thought: I need to find out Camila Morrone's age
Action: Search
Action Input: "Camila Morrone age"
Observation: 25 years
Thought: I need to calculate 25 raised to the 0.43 power
Action: Calculator
Action Input: 25^0.43
Observation: Answer: 3.991298452658078

Thought: I now know the final answer
Final Answer: Camila Morrone is Leo DiCaprio's girlfriend and her current age raised to the 0.43 power is 3.991298452658078.

> Finished chain.
```
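Each step of the trace follows the ReAct format: a `Thought`, an `Action` naming a tool, an `Action Input` for that tool, and the tool's `Observation`. The sketch below is minimal and assumes the log is plain text without terminal color codes. The hypothetical helper `parse_actions` extracts the (tool, input) pairs with a regular expression, which can help when inspecting agent steps outside the Aim UI.

```python
import re

# A trimmed plain-text copy of the agent trace.
trace = '''Action: Search
Action Input: "Leo DiCaprio girlfriend"
Observation: Leonardo DiCaprio seemed to prove a long-held theory ...
Thought: I need to find out Camila Morrone's age
Action: Search
Action Input: "Camila Morrone age"
Observation: 25 years
Thought: I need to calculate 25 raised to the 0.43 power
Action: Calculator
Action Input: 25^0.43
'''

# An "Action:" line immediately followed by its "Action Input:" line.
ACTION_RE = re.compile(r"^Action: (.+)\nAction Input: (.+)$", re.MULTILINE)


def parse_actions(text):
    """Hypothetical helper: return (tool, input) pairs with surrounding quotes removed."""
    return [(tool.strip(), arg.strip().strip('"')) for tool, arg in ACTION_RE.findall(text)]


for tool, arg in parse_actions(trace):
    print(f"{tool}: {arg}")
# Search: Leo DiCaprio girlfriend
# Search: Camila Morrone age
# Calculator: 25^0.43
```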