! [ -e /content ] && pip install -Uqq fastai # 在Colab上升级fastai优化器

from __future__ import annotations

from fastai.torch_basics import *from nbdev.showdoc import *定义通用的fastai优化器及其变体

_BaseOptimizer -

class _BaseOptimizer():

"Common functionality between `Optimizer` and `OptimWrapper`"

def all_params(self,

n:slice|int=slice(None), # 对优化器 `param_lists` 进行扩展切片

with_grad:bool=False # 获取所有参数元组。如果为 `True`,则仅选择那些具有梯度的参数。

):

res = L((p,pg,self.state[p],hyper) for pg,hyper in zip(self.param_lists[n],self.hypers[n]) for p in pg)

return L(o for o in res if hasattr(o[0], 'grad') and o[0].grad is not None) if with_grad else res

def _set_require_grad(self,

rg:bool, # Requires grad: if `True` sets gradient for parameters, else uses state `state["force_train"]`

p:Tensor, # 设置梯度的参数

pg, # 参数组(未使用但因解包 *o 而需要)

state: dict,

h # 超参数(未使用但因解包 *o 而需要)

):

p.requires_grad_(rg or state.get('force_train', False))

def freeze_to(self,

n:int # 冻结最多 `n` 层

):

self.frozen_idx = n if n >= 0 else len(self.param_lists) + n

if self.frozen_idx >= len(self.param_lists):

warn(f"Freezing {self.frozen_idx} groups; model has {len(self.param_lists)}; whole model is frozen.")

for o in self.all_params(slice(n, None)): self._set_require_grad(True, *o)

for o in self.all_params(slice(None, n)): self._set_require_grad(False, *o)

def freeze(self):

assert(len(self.param_lists)>1)

self.freeze_to(-1)

def set_hypers(self, **kwargs): L(kwargs.items()).starmap(self.set_hyper)

def _set_hyper(self,

k, # 超参数键

v # 超参数值

):

for v_,h in zip(v, self.hypers): h[k] = v_

def set_hyper(self,

k, # 超参数键 or slice of keys

v # 超参数值或值的切片

):

if isinstance(v, slice):

if v.start: v = even_mults(v.start, v.stop, len(self.param_lists))

else: v = [v.stop/10]*(len(self.param_lists)-1) + [v.stop]

v = L(v, use_list=None)

if len(v)==1: v = v*len(self.param_lists)

assert len(v) == len(self.hypers), f"Trying to set {len(v)} values for {k} but there are {len(self.param_lists)} parameter groups."

self._set_hyper(k, v)

def unfreeze(self): self.freeze_to(0)

@property

def param_groups(self): return [{**{'params': pg}, **hp} for pg,hp in zip(self.param_lists, self.hypers)]

@param_groups.setter

def param_groups(self,

v:dict # 将字典列表设置为 `params` 和其他超参数

):

for pg,v_ in zip(self.param_lists,v): pg = v_['params']

for hyper,v_ in zip(self.hypers,v):

for k,t in v_.items():

if k != 'params': hyper[k] = tadd_docs(_BaseOptimizer,

all_params="List of param_groups, parameters, and hypers",

freeze_to="Freeze parameter groups up to `n`",

freeze="Freeze up to last parameter group",

unfreeze="Unfreeze the entire model",

set_hypers="`set_hyper` for all `kwargs`",

set_hyper="Set the value(s) in `v` for hyper-parameter `k`")def _update(

state:dict,

new=None # 更新`state`字典的新值

):

if new is None: return state

if isinstance(new, dict): state.update(new)

return state优化器 -

class Optimizer(_BaseOptimizer):

"Base optimizer class for the fastai library, updating `params` with `cbs`"

_keep_on_clear = ['force_train', 'do_wd']

def __init__(self,

params:Tensor|Iterable, # 模型参数

cbs:callable|MutableSequence, # `优化器`步骤回调

**defaults # 超参数默认值

):

if 'train_bn' in defaults.keys():

_ = defaults.pop('train_bn')

warn('Setting `train_bn` in `Optimizer` has no effect. Set `train_bn` on `Learner` init instead')

params = L(params)

self.cbs,self.state = L(cbs),defaultdict(dict)

defaults = merge(*self.cbs.attrgot('defaults'), defaults)

self.param_lists = L(L(p) for p in params) if isinstance(params[0], (L,list)) else L([params])

self.hypers = L({} for _ in range_of(self.param_lists))

self.set_hypers(**defaults)

self.frozen_idx = 0

def zero_grad(self):

for p,*_ in self.all_params(with_grad=True):

p.grad.detach_()

p.grad.zero_()

def step(self, closure=None):

if closure is not None: raise NotImplementedError("fastai optimizers currently do not support closure")

for p,pg,state,hyper in self.all_params(with_grad=True):

for cb in self.cbs: state = _update(state, cb(p, **{**state, **hyper}))

self.state[p] = state

def clear_state(self):

for p,pg,state,hyper in self.all_params():

self.state[p] = {k: state[k] for k in self._keep_on_clear if k in state}

def state_dict(self):

state = [self.state[p] for p,*_ in self.all_params()]

return {'state': state, 'hypers': self.hypers}

def load_state_dict(self,

sd:dict # 将 `hypers` 和 `state` 状态字典加载到优化器中

):

assert len(sd["hypers"]) == len(self.param_lists)

assert len(sd["state"]) == sum([len(pg) for pg in self.param_lists])

self.hypers = sd['hypers']

self.state = {p: s for p,s in zip(self.all_params().itemgot(0), sd['state'])}add_docs(Optimizer,

zero_grad="Standard PyTorch API: Zero all the grad attributes of the parameters",

step="Standard PyTorch API: Update the stats and execute the steppers in on all parameters that have a grad",

state_dict="Return the state of the optimizer in a dictionary",

load_state_dict="Load the content of `sd`",

clear_state="Reset the state of the optimizer")初始化优化器

params 将用于创建优化器的 param_groups。如果它是参数的集合(或生成器),它将是一个 L,其中包含一个包含所有参数的 L。要定义多个参数组,params 应作为 L 的集合(或生成器)传递。

在 PyTorch 中,model.parameters() 返回一个包含所有参数的生成器,您可以直接将其传递给 Optimizer。

opt = Optimizer([1,2,3], noop)

test_eq(opt.param_lists, [[1,2,3]])

opt = Optimizer(range(3), noop)

test_eq(opt.param_lists, [[0,1,2]])

opt = Optimizer([[1,2],[3]], noop)

test_eq(opt.param_lists, [[1,2],[3]])

opt = Optimizer(([o,o+1] for o in range(0,4,2)), noop)

test_eq(opt.param_lists, [[0,1],[2,3]])cbs 是一个函数列表,它们将在应用步骤时进行组合。例如,您可以将执行 SGD 步骤的函数与应用权重衰减的函数组合。此外,每个 cb 可以具有一个 defaults 属性,该属性包含超参数及其默认值。所有这些在初始化时被收集,并且可以通过 defaults 关键字参数传递新的值以覆盖这些默认值。步骤将通过 Optimizer.step 调用(这是 PyTorch 的标准名称),并且可以通过 Optimizer.zero_grad 清除梯度(这也是标准的 PyTorch 名称)。

一旦所有默认值被提取出来,它们会根据 param_groups 的数量被复制,并存储在 hypers 中。要对不同组应用不同的超参数(例如差异学习率,或某些层没有权重衰减),您需要在初始化后调整这些值。

def tst_arg(p, lr=0, **kwargs): return p

tst_arg.defaults = dict(lr=1e-2)

def tst_arg2(p, lr2=0, **kwargs): return p

tst_arg2.defaults = dict(lr2=1e-3)

def tst_arg3(p, mom=0, **kwargs): return p

tst_arg3.defaults = dict(mom=0.9)

def tst_arg4(p, **kwargs): return p

opt = Optimizer([1,2,3], [tst_arg,tst_arg2, tst_arg3])

test_eq(opt.hypers, [{'lr2': 1e-3, 'mom': 0.9, 'lr': 1e-2}])

opt = Optimizer([1,2,3], tst_arg, lr=0.1)

test_eq(opt.hypers, [{'lr': 0.1}])

opt = Optimizer([[1,2],[3]], tst_arg)

test_eq(opt.hypers, [{'lr': 1e-2}, {'lr': 1e-2}])

opt = Optimizer([[1,2],[3]], tst_arg, lr=0.1)

test_eq(opt.hypers, [{'lr': 0.1}, {'lr': 0.1}])对于每个超参数,您可以传递一个切片或一个集合来进行设置,如果有多个参数组。切片将被转换为从开始到结束的对数均匀集合,或者如果它仅有一个结束值 e,则转换为与参数组数量相同的多个值的集合,值为 ...,e/10,e/10,e。

使用与优化器参数组数量不同的元素数量的集合来设置超参数将引发错误。

opt = Optimizer([[1,2],[3]], tst_arg, lr=[0.1,0.2])

test_eq(opt.hypers, [{'lr': 0.1}, {'lr': 0.2}])

opt = Optimizer([[1,2],[3],[4]], tst_arg, lr=slice(1e-2))

test_eq(opt.hypers, [{'lr': 1e-3}, {'lr': 1e-3}, {'lr': 1e-2}])

opt = Optimizer([[1,2],[3],[4]], tst_arg, lr=slice(1e-4,1e-2))

test_eq(opt.hypers, [{'lr': 1e-4}, {'lr': 1e-3}, {'lr': 1e-2}])

test_eq(opt.param_groups, [{'params': [1,2], 'lr': 1e-4}, {'params': [3], 'lr': 1e-3}, {'params': [4], 'lr': 1e-2}])

test_fail(lambda: Optimizer([[1,2],[3],[4]], tst_arg, lr=np.array([0.1,0.2])))基础步进器

为了能够给出优化器步骤的示例,我们需要一些步进器,像下面这样:

def sgd_step(p, lr, **kwargs):

p.data.add_(p.grad.data, alpha=-lr)def tst_param(val, grad=None):

"Create a tensor with `val` and a gradient of `grad` for testing"

res = tensor([val]).float()

res.grad = tensor([val/10 if grad is None else grad]).float()

return resp = tst_param(1., 0.1)

sgd_step(p, 1.)

test_eq(p, tensor([0.9]))

test_eq(p.grad, tensor([0.1]))def weight_decay(p, lr, wd, do_wd=True, **kwargs):

"Weight decay as decaying `p` with `lr*wd`"

if do_wd and wd!=0: p.data.mul_(1 - lr*wd)

weight_decay.defaults = dict(wd=0.)p = tst_param(1., 0.1)

weight_decay(p, 1., 0.1)

test_eq(p, tensor([0.9]))

test_eq(p.grad, tensor([0.1]))def l2_reg(p, lr, wd, do_wd=True, **kwargs):

"L2 regularization as adding `wd*p` to `p.grad`"

if do_wd and wd!=0: p.grad.data.add_(p.data, alpha=wd)

l2_reg.defaults = dict(wd=0.)p = tst_param(1., 0.1)

l2_reg(p, 1., 0.1)

test_eq(p, tensor([1.]))

test_eq(p.grad, tensor([0.2]))权重衰减和L2正则化在基本的SGD中是同一种东西,但对于更复杂的优化器,它们是非常不同的。

迈出一步

show_doc(Optimizer.step)

Optimizer.step[source]

Optimizer.step(closure=None)

Standard PyTorch API: Update the stats and execute the steppers in on all parameters that have a grad

该方法将遍历所有参数组,然后遍历所有 grad 不为 None 的参数,并调用 stepper 中的每个函数,将参数 p 和相应字典 hypers 中的超参数传递给它。

#测试基本步骤

r = L.range(4)

def tst_params(): return r.map(tst_param)

params = tst_params()

opt = Optimizer(params, sgd_step, lr=0.1)

opt.step()

test_close([p.item() for p in params], r.map(mul(0.99)))#测试两步

params = tst_params()

opt = Optimizer(params, [weight_decay, sgd_step], lr=0.1, wd=0.1)

opt.step()

test_close([p.item() for p in params], r.map(mul(0.98)))#测试 无梯度被忽略

params = tst_params()

opt = Optimizer(params, sgd_step, lr=0.1)

params[-1].grad = None

opt.step()

test_close([p.item() for p in params], [0., 0.99, 1.98, 3.])#测试判别学习率

params = tst_params()

opt = Optimizer([params[:2], params[2:]], sgd_step, lr=0.1)

opt.hypers[0]['lr'] = 0.01

opt.step()

test_close([p.item() for p in params], [0., 0.999, 1.98, 2.97])show_doc(Optimizer.zero_grad)

Optimizer.zero_grad[source]

Optimizer.zero_grad()

Standard PyTorch API: Zero all the grad attributes of the parameters

params = tst_params()

opt = Optimizer(params, [weight_decay, sgd_step], lr=0.1, wd=0.1)

opt.zero_grad()

[test_eq(p.grad, tensor([0.])) for p in params];一些 Optimizer 的 cbs 可以是更新与参数相关的状态的函数。该状态可以被任何步进器使用。最好的例子是动量计算。

def tst_stat(p, **kwargs):

s = kwargs.get('sum', torch.zeros_like(p)) + p.data

return {'sum': s}

tst_stat.defaults = {'mom': 0.9}

#测试优化器初始化

opt = Optimizer([1,2,3], tst_stat)

test_eq(opt.hypers, [{'mom': 0.9}])

opt = Optimizer([1,2,3], tst_stat, mom=0.99)

test_eq(opt.hypers, [{'mom': 0.99}])

#检验统计量

x = torch.randn(4,5)

state = tst_stat(x)

assert 'sum' in state

test_eq(x, state['sum'])

state = tst_stat(x, **state)

test_eq(state['sum'], 2*x)统计学

def average_grad(p, mom, dampening=False, grad_avg=None, **kwargs):

"Keeps track of the avg grads of `p` in `state` with `mom`."

if grad_avg is None: grad_avg = torch.zeros_like(p.grad.data)

damp = 1-mom if dampening else 1.

grad_avg.mul_(mom).add_(p.grad.data, alpha=damp)

return {'grad_avg': grad_avg}

average_grad.defaults = dict(mom=0.9)dampening=False 给出了SGD中动量的经典公式:

new_val = old_val * mom + grad而 dampening=True 则使其成为指数移动平均:

new_val = old_val * mom + grad * (1-mom)p = tst_param([1,2,3], [4,5,6])

state = {}

state = average_grad(p, mom=0.9, **state)

test_eq(state['grad_avg'], p.grad)

state = average_grad(p, mom=0.9, **state)

test_eq(state['grad_avg'], p.grad * 1.9)

#测试阻尼

state = {}

state = average_grad(p, mom=0.9, dampening=True, **state)

test_eq(state['grad_avg'], 0.1*p.grad)

state = average_grad(p, mom=0.9, dampening=True, **state)

test_close(state['grad_avg'], (0.1*0.9+0.1)*p.grad)def average_sqr_grad(p, sqr_mom, dampening=True, sqr_avg=None, **kwargs):

if sqr_avg is None: sqr_avg = torch.zeros_like(p.grad.data)

damp = 1-sqr_mom if dampening else 1.

sqr_avg.mul_(sqr_mom).addcmul_(p.grad.data, p.grad.data, value=damp)

return {'sqr_avg': sqr_avg}

average_sqr_grad.defaults = dict(sqr_mom=0.99)dampening=False 给出了 SGD 中动量的经典公式:

new_val = old_val * mom + grad**2而 dampening=True 将其变为指数移动平均:

new_val = old_val * mom + (grad**2) * (1-mom)p = tst_param([1,2,3], [4,5,6])

state = {}

state = average_sqr_grad(p, sqr_mom=0.99, dampening=False, **state)

test_eq(state['sqr_avg'], p.grad.pow(2))

state = average_sqr_grad(p, sqr_mom=0.99, dampening=False, **state)

test_eq(state['sqr_avg'], p.grad.pow(2) * 1.99)

#测试阻尼

state = {}

state = average_sqr_grad(p, sqr_mom=0.99, **state)

test_close(state['sqr_avg'], 0.01*p.grad.pow(2))

state = average_sqr_grad(p, sqr_mom=0.99, **state)

test_close(state['sqr_avg'], (0.01*0.99+0.01)*p.grad.pow(2))冻结模型的一部分

show_doc(Optimizer.freeze, name="Optimizer.freeze")show_doc(Optimizer.freeze_to, name="Optimizer.freeze_to")

Optimizer.freeze_to[source]

Optimizer.freeze_to(n:int)

Freeze parameter groups up to n

| Type | Default | Details | |

|---|---|---|---|

n |

int |

Freeze up to n layers |

show_doc(Optimizer.unfreeze, name="Optimizer.unfreeze")#冻结第一层

params = [tst_params(), tst_params(), tst_params()]

opt = Optimizer(params, sgd_step, lr=0.1)

opt.freeze_to(1)

req_grad = Self.requires_grad()

test_eq(L(params[0]).map(req_grad), [False]*4)

for i in {1,2}: test_eq(L(params[i]).map(req_grad), [True]*4)

#解冻

opt.unfreeze()

for i in range(2): test_eq(L(params[i]).map(req_grad), [True]*4)

#待办:测试警告

# opt.freeze_to(3)参数如批量归一化的权重/偏置可以被标记为始终处于训练模式,只需在它们的状态中设置 force_train=true。

params = [tst_params(), tst_params(), tst_params()]

opt = Optimizer(params, sgd_step, lr=0.1)

for p in L(params[1])[[1,3]]: opt.state[p] = {'force_train': True}

opt.freeze()

test_eq(L(params[0]).map(req_grad), [False]*4)

test_eq(L(params[1]).map(req_grad), [False, True, False, True])

test_eq(L(params[2]).map(req_grad), [True]*4)序列化

show_doc(Optimizer.state_dict)

Optimizer.state_dict[source]

Optimizer.state_dict()

Return the state of the optimizer in a dictionary

show_doc(Optimizer.load_state_dict)

Optimizer.load_state_dict[source]

Optimizer.load_state_dict(sd:dict)

Load the content of sd

| Type | Default | Details | |

|---|---|---|---|

sd |

dict |

State dict with hypers and state to load on the optimizer |

p = tst_param([1,2,3], [4,5,6])

opt = Optimizer(p, average_grad)

opt.step()

test_eq(opt.state[p]['grad_avg'], tensor([[4., 5., 6.]]))

sd = opt.state_dict()

p1 = tst_param([10,20,30], [40,50,60])

opt = Optimizer(p1, average_grad, mom=0.99)

test_eq(opt.hypers[0]['mom'], 0.99)

test_eq(opt.state, {})

opt.load_state_dict(sd)

test_eq(opt.hypers[0]['mom'], 0.9)

test_eq(opt.state[p1]['grad_avg'], tensor([[4., 5., 6.]]))show_doc(Optimizer.clear_state)p = tst_param([1,2,3], [4,5,6])

opt = Optimizer(p, average_grad)

opt.state[p] = {'force_train': True}

opt.step()

test_eq(opt.state[p]['grad_avg'], tensor([[4., 5., 6.]]))

opt.clear_state()

test_eq(opt.state[p], {'force_train': True})优化器

带动量的随机梯度下降(SGD)

def momentum_step(p, lr, grad_avg, **kwargs):

"Step for SGD with momentum with `lr`"

p.data.add_(grad_avg, alpha=-lr)def SGD(

params:Tensor|Iterable, # 模型参数

lr:float|slice, # 默认学习率

mom:float=0., # 梯度移动平均(β1)系数

wd:Real=0., # 可选的权重衰减(true 或 L2)

decouple_wd:bool=True # 应用真正的权重衰减或L2正则化(SGD)

) -> Optimizer:

"A SGD `Optimizer`"

cbs = [weight_decay] if decouple_wd else [l2_reg]

if mom != 0: cbs.append(average_grad)

cbs.append(sgd_step if mom==0 else momentum_step)

return Optimizer(params, cbs, lr=lr, mom=mom, wd=wd)可选的权重衰减 wd 被应用,如果 decouple_wd=True 则采用真正的权重衰减(直接衰减权重),否则作为L2正则化(将衰减添加到梯度中)。

#普通随机梯度下降

params = tst_params()

opt = SGD(params, lr=0.1)

opt.step()

test_close([p.item() for p in params], [i*0.99 for i in range(4)])

opt.step()

test_close([p.item() for p in params], [i*0.98 for i in range(4)])#带有动量的随机梯度下降

params = tst_params()

opt = SGD(params, lr=0.1, mom=0.9)

assert isinstance(opt, Optimizer)

opt.step()

test_close([p.item() for p in params], [i*0.99 for i in range(4)])

opt.step()

test_close([p.item() for p in params], [i*(1 - 0.1 * (0.1 + 0.1*1.9)) for i in range(4)])

for i,p in enumerate(params): test_close(opt.state[p]['grad_avg'].item(), i*0.19)测试权重衰减,注意我们可以看到,即使对于简单的带动量的SGD,L2正则化也与权重衰减不同。

params = tst_params()

#权重衰减

opt = SGD(params, lr=0.1, mom=0.9, wd=0.1)

opt.step()

test_close([p.item() for p in params], [i*0.98 for i in range(4)])

#L2正则化

opt = SGD(params, lr=0.1, mom=0.9, wd=0.1, decouple_wd=False)

opt.step()

#待办:修正此公式,因其有误

#测试关闭([参数中各项的值],[从0开始,每次增加0.97的四个值])RMSProp

def rms_prop_step(p, lr, sqr_avg, eps, grad_avg=None, **kwargs):

"Step for RMSProp with momentum with `lr`"

denom = sqr_avg.sqrt().add_(eps)

p.data.addcdiv_((grad_avg if grad_avg is not None else p.grad), denom, value=-lr)

rms_prop_step.defaults = dict(eps=1e-8)def RMSProp(

params:Tensor|Iterable, # 模型参数

lr:float|slice, # 默认学习率

mom:float=0., # 梯度移动平均(β1)系数

sqr_mom:float=0.99, # 梯度平方移动平均系数(β2)

eps:float=1e-8, # 为了数值稳定性而添加

wd:Real=0., # 可选的权重衰减(true 或 L2)

decouple_wd:bool=True # 应用真正的权重衰减或L2正则化(RMSProp)

) -> Optimizer:

"A RMSProp `Optimizer`"

cbs = [weight_decay] if decouple_wd else [l2_reg]

cbs += ([average_sqr_grad] if mom==0. else [average_grad, average_sqr_grad])

cbs.append(rms_prop_step)

return Optimizer(params, cbs, lr=lr, mom=mom, sqr_mom=sqr_mom, wd=wd)RMSProp是由Geoffrey Hinton在他的课程中提出的。这里命名为sqr_mom的就是课程中的alpha。如果decouple_wd=True,那么可选的权重衰减wd将作为真正的权重衰减(直接衰减权重)应用,否则将作为L2正则化(将衰减添加到梯度中)。

#没有动力

params = tst_param([1,2,3], [0.1,0.2,0.3])

opt = RMSProp(params, lr=0.1)

opt.step()

test_close(params[0], tensor([0.,1.,2.]))

opt.step()

step = - 0.1 * 0.1 / (math.sqrt((0.01*0.99+0.01) * 0.1**2) + 1e-8)

test_close(params[0], tensor([step, 1+step, 2+step]))#带着势头

params = tst_param([1,2,3], [0.1,0.2,0.3])

opt = RMSProp(params, lr=0.1, mom=0.9)

opt.step()

test_close(params[0], tensor([0.,1.,2.]))

opt.step()

step = - 0.1 * (0.1 + 0.9*0.1) / (math.sqrt((0.01*0.99+0.01) * 0.1**2) + 1e-8)

test_close(params[0], tensor([step, 1+step, 2+step]))亚当

def step_stat(p, step=0, **kwargs):

"Register the number of steps done in `state` for `p`"

step += 1

return {'step' : step}p = tst_param(1,0.1)

state = {}

state = step_stat(p, **state)

test_eq(state['step'], 1)

for _ in range(5): state = step_stat(p, **state)

test_eq(state['step'], 6)def debias(mom, damp, step): return damp * (1 - mom**step) / (1-mom)def adam_step(p, lr, mom, step, sqr_mom, grad_avg, sqr_avg, eps, **kwargs):

"Step for Adam with `lr` on `p`"

debias1 = debias(mom, 1-mom, step)

debias2 = debias(sqr_mom, 1-sqr_mom, step)

p.data.addcdiv_(grad_avg, (sqr_avg/debias2).sqrt() + eps, value = -lr / debias1)

return p

adam_step._defaults = dict(eps=1e-5)def Adam(

params:Tensor|Iterable, # 模型参数

lr:float|slice, # 默认学习率

mom:float=0.9, # 梯度移动平均(β1)系数

sqr_mom:float=0.99, # 梯度平方移动平均系数(β2)

eps:float=1e-5, # 为提高数值稳定性而添加

wd:Real=0.01, # 可选的权重衰减(true 或 L2)

decouple_wd:bool=True # 应用真正的权重衰减(AdamW)或L2正则化(Adam)

) -> Optimizer:

"A Adam/AdamW `Optimizer`"

cbs = [weight_decay] if decouple_wd else [l2_reg]

cbs += [partial(average_grad, dampening=True), average_sqr_grad, step_stat, adam_step]

return Optimizer(params, cbs, lr=lr, mom=mom, sqr_mom=sqr_mom, eps=eps, wd=wd)Adam是由Diederik P. Kingma和Jimmy Ba在Adam: A Method for Stochastic Optimization中提出的。为了确保各优化器之间的一致性,我们在论文中将beta1和beta2重命名为mom和sqr_mom。请注意,我们的默认设置也与论文不同(sqr_mom或beta2为0.99,eps为1e-5)。这些值在我们广泛的实验中似乎表现更好。

可选的权重衰减wd被应用,如果decouple_wd=True,则作为真正的权重衰减(直接衰减权重),否则作为L2正则化(将衰减添加到梯度中)。

不要忘记,eps是一个您可以更改的超参数。一些模型在没有非常高的eps(如0.1)的情况下无法训练(直观地说,eps越高,我们越接近常规SGD)。通常默认的1e-8在某种意义上过于极端,我们的结果不如使用SGD时好。

params = tst_param([1,2,3], [0.1,0.2,0.3])

opt = Adam(params, lr=0.1, wd=0)

opt.step()

step = -0.1 * 0.1 / (math.sqrt(0.1**2) + 1e-8)

test_close(params[0], tensor([1+step, 2+step, 3+step]))

opt.step()

test_close(params[0], tensor([1+2*step, 2+2*step, 3+2*step]), eps=1e-3)RAdam

RAdam(修正的Adam)由Zhang等人在自适应学习率的方差及其更进一步中提出,旨在对Adam优化器进行略微修改,以使其在训练初期更加稳定(因此不需要长时间的预热)。他们使用平方梯度移动平均的方差估计(传统Adam中分母的项),并在执行更新之前通过该项重新缩放此移动平均值。

此版本还结合了SAdam;设置beta以启用此功能(定义与论文中的相同)。

def radam_step(p, lr, mom, step, sqr_mom, grad_avg, sqr_avg, eps, beta, **kwargs):

"Step for RAdam with `lr` on `p`"

debias1 = debias(mom, 1-mom, step)

debias2 = debias(sqr_mom, 1-sqr_mom, step)

r_inf = 2/(1-sqr_mom) - 1

r = r_inf - 2*step*sqr_mom**step/(1-sqr_mom**step)

if r > 5:

v = math.sqrt(((r-4) * (r-2) * r_inf)/((r_inf-4)*(r_inf-2)*r))

denom = (sqr_avg/debias2).sqrt()

if eps: denom += eps

if beta: denom = F.softplus(denom, beta)

p.data.addcdiv_(grad_avg, denom, value = -lr*v / debias1)

else: p.data.add_(grad_avg, alpha=-lr / debias1)

return p

radam_step._defaults = dict(eps=1e-5)def RAdam(

params:Tensor|Iterable, # 模型参数

lr:float|slice, # 默认学习率

mom:float=0.9, # 梯度移动平均(β1)系数

sqr_mom:float=0.99, # 梯度平方移动平均系数(β2)

eps:float=1e-5, # 为了提高数值稳定性而添加

wd:Real=0., # 可选的权重衰减(true 或 L2)

beta:float=0., # 设置为启用SAdam

decouple_wd:bool=True # 应用真正的权重衰减(RAdamW)或L2正则化(RAdam)

) -> Optimizer:

"A RAdam/RAdamW `Optimizer`"

cbs = [weight_decay] if decouple_wd else [l2_reg]

cbs += [partial(average_grad, dampening=True), average_sqr_grad, step_stat, radam_step]

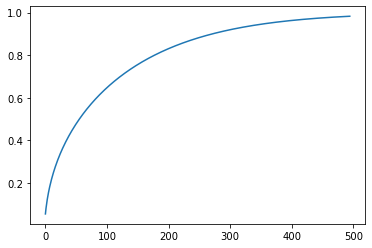

return Optimizer(params, cbs, lr=lr, mom=mom, sqr_mom=sqr_mom, eps=eps, wd=wd, beta=beta)这是在RAdam中报告的针对500次迭代的adam步骤的有效修正。我们可以看到它从0到1的变化,模拟了预热的效果。

beta = 0.99

r_inf = 2/(1-beta) - 1

rs = np.array([r_inf - 2*s*beta**s/(1-beta**s) for s in range(5,500)])

v = np.sqrt(((rs-4) * (rs-2) * r_inf)/((r_inf-4)*(r_inf-2)*rs))

plt.plot(v);

params = tst_param([1,2,3], [0.1,0.2,0.3])

opt = RAdam(params, lr=0.1)

#在前5步中,r因子低于5,因此更新使用梯度的平均值(所有梯度相同)

r_inf = 2/(1-0.99) - 1

for i in range(5):

r = r_inf - 2*(i+1)*0.99**(i+1)/(1-0.99**(i+1))

assert r <= 5

opt.step()

p = tensor([0.95, 1.9, 2.85])

test_close(params[0], p)

#第六步的r因子大于5,因此我们使用RAdam进行更新

r = r_inf - 2*6*0.99**6/(1-0.99**6)

assert r > 5

opt.step()

v = math.sqrt(((r-4) * (r-2) * r_inf)/((r_inf-4)*(r_inf-2)*r))

step = -0.1*0.1*v/(math.sqrt(0.1**2) + 1e-8)

test_close(params[0], p+step)QHAdam

QHAdam(准双曲阿达姆优化器)由Ma和Yarats在准双曲动量和深度学习中的阿达姆中提出,作为一种“计算成本低,易于理解,易于实现”的优化器。更多代码可以在他们的qhoptim库中找到。QHAdam基于QH动量,后者引入了立即折扣因子nu,涵盖了普通SGD(nu = 0)和动量(nu = 1)。QH动量定义如下,其中g_t+1是动量的更新。QHM的一个解释是动量更新步和普通SGD更新步的nu加权平均。

θ_t+1 ← θ_t − lr * [(1 − nu) · ∇L_t(θ_t) + nu · g_t+1]

QHAdam将上述QHM的概念应用于Adam,使用准双曲项替换了Adam的两个动量估计器。

论文建议的默认参数为mom = 0.999,sqr_mom = 0.999,nu_1 = 0.7和nu_2 = 1.0。当训练不稳定时,可以通过将nu_2 < 1来改善稳定性,从而施加更严格的步长限制。请注意,当nu_1 = nu_2 = 1.0时,QHAdam恢复为Adam。当nu_1 = 0且nu_2 = 1时,QHAdam恢复为RMSProp(Hinton等,2012);当nu_1 = mom且nu_2 = 1时,恢复为NAdam(Dozat,2016)。

可选的权重衰减wd被应用,如果decouple_wd=True,则作为真实的权重衰减(直接衰减权重),否则作为L2正则化(将衰减添加到梯度中)。

def qhadam_step(p, lr, mom, sqr_mom, sqr_avg, nu_1, nu_2, step, grad_avg, eps, **kwargs):

debias1 = debias(mom, 1-mom, step)

debias2 = debias(sqr_mom, 1-sqr_mom, step)

p.data.addcdiv_(((1-nu_1) * p.grad.data) + (nu_1 * (grad_avg / debias1)),

(((1 - nu_2) * (p.grad.data)**2) + (nu_2 * (sqr_avg / debias2))).sqrt() + eps,

value = -lr)

return p

qhadam_step._defaults = dict(eps=1e-8)def QHAdam(

params:Tensor|Iterable, # 模型参数

lr:float|slice, # 默认学习率

mom:float=0.999, # 梯度移动平均(β1)系数

sqr_mom:float=0.999, # 梯度平方移动平均系数(β2)

nu_1:float=0.7, # QH即时贴现因子

nu_2:float=1.0, # QH动量折现因子

eps:float=1e-8, # 为了提高数值稳定性而添加

wd:Real=0., # 可选的权重衰减(true 或 L2)

decouple_wd:bool=True, # 应用真正的权重衰减(QHAdamW)或L2正则化(QHAdam)

) -> Optimizer:

"A QHAdam/QHAdamW `Optimizer`"

cbs = [weight_decay] if decouple_wd else [l2_reg]

cbs += [partial(average_grad, dampening=True), partial(average_sqr_grad, dampening=True), step_stat, qhadam_step]

return Optimizer(params, cbs, lr=lr, nu_1=nu_1, nu_2=nu_2 ,

mom=mom, sqr_mom=sqr_mom, eps=eps, wd=wd)params = tst_param([1,2,3], [0.1,0.2,0.3])

opt = QHAdam(params, lr=0.1)

opt.step()

step = -0.1 * (((1-0.7) * 0.1) + (0.7 * 0.1)) / (

math.sqrt(((1-1.0) * 0.1**2) + (1.0 * 0.1**2)) + 1e-8)

test_close(params[0], tensor([1+step, 2+step, 3+step]))

opt.step()

test_close(params[0], tensor([1+2*step, 2+2*step, 3+2*step]), eps=1e-3)LARS/LARC

def larc_layer_lr(p, lr, trust_coeff, wd, eps, clip=True, **kwargs):

"Computes the local lr before weight decay is applied"

p_norm,g_norm = torch.norm(p.data),torch.norm(p.grad.data)

local_lr = lr*trust_coeff * (p_norm) / (g_norm + p_norm * wd + eps)

return {'local_lr': min(lr, local_lr) if clip else local_lr}

larc_layer_lr.defaults = dict(trust_coeff=0.02, wd=0., eps=1e-8)def larc_step(p, local_lr, grad_avg=None, **kwargs):

"Step for LARC `local_lr` on `p`"

p.data.add_(p.grad.data if grad_avg is None else grad_avg, alpha = -local_lr)def Larc(

params:Tensor|Iterable, # 模型参数

lr:float|slice, # 默认学习率

mom:float=0.9, # 梯度移动平均(β1)系数

clip:bool=True, # 如果clip=True,则使用LARC;如果clip=False,则使用LARS。

trust_coeff:float=0.02, # 用于计算逐层学习率的信任系数

eps:float=1e-8, # 为了提高数值稳定性而添加

wd:Real=0., # 可选的权重衰减(true 或 L2)

decouple_wd:bool=True # 应用真正的权重衰减或L2正则化

) -> Optimizer:

"A LARC/LARS `Optimizer`"

cbs = [weight_decay] if decouple_wd else [l2_reg]

if mom!=0.: cbs.append(average_grad)

cbs += [partial(larc_layer_lr, clip=clip), larc_step]

return Optimizer(params, cbs, lr=lr, mom=mom, trust_coeff=trust_coeff, eps=eps, wd=wd)LARS优化器首次在大批量训练卷积网络中提出,然后在其LARC变体中进行了改进(原始LARS是clip=False)。为每个单独的层计算一个学习率,并使用特定的trust_coefficient,然后将其裁剪为始终小于lr。

可选的权重衰减wd被应用,如果decouple_wd=True则以真实的权重衰减(直接衰减权重),否则以L2正则化(将衰减添加到梯度中)。

params = [tst_param([1,2,3], [0.1,0.2,0.3]), tst_param([1,2,3], [0.01,0.02,0.03])]

opt = Larc(params, lr=0.1)

opt.step()

#First param local lr is 0.02 < lr so it's not clipped

test_close(opt.state[params[0]]['local_lr'], 0.02)

#Second param local lr is 0.2 > lr so it's clipped

test_eq(opt.state[params[1]]['local_lr'], 0.1)

test_close(params[0], tensor([0.998,1.996,2.994]))

test_close(params[1], tensor([0.999,1.998,2.997]))params = [tst_param([1,2,3], [0.1,0.2,0.3]), tst_param([1,2,3], [0.01,0.02,0.03])]

opt = Larc(params, lr=0.1, clip=False)

opt.step()

#无剪辑

test_close(opt.state[params[0]]['local_lr'], 0.02)

test_close(opt.state[params[1]]['local_lr'], 0.2)

test_close(params[0], tensor([0.998,1.996,2.994]))

test_close(params[1], tensor([0.998,1.996,2.994]))LAMB

def lamb_step(p, lr, mom, step, sqr_mom, grad_avg, sqr_avg, eps, **kwargs):

"Step for LAMB with `lr` on `p`"

debias1 = debias(mom, 1-mom, step)

debias2 = debias(sqr_mom, 1-sqr_mom, step)

r1 = p.data.pow(2).mean().sqrt()

step = (grad_avg/debias1) / ((sqr_avg/debias2).sqrt()+eps)

r2 = step.pow(2).mean().sqrt()

q = 1 if r1 == 0 or r2 == 0 else min(r1/r2,10)

p.data.add_(step, alpha = -lr * q)

lamb_step._defaults = dict(eps=1e-6, wd=0.)def Lamb(

params:Tensor|Iterable, # 模型参数

lr:float|slice, # 默认学习率

mom:float=0.9, # 梯度移动平均(β1)系数

sqr_mom:float=0.99, # 梯度平方移动平均系数(β2)

eps:float=1e-5, # 为了提高数值稳定性而添加

wd:Real=0., # 可选的权重衰减(true 或 L2)

decouple_wd:bool=True # 应用真正的权重衰减或L2正则化

) -> Optimizer:

"A LAMB `Optimizer`"

cbs = [weight_decay] if decouple_wd else [l2_reg]

cbs += [partial(average_grad, dampening=True), average_sqr_grad, step_stat, lamb_step]

return Optimizer(params, cbs, lr=lr, mom=mom, sqr_mom=sqr_mom, eps=eps, wd=wd)LAMB在大批量优化深度学习:76分钟训练BERT中被引入。直观上,它是LARC应用于Adam。与Adam一样,我们在论文中将beta1和beta2重命名为mom和sqr_mom。请注意,我们的默认值也与论文中的不同(sqr_mom或beta2为0.99,eps为1e-5)。这些值在我们广泛的实验中似乎表现更好。

可选的权重衰减wd被应用,如果decouple_wd=True,则作为真实的权重衰减(直接衰减权重),否则作为L2正则化(将衰减添加到梯度中)。

params = tst_param([1,2,3], [0.1,0.2,0.3])

opt = Lamb(params, lr=0.1)

opt.step()

test_close(params[0], tensor([0.7840,1.7840,2.7840]), eps=1e-3)前瞻 -

Lookahead 是由 Zhang 等人在 Lookahead Optimizer: k steps forward, 1 step back 中提出的。它可以在任何优化器之上运行,其工作原理是使模型的最终权重成为移动平均。在实际操作中,我们使用内部优化器更新模型,但保持一份旧权重的副本,每经过 k 步,就通过快速权重(由内部优化器更新的权重)和慢速权重(旧权重的副本)的移动平均来改变权重。这些慢速权重像是一个稳定机制。

class Lookahead(Optimizer, GetAttr):

"Wrap `opt` in a lookahead optimizer"

_default='opt'

def __init__(self,

opt:Optimizer, # 用`Lookahead`包装的`优化器`

k:int=6, # 进行前瞻步骤的频率

alpha:float=0.5, # 慢速权重移动平均系数

):

store_attr('opt,k,alpha')

self._init_state()

def step(self, closure=None):

if closure is not None: raise NotImplementedError("fastai optimizers currently do not support closure")

if self.slow_weights is None: self._copy_weights()

self.opt.step()

self.count += 1

if self.count%self.k != 0: return

for slow_pg,fast_pg in zip(self.slow_weights,self.param_lists):

for slow_p,fast_p in zip(slow_pg,fast_pg):

slow_p.data.add_(fast_p.data-slow_p.data, alpha=self.alpha)

fast_p.data.copy_(slow_p.data)

def clear_state(self):

self.opt.clear_state()

self._init_state()

def state_dict(self):

state = self.opt.state_dict()

state.update({'count': self.count, 'slow_weights': self.slow_weights})

return state

def load_state_dict(self, sd):

self.count = sd.pop('count')

self.slow_weights = sd.pop('slow_weights')

self.opt.load_state_dict(sd)

def _init_state(self): self.count,self.slow_weights = 0,None

def _copy_weights(self): self.slow_weights = L(L(p.clone().detach() for p in pg) for pg in self.param_lists)

@property

def param_lists(self): return self.opt.param_lists

@param_lists.setter

def param_lists(self, v): self.opt.param_lists = vparams = tst_param([1,2,3], [0.1,0.2,0.3])

p,g = params[0].data.clone(),tensor([0.1,0.2,0.3])

opt = Lookahead(SGD(params, lr=0.1))

for k in range(5): opt.step()

#前5步是正常的SGD步骤

test_close(params[0], p - 0.5*g)

#由于k=6,第六步是初始权重下6次SGD步骤的移动平均值

opt.step()

test_close(params[0], p * 0.5 + (p-0.6*g) * 0.5)@delegates(RAdam)

def ranger(

params:Tensor|Iterable, # 模型参数

lr:float|slice, # 默认学习率

mom:float=0.95, # 梯度移动平均(β1)系数

wd:Real=0.01, # 可选的权重衰减(true 或 L2)

eps:float=1e-6, # 为提高数值稳定性而添加

k:int=6, # 多久进行一次前瞻步骤

alpha:float=0.5, # 慢速权重移动平均系数

**kwargs

) -> Lookahead:

"Convenience method for `Lookahead` with `RAdam`"

return Lookahead(RAdam(params, lr=lr, mom=mom, wd=wd, eps=eps, **kwargs), k=k, alpha=alpha)优化器包装器 -

OptimWrapper 提供了简单的功能来使用已构建的现有优化器,这些优化器是使用 torch.optim.Optimizer 构造的。

def detuplify_pg(d):

res = {}

for k,v in d.items():

if k == 'params': continue

if is_listy(v): res.update(**{f'{k}__{i}': v_ for i,v_ in enumerate(v)})

else: res[k] = v

return restst = {'lr': 1e-2, 'mom': 0.9, 'params':[0,1,2]}

test_eq(detuplify_pg(tst), {'lr': 1e-2, 'mom': 0.9})

tst = {'lr': 1e-2, 'betas': (0.9,0.999), 'params':[0,1,2]}

test_eq(detuplify_pg(tst), {'lr': 1e-2, 'betas__0': 0.9, 'betas__1': 0.999})def set_item_pg(pg, k, v):

if '__' not in k: pg[k] = v

else:

name,idx = k.split('__')

pg[name] = tuple(v if i==int(idx) else pg[name][i] for i in range_of(pg[name]))

return pgtst = {'lr': 1e-2, 'mom': 0.9, 'params':[0,1,2]}

test_eq(set_item_pg(tst, 'lr', 1e-3), {'lr': 1e-3, 'mom': 0.9, 'params':[0,1,2]})

tst = {'lr': 1e-2, 'betas': (0.9,0.999), 'params':[0,1,2]}

test_eq(set_item_pg(tst, 'betas__0', 0.95), {'lr': 1e-2, 'betas': (0.95,0.999), 'params':[0,1,2]})pytorch_hp_map = {'momentum': 'mom', 'weight_decay': 'wd', 'alpha': 'sqr_mom', 'betas__0': 'mom',

'betas__1': 'sqr_mom'}def _convert_params(o:list) -> list:

splitter = []

for group in o:

if isinstance(group, dict): splitter.append(group)

else: splitter.append({'params':group})

return splitterclass OptimWrapper(_BaseOptimizer, GetAttr):

"A wrapper class for existing PyTorch optimizers"

_xtra=['zero_grad', 'step', 'state_dict', 'load_state_dict']

_default='opt'

def __init__(self,

params:Tensor|Iterable=None, # Model parameters. Don't set if using a built optimizer

opt:callable|torch.optim.Optimizer=None, # A torch optimizer constructor, or an already built optimizer

hp_map:dict=None, # A dictionary converting PyTorch optimizer keys to fastai's `Optimizer` keys. Defaults to `pytorch_hp_map`

convert_groups:bool=True, # 将参数组从拆分器转换或不加修改地传递给 `opt`

**kwargs

):

if params is None and opt is None: raise ValueError("Both `params` and `opt` cannot be None.")

if callable(opt):

if convert_groups:

params = L(params)

convert_groups = isinstance(params[0], (L,list))

self.opt = opt(_convert_params(params), **kwargs) if convert_groups else opt(params, **kwargs)

else:

if params is not None: raise ValueError("Tried using both `params` and a built optimizer. Just pass in `opt`.")

self.opt = opt

if hp_map is None: hp_map = pytorch_hp_map

self.fwd_map = {k: hp_map[k] if k in hp_map else k for k in detuplify_pg(self.opt.param_groups[0]).keys()}

self.bwd_map = {v:k for k,v in self.fwd_map.items()}

self.state = defaultdict(dict, {})

self.frozen_idx = 0

@property

def hypers(self):

return [{self.fwd_map.get(k, k):v for k,v in detuplify_pg(pg).items() if k != 'params'} for pg in self.opt.param_groups]

def _set_hyper(self, k, v):

for pg,v_ in zip(self.opt.param_groups,v): pg = set_item_pg(pg, self.bwd_map[k], v_)

def clear_state(self): self.opt.state = defaultdict(dict, {})

@property

def param_lists(self): return [pg['params'] for pg in self.opt.param_groups]

@param_lists.setter

def param_lists(self, v):

for pg,v_ in zip(self.opt.param_groups,v): pg['params'] = v_要使用现有的 PyTorch 优化器,可以像这样定义一个优化器函数:

opt_func = partial(OptimWrapper, opt=torch.optim.SGD)或者如果你已经构建了优化器,只需传入 opt:

opt = torch.optim.SGD([tensor([1,2,3])], lr=1e-2)

opt_func = OptimWrapper(opt=opt)当将构建好的优化器传递给Learner时,Learner.fit将清除优化器状态(如果reset_opt=True)或在第一次调用Learner.fit时。

为了防止Learner在首次调用Learner.fit时清除优化器状态,可以直接将优化器赋值给Learner.opt:

opt = torch.optim.SGD([tensor([1,2,3])], lr=1e-2)

learn = Learner(..., opt_func=None)

learn.opt = OptimWrapper(opt=opt)def test_dict_in(a,b):

"Test that dictionary a is in b"

if is_listy(a) or is_listy(b):

for i,j in zip(a,b):

test_dict_in(i,j)

else:

for k,v in zip(a.keys(),a.values()):

test_eq(b[k], v)sgd = SGD([tensor([1,2,3])], lr=1e-3, mom=0.9, wd=1e-2)

tst_sgd = OptimWrapper([tensor([1,2,3])], torch.optim.SGD, lr=1e-3, momentum=0.9, weight_decay=1e-2)

#访问param_groups

test_eq(tst_sgd.param_lists, sgd.param_lists)

#设置参数组

tst_sgd.param_lists = [[tensor([4,5,6])]]

test_eq(tst_sgd.opt.param_groups[0]['params'], [tensor(4,5,6)])

#超链接访问

test_dict_in(sgd.hypers, tst_sgd.hypers)

#设置超参数

tst_sgd.set_hyper('mom', 0.95)

test_eq(tst_sgd.opt.param_groups[0]['momentum'], 0.95)tst_sgd = OptimWrapper([{'params': [tensor([1,2,3])], 'lr': 1e-3},

{'params': [tensor([4,5,6])], 'lr': 1e-2}], torch.optim.SGD, momentum=0.9, weight_decay=1e-2)

sgd = SGD([[tensor([1,2,3])], [tensor([4,5,6])]], lr=[1e-3, 1e-2], mom=0.9, wd=1e-2)

#访问param_groups

test_eq(tst_sgd.param_lists, sgd.param_lists)

#设置参数组

tst_sgd.param_lists = [[tensor([4,5,6])], [tensor([1,2,3])]]

test_eq(tst_sgd.opt.param_groups[0]['params'], [tensor(4,5,6)])

test_eq(tst_sgd.opt.param_groups[1]['params'], [tensor(1,2,3)])

#超链接访问

test_dict_in(sgd.hypers, tst_sgd.hypers)

#设置超参数

tst_sgd.set_hyper('mom', 0.95)

test_eq([pg['momentum'] for pg in tst_sgd.opt.param_groups], [0.95,0.95])

tst_sgd.set_hyper('lr', [1e-4,1e-3])

test_eq([pg['lr'] for pg in tst_sgd.opt.param_groups], [1e-4,1e-3])# 确保我们能够使用一个已经制作好的优化器。

tst_sgd = torch.optim.SGD([{'params': [tensor([1,2,3])], 'lr': 1e-3},

{'params': [tensor([4,5,6])], 'lr': 1e-2}])

tst_sgd = OptimWrapper(opt = tst_sgd)

sgd = SGD([[tensor([1,2,3])], [tensor([4,5,6])]], lr=[1e-3, 1e-2])

#访问param_groups

test_eq(tst_sgd.param_lists, sgd.param_lists)

#设置参数组

tst_sgd.param_lists = [[tensor([4,5,6])], [tensor([1,2,3])]]

test_eq(tst_sgd.opt.param_groups[0]['params'], [tensor(4,5,6)])

test_eq(tst_sgd.opt.param_groups[1]['params'], [tensor(1,2,3)])

#超链接访问

test_dict_in(sgd.hypers, tst_sgd.hypers)

#设置超参数

tst_sgd.set_hyper('mom', 0.95)

test_eq([pg['momentum'] for pg in tst_sgd.opt.param_groups], [0.95,0.95])

tst_sgd.set_hyper('lr', [1e-4,1e-3])

test_eq([pg['lr'] for pg in tst_sgd.opt.param_groups], [1e-4,1e-3])#检查它是否适用于像亚当中那样的双姓氏

tst_adam = OptimWrapper([tensor([1,2,3])], torch.optim.Adam, lr=1e-2, betas=(0.9, 0.99))

tst_hypers = {'lr': 0.01, 'mom': 0.9, 'sqr_mom': 0.99, 'eps': 1e-08, 'wd': 0, 'amsgrad': False, 'maximize':False}

test_dict_in([tst_hypers], tst_adam.hypers)

tst_adam.set_hyper('mom', 0.95)

test_eq(tst_adam.opt.param_groups[0]['betas'], (0.95, 0.99))

tst_adam.set_hyper('sqr_mom', 0.9)

test_eq(tst_adam.opt.param_groups[0]['betas'], (0.95, 0.9))

tst_adam = torch.optim.Adam([tensor([1,2,3])], lr=1e-2, betas=(0.9, 0.99))

tst_adam = OptimWrapper(opt=tst_adam)

test_dict_in([tst_hypers], tst_adam.hypers)

tst_adam.set_hyper('mom', 0.95)

test_eq(tst_adam.opt.param_groups[0]['betas'], (0.95, 0.99))

tst_adam.set_hyper('sqr_mom', 0.9)

test_eq(tst_adam.opt.param_groups[0]['betas'], (0.95, 0.9))def _mock_train(m, x, y, opt):

m.train()

for i in range(0, 100, 25):

z = m(x[i:i+25])

loss = F.mse_loss(z, y[i:i+25])

loss.backward()

opt.step()

opt.zero_grad()m = nn.Linear(4,5)

x = torch.randn(100, 3, 4)

y = torch.randn(100, 3, 5)

try:

torch.save(m.state_dict(), 'tmp.pth')

wgt,bias = m.weight.data.clone(),m.bias.data.clone()

m.load_state_dict(torch.load('tmp.pth'))

opt1 = OptimWrapper(m.parameters(), torch.optim.AdamW, betas=(0.9, 0.99), eps=1e-5, weight_decay=1e-2)

_mock_train(m, x.clone(), y.clone(), opt1)

wgt1,bias1 = m.weight.data.clone(),m.bias.data.clone()

m.load_state_dict(torch.load('tmp.pth'))

opt2 = Adam(m.parameters(), 1e-3, wd=1e-2)

_mock_train(m, x.clone(), y.clone(), opt2)

wgt2,bias2 = m.weight.data.clone(),m.bias.data.clone()

test_close(wgt1,wgt2,eps=1e-3)

test_close(bias1,bias2,eps=1e-3)

finally: os.remove('tmp.pth')m = nn.Linear(4,5)

x = torch.randn(100, 3, 4)

y = torch.randn(100, 3, 5)

try:

torch.save(m.state_dict(), 'tmp.pth')

wgt,bias = m.weight.data.clone(),m.bias.data.clone()

m.load_state_dict(torch.load('tmp.pth'))

opt1 = torch.optim.AdamW(m.parameters(), betas=(0.9, 0.99), eps=1e-5, weight_decay=1e-2)

opt1 = OptimWrapper(opt=opt1)

_mock_train(m, x.clone(), y.clone(), opt1)

wgt1,bias1 = m.weight.data.clone(),m.bias.data.clone()

m.load_state_dict(torch.load('tmp.pth'))

opt2 = Adam(m.parameters(), 1e-3, wd=1e-2)

_mock_train(m, x.clone(), y.clone(), opt2)

wgt2,bias2 = m.weight.data.clone(),m.bias.data.clone()

test_close(wgt1,wgt2,eps=1e-3)

test_close(bias1,bias2,eps=1e-3)

finally: os.remove('tmp.pth')m = nn.Linear(4,5)

x = torch.randn(100, 3, 4)

y = torch.randn(100, 3, 5)

try:

torch.save(m.state_dict(), 'tmp.pth')

wgt,bias = m.weight.data.clone(),m.bias.data.clone()

m.load_state_dict(torch.load('tmp.pth'))

opt1 = OptimWrapper(m.parameters(), torch.optim.Adam, betas=(0.9, 0.99), eps=1e-5, weight_decay=1e-2)

_mock_train(m, x.clone(), y.clone(), opt1)

wgt1,bias1 = m.weight.data.clone(),m.bias.data.clone()

m.load_state_dict(torch.load('tmp.pth'))

opt2 = Adam(m.parameters(), 1e-3, wd=1e-2, decouple_wd=False)

_mock_train(m, x.clone(), y.clone(), opt2)

wgt2,bias2 = m.weight.data.clone(),m.bias.data.clone()

test_close(wgt1,wgt2,eps=1e-3)

test_close(bias1,bias2,eps=1e-3)

finally: os.remove('tmp.pth')m = nn.Linear(4,5)

x = torch.randn(100, 3, 4)

y = torch.randn(100, 3, 5)

try:

torch.save(m.state_dict(), 'tmp.pth')

wgt,bias = m.weight.data.clone(),m.bias.data.clone()

m.load_state_dict(torch.load('tmp.pth'))

opt1 = torch.optim.Adam(m.parameters(), betas=(0.9, 0.99), eps=1e-5, weight_decay=1e-2)

opt1 = OptimWrapper(opt=opt1)

_mock_train(m, x.clone(), y.clone(), opt1)

wgt1,bias1 = m.weight.data.clone(),m.bias.data.clone()

m.load_state_dict(torch.load('tmp.pth'))

opt2 = Adam(m.parameters(), 1e-3, wd=1e-2, decouple_wd=False)

_mock_train(m, x.clone(), y.clone(), opt2)

wgt2,bias2 = m.weight.data.clone(),m.bias.data.clone()

test_close(wgt1,wgt2,eps=1e-3)

test_close(bias1,bias2,eps=1e-3)

finally: os.remove('tmp.pth')导出 -

from nbdev import *

nbdev_export()Converted 00_torch_core.ipynb.

Converted 01_layers.ipynb.

Converted 01a_losses.ipynb.

Converted 02_data.load.ipynb.

Converted 03_data.core.ipynb.

Converted 04_data.external.ipynb.

Converted 05_data.transforms.ipynb.

Converted 06_data.block.ipynb.

Converted 07_vision.core.ipynb.

Converted 08_vision.data.ipynb.

Converted 09_vision.augment.ipynb.

Converted 09b_vision.utils.ipynb.

Converted 09c_vision.widgets.ipynb.

Converted 10_tutorial.pets.ipynb.

Converted 10b_tutorial.albumentations.ipynb.

Converted 11_vision.models.xresnet.ipynb.

Converted 12_optimizer.ipynb.

Converted 13_callback.core.ipynb.

Converted 13a_learner.ipynb.

Converted 13b_metrics.ipynb.

Converted 14_callback.schedule.ipynb.

Converted 14a_callback.data.ipynb.

Converted 15_callback.hook.ipynb.

Converted 15a_vision.models.unet.ipynb.

Converted 16_callback.progress.ipynb.

Converted 17_callback.tracker.ipynb.

Converted 18_callback.fp16.ipynb.

Converted 18a_callback.training.ipynb.

Converted 18b_callback.preds.ipynb.

Converted 19_callback.mixup.ipynb.

Converted 20_interpret.ipynb.

Converted 20a_distributed.ipynb.

Converted 20b_tutorial.distributed.ipynb.

Converted 21_vision.learner.ipynb.

Converted 22_tutorial.imagenette.ipynb.

Converted 23_tutorial.vision.ipynb.

Converted 24_tutorial.image_sequence.ipynb.

Converted 24_tutorial.siamese.ipynb.

Converted 24_vision.gan.ipynb.

Converted 30_text.core.ipynb.

Converted 31_text.data.ipynb.

Converted 32_text.models.awdlstm.ipynb.

Converted 33_text.models.core.ipynb.

Converted 34_callback.rnn.ipynb.

Converted 35_tutorial.wikitext.ipynb.

Converted 37_text.learner.ipynb.

Converted 38_tutorial.text.ipynb.

Converted 39_tutorial.transformers.ipynb.

Converted 40_tabular.core.ipynb.

Converted 41_tabular.data.ipynb.

Converted 42_tabular.model.ipynb.

Converted 43_tabular.learner.ipynb.

Converted 44_tutorial.tabular.ipynb.

Converted 45_collab.ipynb.

Converted 46_tutorial.collab.ipynb.

Converted 50_tutorial.datablock.ipynb.

Converted 60_medical.imaging.ipynb.

Converted 61_tutorial.medical_imaging.ipynb.

Converted 65_medical.text.ipynb.

Converted 70_callback.wandb.ipynb.

Converted 70a_callback.tensorboard.ipynb.

Converted 70b_callback.neptune.ipynb.

Converted 70c_callback.captum.ipynb.

Converted 70d_callback.comet.ipynb.

Converted 74_huggingface.ipynb.

Converted 97_test_utils.ipynb.

Converted 99_pytorch_doc.ipynb.

Converted dev-setup.ipynb.

Converted app_examples.ipynb.

Converted camvid.ipynb.

Converted distributed_app_examples.ipynb.

Converted migrating_catalyst.ipynb.

Converted migrating_ignite.ipynb.

Converted migrating_lightning.ipynb.

Converted migrating_pytorch.ipynb.

Converted migrating_pytorch_verbose.ipynb.

Converted ulmfit.ipynb.

Converted index.ipynb.

Converted quick_start.ipynb.

Converted tutorial.ipynb.