! [ -e /content ] && pip install -Uqq fastai # 在Colab上升级fastai数据块教程

from nbdev.showdoc import show_doc在所有应用程序中使用数据块

在本教程中,我们将看到如何在各种任务中使用数据块API,以及如何调试数据块。数据块API的名称来源于其设计方式:构建DataLoaders对象所需的每个部分(输入类型、目标、标签方式、拆分等)都被封装在一个块中,您可以组合和匹配这些块。

从头构建一个 DataBlock

本教程的其余部分将提供许多示例,但让我们首先在我们在视觉教程中看到的狗与猫问题上从头构建一个DataBlock。首先,我们导入视觉中所需的所有内容。

from fastai.data.all import *

from fastai.vision.all import *第一步是下载并解压我们的数据(如果还没有完成),并获取其位置:

path = untar_data(URLs.PETS)正如我们所见,所有的文件名都在“images”文件夹中。get_image_files函数有助于获取子文件夹中的所有图像:

fnames = get_image_files(path/"images")让我们从一个空的 DataBlock 开始。

dblock = DataBlock()单独来看,DataBlock 只是一个组装数据的蓝图。它在您传递给它一个源之前不执行任何操作。然后您可以选择通过使用 DataBlock.datasets 或 DataBlock.dataloaders 方法将该源转换为 Datasets 或 DataLoaders。由于我们还没有对数据进行任何准备以获取批次,因此 dataloaders 方法在这里会失败,但我们可以查看它是如何在 Datasets 中转换的。这是我们传递数据来源的地方,所有的文件名都在这里:

dsets = dblock.datasets(fnames)

dsets.train[0](Path('/home/jhoward/.fastai/data/oxford-iiit-pet/images/Birman_82.jpg'),

Path('/home/jhoward/.fastai/data/oxford-iiit-pet/images/Birman_82.jpg'))默认情况下,数据块 API 假设我们有一个输入和一个目标,这就是我们看到文件名重复两次的原因。

我们可以做的第一件事是使用 get_items 函数来实际组装我们在数据块中的项:

dblock = DataBlock(get_items = get_image_files)区别在于您传递给源的是包含图像的文件夹,而不是所有的文件名:

dsets = dblock.datasets(path/"images")

dsets.train[0](Path('/home/jhoward/.fastai/data/oxford-iiit-pet/images/english_cocker_spaniel_76.jpg'),

Path('/home/jhoward/.fastai/data/oxford-iiit-pet/images/english_cocker_spaniel_76.jpg'))我们的输入已经准备好以图像的形式进行处理(因为图像可以从文件名构建),但我们的目标尚未准备好。由于我们正在处理猫与狗的问题,我们需要将文件名转换为“猫”与“狗”(或True与False)。让我们为此构建一个函数:

def label_func(fname):

return "cat" if fname.name[0].isupper() else "dog"然后,我们可以告诉我们的数据块使用它来标注我们的目标,通过将其作为 get_y 传递:

dblock = DataBlock(get_items = get_image_files,

get_y = label_func)

dsets = dblock.datasets(path/"images")

dsets.train[0](Path('/home/jhoward/.fastai/data/oxford-iiit-pet/images/staffordshire_bull_terrier_77.jpg'),

'dog')现在我们的输入和目标已经准备好了,我们可以指定类型来告诉数据块 API 我们的输入是图像,目标是类别。类型通过数据块 API 中的块表示,这里我们使用 ImageBlock 和 CategoryBlock:

dblock = DataBlock(blocks = (ImageBlock, CategoryBlock),

get_items = get_image_files,

get_y = label_func)

dsets = dblock.datasets(path/"images")

dsets.train[0](PILImage mode=RGB size=361x500, TensorCategory(1))我们可以看到 DataBlock 是如何自动添加必要的变换以打开图像,或者它是如何将名称 “dog” 改为索引 1(使用特殊的张量类型 TensorCategory(1))。为此,它创建了一个从类别到索引的映射,称为 “vocab”,我们可以通过以下方式访问它:

dsets.vocab['cat', 'dog']请注意,您可以将任何输入和目标块混合搭配,这就是为什么该API被命名为数据块API。您还可以拥有超过两个块(如果您有多个输入和/或目标),您只需将n_inp传递给DataBlock以告知库有多少个输入(其余的将是目标),并将一个函数列表传递给get_x和/或get_y(以说明如何处理每个项目以适应该类型)。请参见下面的目标检测示例。

下一步是控制我们的验证集是如何创建的。我们通过将一个splitter传递给DataBlock来做到这一点。例如,下面是如何进行随机拆分的。

dblock = DataBlock(blocks = (ImageBlock, CategoryBlock),

get_items = get_image_files,

get_y = label_func,

splitter = RandomSplitter())

dsets = dblock.datasets(path/"images")

dsets.train[0](PILImage mode=RGB size=320x480, TensorCategory(0))最后一步是指定项转换和批量转换(与我们在ImageDataLoaders工厂方法中所做的相同):

dblock = DataBlock(blocks = (ImageBlock, CategoryBlock),

get_items = get_image_files,

get_y = label_func,

splitter = RandomSplitter(),

item_tfms = Resize(224))通过这个调整,我们现在能够将项目批量处理在一起,并最终可以调用 dataloaders 将我们的 DataBlock 转换为 DataLoaders 对象:

dls = dblock.dataloaders(path/"images")

dls.show_batch()

我们通常通过回答一系列问题来一次性构建数据块:

- 你的输入/目标是什么类型?这里是图像和类别

- 你的数据在哪里?这里在子文件夹中的文件名中

- 输入需要应用什么吗?这里不需要

- 目标需要应用什么吗?这里是

label_func函数 - 如何分割数据?这里是随机分割

- 我们需要对形成的项应用什么吗?这里是调整大小

- 我们需要对形成的批次应用什么吗?这里不需要

这给我们这个设计:

dblock = DataBlock(blocks = (ImageBlock, CategoryBlock),

get_items = get_image_files,

get_y = label_func,

splitter = RandomSplitter(),

item_tfms = Resize(224))对于两个答案为“否”的问题,如果答案不同,我们将传递的相应参数是 get_x 和 batch_tfms。

图像分类

让我们开始讨论图像分类问题的例子。图像分类问题分为两种类型:单标签问题(每个图像只有一个给定标签)和多标签问题(每个图像可以有多个标签或根本没有标签)。我们将在这里介绍这两种类型。

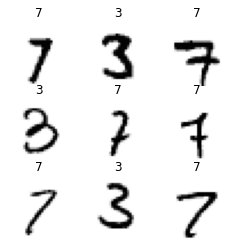

from fastai.vision.all import *MNIST(单标签)

MNIST 是一个包含从 0 到 9 的手写数字的数据集。我们可以通过回答以下问题非常容易地在数据块 API 中加载它:

- 我们的输入和目标是什么类型?黑白图像和标签。

- 数据在哪里?在子文件夹中。

- 我们如何知道一个样本是训练集还是验证集?通过查看祖父文件夹。

- 我们如何知道一张图像的标签?通过查看父文件夹。

在 API 中,这些答案可以转化为:

mnist = DataBlock(blocks=(ImageBlock(cls=PILImageBW), CategoryBlock),

get_items=get_image_files,

splitter=GrandparentSplitter(),

get_y=parent_label)我们的类型变成了块:一个用于图像(使用黑白 PILImageBW 类),一个用于类别。通过 get_image_files 函数在子文件夹中搜索所有图像文件名。使用 GrandparentSplitter 进行训练/验证集的拆分。获取我们的目标(通常称为 y)的函数是 parent_label。

要了解 fastai 库提供的用于读取、标记或拆分的对象,可以查看 data.transforms 模块。

数据块本身只是一个蓝图。它不执行任何操作,也不检查错误。您必须将数据的源提供给它以实际收集内容。这是通过 .dataloaders 方法完成的:

dls = mnist.dataloaders(untar_data(URLs.MNIST_TINY))

dls.show_batch(max_n=9, figsize=(4,4))

如果在上一步出现了问题,或者你只想了解幕后发生了什么,可以使用 summary 方法。它将详细逐步展示,你将看到流程在哪个点上失败。

mnist.summary(untar_data(URLs.MNIST_TINY))Setting-up type transforms pipelines

Collecting items from /home/jhoward/.fastai/data/mnist_tiny

Found 2856 items

2 datasets of sizes 1418,1398

Setting up Pipeline: PILBase.create

Setting up Pipeline: parent_label -> Categorize -- {'vocab': None, 'sort': True, 'add_na': False}

Building one sample

Pipeline: PILBase.create

starting from

/home/jhoward/.fastai/data/mnist_tiny/mnist_tiny/train/7/7420.png

applying PILBase.create gives

PILImageBW mode=L size=28x28

Pipeline: parent_label -> Categorize -- {'vocab': None, 'sort': True, 'add_na': False}

starting from

/home/jhoward/.fastai/data/mnist_tiny/mnist_tiny/train/7/7420.png

applying parent_label gives

7

applying Categorize -- {'vocab': None, 'sort': True, 'add_na': False} gives

TensorCategory(1)

Final sample: (PILImageBW mode=L size=28x28, TensorCategory(1))

Collecting items from /home/jhoward/.fastai/data/mnist_tiny

Found 2856 items

2 datasets of sizes 1418,1398

Setting up Pipeline: PILBase.create

Setting up Pipeline: parent_label -> Categorize -- {'vocab': None, 'sort': True, 'add_na': False}

Setting up after_item: Pipeline: ToTensor

Setting up before_batch: Pipeline:

Setting up after_batch: Pipeline: IntToFloatTensor -- {'div': 255.0, 'div_mask': 1}

Building one batch

Applying item_tfms to the first sample:

Pipeline: ToTensor

starting from

(PILImageBW mode=L size=28x28, TensorCategory(1))

applying ToTensor gives

(TensorImageBW of size 1x28x28, TensorCategory(1))

Adding the next 3 samples

No before_batch transform to apply

Collating items in a batch

Applying batch_tfms to the batch built

Pipeline: IntToFloatTensor -- {'div': 255.0, 'div_mask': 1}

starting from

(TensorImageBW of size 4x1x28x28, TensorCategory([1, 1, 1, 1], device='cuda:0'))

applying IntToFloatTensor -- {'div': 255.0, 'div_mask': 1} gives

(TensorImageBW of size 4x1x28x28, TensorCategory([1, 1, 1, 1], device='cuda:0'))让我们来看看另一个例子!

宠物(单标签)

牛津IIIT宠物数据集是一个包含狗和猫图片的数据集,共有37种不同的品种。与MNIST的一个小但非常重要的区别是,这里的图像并不都是相同的大小。在MNIST中,它们都是28x28像素,而这里的图像具有不同的宽高比或尺寸。因此,我们需要添加一些操作,使它们都变为相同的大小,以便可以将它们一起组合到一个批次中。我们还将学习如何添加数据增强。

让我们回顾一下之前的问题,并添加两个新问题:

- 我们的输入和目标是什么类型?图像和标签。

- 数据存放在哪里?在子文件夹中。

- 我们如何知道一个样本是在训练集还是验证集中?我们将进行随机划分。

- 我们如何知道图像的标签?通过查看父文件夹。

- 我们是否想对给定的样本应用一个函数?是的,我们需要将所有图像调整为给定的大小。

- 我们是否想在批次创建后对其应用一个函数?是的,我们想要数据增强。

pets = DataBlock(blocks=(ImageBlock, CategoryBlock),

get_items=get_image_files,

splitter=RandomSplitter(),

get_y=Pipeline([attrgetter("name"), RegexLabeller(pat = r'^(.*)_\d+.jpg$')]),

item_tfms=Resize(128),

batch_tfms=aug_transforms())与MNIST类似,我们可以看到这些问题的答案如何直接体现在API中。我们的类型变为块:一个用于图像,一个用于类别。搜索子文件夹中所有图像文件名的操作由get_image_files函数完成。使用RandomSplitter进行训练/验证的拆分。获取目标(通常称为y)的函数是两个变换的组合:我们获取Path文件名的名称属性,然后应用正则表达式以获取类别。为了将这两个变换组合成一个,我们使用Pipeline。

最后,我们在项目级别应用调整大小,并在批处理级别应用aug_transforms()。

dls = pets.dataloaders(untar_data(URLs.PETS)/"images")

dls.show_batch(max_n=9)

现在让我们看看如何使用相同的API来解决多标签问题。

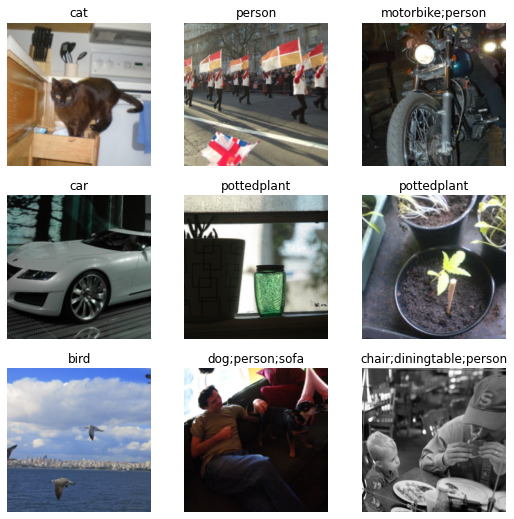

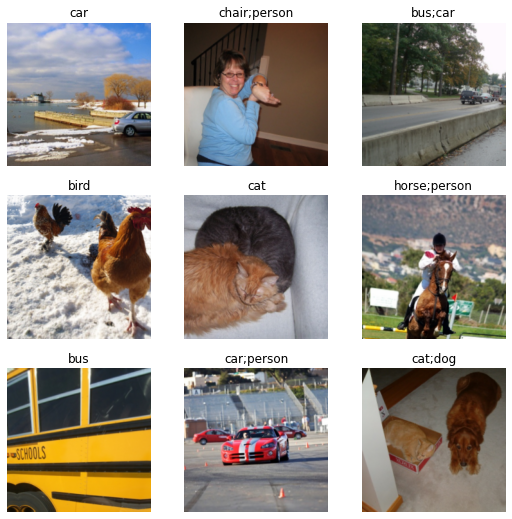

Pascal(多标签)

Pascal数据集最初是一个目标检测数据集(我们必须预测图像中某些物体的位置)。但它包含许多包含各种物体的图片,因此为多标签问题提供了很好的例子。让我们下载它并查看数据:

pascal_source = untar_data(URLs.PASCAL_2007)

df = pd.read_csv(pascal_source/"train.csv")df.head()| fname | labels | is_valid | |

|---|---|---|---|

| 0 | 000005.jpg | chair | True |

| 1 | 000007.jpg | car | True |

| 2 | 000009.jpg | horse person | True |

| 3 | 000012.jpg | car | False |

| 4 | 000016.jpg | bicycle | True |

看起来我们有一列是文件名,一列是标签(用空格分隔),还有一列告诉我们该文件名是否应该放入验证集。

将其放入 DataBlock 有多种方法,先让我们回顾一下,但首先,回答我们常见的问答:

- 我们的输入和目标是什么类型?图像和多个标签。

- 数据在哪里?在一个数据框中。

- 我们如何知道样本是属于训练集还是验证集?我们数据框的一列。

- 我们如何获取图像?通过查看 fname 列。

- 我们如何知道图像的标签?通过查看 labels 列。

- 我们想对给定样本应用一个函数吗?是的,我们需要将所有内容调整为给定的大小。

- 我们想对创建后的批次应用一个函数吗?是的,我们想进行数据增强。

请注意,与之前相比多了一个问题:我们不需要在这里使用 get_items 函数,因为我们已经将所有数据集中在一个地方。但我们需要对原始数据框做一些处理,以获取我们的输入,读取第一列,并在文件名前添加适当的文件夹。这就是我们作为 get_x 传递的内容。

pascal = DataBlock(blocks=(ImageBlock, MultiCategoryBlock),

splitter=ColSplitter(),

get_x=ColReader(0, pref=pascal_source/"train"),

get_y=ColReader(1, label_delim=' '),

item_tfms=Resize(224),

batch_tfms=aug_transforms())再次,我们可以看到问题的答案直接在API中转换。我们的类型变成了块:一个用于图像,一个用于多类别。分割是通过ColSplitter完成的(默认使用名为is_valid的列)。获取我们的输入(通常称为x)的函数是带有前缀的第一列的ColReader,获取我们的目标(通常称为y)的函数是第二列的ColReader,使用空格作为分隔符。我们在项目级别应用调整大小,并在批处理级别应用aug_transforms()。

dls = pascal.dataloaders(df)

dls.show_batch()

另一种方法是直接使用 get_x 和 get_y 函数:

pascal = DataBlock(blocks=(ImageBlock, MultiCategoryBlock),

splitter=ColSplitter(),

get_x=lambda x:pascal_source/"train"/f'{x[0]}',

get_y=lambda x:x[1].split(' '),

item_tfms=Resize(224),

batch_tfms=aug_transforms())

dls = pascal.dataloaders(df)

dls.show_batch()

或者,我们可以使用列的名称作为属性(因为数据框的行是pandas系列)。

pascal = DataBlock(blocks=(ImageBlock, MultiCategoryBlock),

splitter=ColSplitter(),

get_x=lambda o:f'{pascal_source}/train/'+o.fname,

get_y=lambda o:o.labels.split(),

item_tfms=Resize(224),

batch_tfms=aug_transforms())

dls = pascal.dataloaders(df)

dls.show_batch()

避免遍历数据框的行(这可能需要很长时间)最有效的方法是使用 from_columns 方法。它将使用 get_items 将列转换为 numpy 数组。缺点是,由于在提取相关列后我们失去了数据框,无法再使用 ColSplitter。在这里,我们在手动从数据框中提取验证集的索引后使用了 IndexSplitter:

def _pascal_items(x): return (

f'{pascal_source}/train/'+x.fname, x.labels.str.split())

valid_idx = df[df['is_valid']].index.values

pascal = DataBlock.from_columns(blocks=(ImageBlock, MultiCategoryBlock),

get_items=_pascal_items,

splitter=IndexSplitter(valid_idx),

item_tfms=Resize(224),

batch_tfms=aug_transforms())dls = pascal.dataloaders(df)

dls.show_batch()

图像定位

图像定位类别中有各种问题:图像分割(这是一个任务,在该任务中您必须预测图像中每个像素的类别)、坐标预测(预测图像上的一个或多个关键点)和物体检测(在物体周围绘制一个框以进行检测)。

让我们看看每种情况的示例,以及如何在每种情况下使用数据块 API。

分割

我们将使用 CamVid 数据集 的一个小子集作为我们的示例。

path = untar_data(URLs.CAMVID_TINY)让我们回顾一下我们通常的问卷:

- 我们的输入和目标类型是什么?图像和分割掩码。

- 数据在哪里?在子文件夹中。

- 我们如何知道一个样本是在训练集还是验证集中?我们将进行随机拆分。

- 我们如何知道图像的标签?通过查看“labels”文件夹中的对应文件。

- 我们想在创建批处理后对其应用函数吗?是的,我们想进行数据增强。

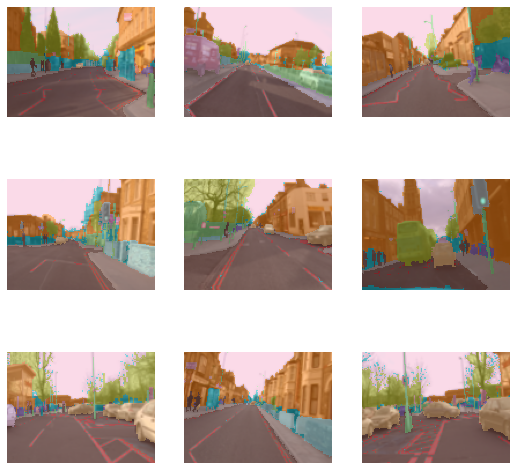

camvid = DataBlock(blocks=(ImageBlock, MaskBlock(codes = np.loadtxt(path/'codes.txt', dtype=str))),

get_items=get_image_files,

splitter=RandomSplitter(),

get_y=lambda o: path/'labels'/f'{o.stem}_P{o.suffix}',

batch_tfms=aug_transforms())MaskBlock 是由 codes 生成的,codes 映射了掩膜的像素值与它们对应的对象(如汽车、道路、行人等)之间的关系。其余部分现在应该看起来很熟悉了。

dls = camvid.dataloaders(path/"images")

dls.show_batch()/home/jhoward/mambaforge/lib/python3.9/site-packages/torch/_tensor.py:1142: UserWarning: __floordiv__ is deprecated, and its behavior will change in a future version of pytorch. It currently rounds toward 0 (like the 'trunc' function NOT 'floor'). This results in incorrect rounding for negative values. To keep the current behavior, use torch.div(a, b, rounding_mode='trunc'), or for actual floor division, use torch.div(a, b, rounding_mode='floor').

ret = func(*args, **kwargs)

要点

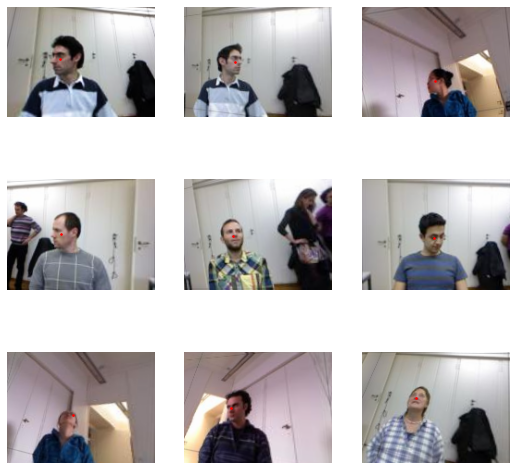

对于这个例子,我们将使用 BiWi Kinect 头部姿态数据集 的一个小样本。它包含了人们的照片,任务是预测他们头部的中心位置。我们已将这个小数据集保存为字典文件,命名为 center:

biwi_source = untar_data(URLs.BIWI_SAMPLE)

fn2ctr = load_pickle(biwi_source/'centers.pkl')然后我们可以讨论我们常见的问题:

- 我们的输入和目标类型是什么?图像和点。

- 数据在哪里?在子文件夹中。

- 我们如何知道样本是在训练集还是验证集中?我们将进行随机划分。

- 我们如何知道图像的标签?通过使用

fn2ctr字典。 - 我们需要在创建批量后应用一个函数吗?是的,我们希望进行数据增强。

biwi = DataBlock(blocks=(ImageBlock, PointBlock),

get_items=get_image_files,

splitter=RandomSplitter(),

get_y=lambda o:fn2ctr[o.name].flip(0),

batch_tfms=aug_transforms())我们可以用它来创建一个 DataLoaders:

dls = biwi.dataloaders(biwi_source)

dls.show_batch(max_n=9)

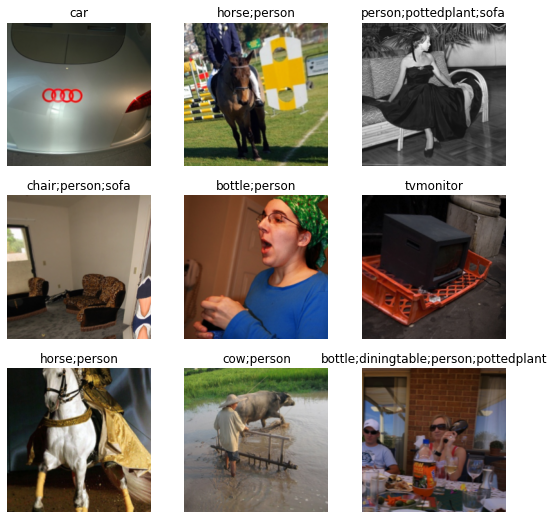

边界框

对于这个任务,我们将使用COCO数据集的一个小子集。它包含日常物品的图片,目标是通过绘制矩形来预测物品的位置。

fastai库提供了一个名为get_annotations的函数,该函数将解析train.json的内容,并给我们一个字典,包含文件名到(边界框,标签)的映射。

coco_source = untar_data(URLs.COCO_TINY)

images, lbl_bbox = get_annotations(coco_source/'train.json')

img2bbox = dict(zip(images, lbl_bbox))那么我们可以讨论我们通常的问题:

- 我们的输入和目标的类型是什么?图像和边界框。

- 数据在哪里?在子文件夹中。

- 我们如何知道一个样本是在训练集还是验证集中?我们将采取随机划分。

- 我们如何知道图像的标签是什么?通过使用

img2bbox字典。 - 我们想对给定样本应用一个函数吗?是的,我们需要将所有内容调整为给定大小。

- 我们想在创建批次后对批次应用函数吗?是的,我们希望进行数据增强。

coco = DataBlock(blocks=(ImageBlock, BBoxBlock, BBoxLblBlock),

get_items=get_image_files,

splitter=RandomSplitter(),

get_y=[lambda o: img2bbox[o.name][0], lambda o: img2bbox[o.name][1]],

item_tfms=Resize(128),

batch_tfms=aug_transforms(),

n_inp=1)注意,我们提供三种类型,因为我们有两个目标:边界框和标签。这就是为什么我们在最后传递 n_inp=1,以告知库输入在哪里结束,目标从哪里开始。

这也是为什么我们传递一个列表给 get_y:由于我们有两个目标,我们必须告诉库如何为每个目标进行标记(如果你不想为其之一做任何事情,可以使用 noop)。

dls = coco.dataloaders(coco_source)

dls.show_batch(max_n=9)文本

我们将展示两个示例:语言模型和文本分类。请注意,使用数据块 API,您可以将之前的多标签示例调整为输入为文本的问题。

from fastai.text.all import *语言模型

我们将使用一个由IMDb电影评论组成的数据集。和往常一样,我们可以使用一行代码通过untar_data下载它。

path = untar_data(URLs.IMDB_SAMPLE)

df = pd.read_csv(path/'texts.csv')

df.head()| label | text | is_valid | |

|---|---|---|---|

| 0 | negative | Un-bleeping-believable! Meg Ryan doesn't even look her usual pert lovable self in this, which normally makes me forgive her shallow ticky acting schtick. Hard to believe she was the producer on this dog. Plus Kevin Kline: what kind of suicide trip has his career been on? Whoosh... Banzai!!! Finally this was directed by the guy who did Big Chill? Must be a replay of Jonestown - hollywood style. Wooofff! | False |

| 1 | positive | This is a extremely well-made film. The acting, script and camera-work are all first-rate. The music is good, too, though it is mostly early in the film, when things are still relatively cheery. There are no really superstars in the cast, though several faces will be familiar. The entire cast does an excellent job with the script.<br /><br />But it is hard to watch, because there is no good end to a situation like the one presented. It is now fashionable to blame the British for setting Hindus and Muslims against each other, and then cruelly separating them into two countries. There is som... | False |

| 2 | negative | Every once in a long while a movie will come along that will be so awful that I feel compelled to warn people. If I labor all my days and I can save but one soul from watching this movie, how great will be my joy.<br /><br />Where to begin my discussion of pain. For starters, there was a musical montage every five minutes. There was no character development. Every character was a stereotype. We had swearing guy, fat guy who eats donuts, goofy foreign guy, etc. The script felt as if it were being written as the movie was being shot. The production value was so incredibly low that it felt li... | False |

| 3 | positive | Name just says it all. I watched this movie with my dad when it came out and having served in Korea he had great admiration for the man. The disappointing thing about this film is that it only concentrate on a short period of the man's life - interestingly enough the man's entire life would have made such an epic bio-pic that it is staggering to imagine the cost for production.<br /><br />Some posters elude to the flawed characteristics about the man, which are cheap shots. The theme of the movie "Duty, Honor, Country" are not just mere words blathered from the lips of a high-brassed offic... | False |

| 4 | negative | This movie succeeds at being one of the most unique movies you've seen. However this comes from the fact that you can't make heads or tails of this mess. It almost seems as a series of challenges set up to determine whether or not you are willing to walk out of the movie and give up the money you just paid. If you don't want to feel slighted you'll sit through this horrible film and develop a real sense of pity for the actors involved, they've all seen better days, but then you realize they actually got paid quite a bit of money to do this and you'll lose pity for them just like you've alr... | False |

我们可以看到它由标记为正面或负面的(相当长的!)评论组成。让我们来回顾一下我们通常的问题:

- 我们的输入和目标类型是什么?文本,我们实际上没有目标,因为目标是从输入中衍生出来的。

- 数据在哪里?在一个数据框中。

- 我们如何知道一个样本是在训练集中还是验证集中?我们有一个

is_valid列。 - 我们如何获取我们的输入?在

text列中。

imdb_lm = DataBlock(blocks=TextBlock.from_df('text', is_lm=True),

get_x=ColReader('text'),

splitter=ColSplitter())由于这里没有目标,我们只需要指定一个块。TextBlock 与其他 TransformBlock 有一点不同:为了能够在设置过程中高效地对所有文本进行分词,您需要使用类方法 from_folder 或 from_df。

注意:TestBlock 的分词过程将分词输入放入名为 text 的列中。get_x 的 ColReader 将始终引用 text,即使原始文本输入在数据框中有另一个名称的列。

然后,我们可以通过将数据框传递给 dataloaders 方法来将数据放入 DataLoaders 中:

dls = imdb_lm.dataloaders(df, bs=64, seq_len=72)

dls.show_batch(max_n=6)| text | text_ | |

|---|---|---|

| 0 | xxbos xxmaj not sure if it was right or wrong , but i read thru the other comments before watching the xxunk have to say i disagree with most of the negative comments or problems people have had with it . \n\n xxmaj as a first time " lone xxmaj wolf " director / producer , i like to see things that i can xxunk to , not necessarily from the pro | xxmaj not sure if it was right or wrong , but i read thru the other comments before watching the xxunk have to say i disagree with most of the negative comments or problems people have had with it . \n\n xxmaj as a first time " lone xxmaj wolf " director / producer , i like to see things that i can xxunk to , not necessarily from the pro 's |

| 1 | and each and every actor . xxmaj it 's like they all think they 're the main part of the movie and scream " notice xxup me ! " over and over again . xxmaj the bad guy has his bad - guy music going on and says sinister bad - guy - like things , just in case you did n't quite catch on . xxmaj the good guy does brave | each and every actor . xxmaj it 's like they all think they 're the main part of the movie and scream " notice xxup me ! " over and over again . xxmaj the bad guy has his bad - guy music going on and says sinister bad - guy - like things , just in case you did n't quite catch on . xxmaj the good guy does brave and |

| 2 | innocently helps the xxmaj confederate hide . xxmaj later , when he returns to kill her father , the little girl 's xxunk is remembered . a sweet , small story from director xxup xxunk . xxmaj griffith . xxmaj location footage and humanity are xxunk displayed . \n\n▁ xxrep 4 * xxmaj in the xxmaj border xxmaj states ( 6 / 13 / 10 ) xxup xxunk . xxmaj griffith ~ | helps the xxmaj confederate hide . xxmaj later , when he returns to kill her father , the little girl 's xxunk is remembered . a sweet , small story from director xxup xxunk . xxmaj griffith . xxmaj location footage and humanity are xxunk displayed . \n\n▁ xxrep 4 * xxmaj in the xxmaj border xxmaj states ( 6 / 13 / 10 ) xxup xxunk . xxmaj griffith ~ xxmaj |

| 3 | when they real winner should of been xxmaj xxunk xxmaj fiennes for " sunshine " . xxmaj if you have n't seen this movie yet , watch it and you 'll agree . " eyes xxmaj wide xxmaj shut " when released xxunk no nominations . xxmaj and as far as this year goes , well , the bad choices were all over the place ! xxmaj xxunk xxmaj xxunk gets no | they real winner should of been xxmaj xxunk xxmaj fiennes for " sunshine " . xxmaj if you have n't seen this movie yet , watch it and you 'll agree . " eyes xxmaj wide xxmaj shut " when released xxunk no nominations . xxmaj and as far as this year goes , well , the bad choices were all over the place ! xxmaj xxunk xxmaj xxunk gets no " |

| 4 | xxmaj in this case , however , when combined with the moody atmosphere , and the fact that the small town of xxmaj red xxmaj rock seems almost empty of normal daily life , the coincidences and unlikely timing suggest a story that , beyond " xxunk " , is … surreal . xxmaj it 's almost as if fate deliberately xxunk with improbable events so as to force xxmaj michael to | in this case , however , when combined with the moody atmosphere , and the fact that the small town of xxmaj red xxmaj rock seems almost empty of normal daily life , the coincidences and unlikely timing suggest a story that , beyond " xxunk " , is … surreal . xxmaj it 's almost as if fate deliberately xxunk with improbable events so as to force xxmaj michael to come |

| 5 | is not over the top and enough twists and turns to keep you interested until the end . \n\n xxmaj well directed , well acted and a good story . xxbos xxmaj not the worst movie xxmaj i 've seen but definitely not very good either . i myself am a paintball player , used to play airball a lot and going from woods to airball is quite a large change . | not over the top and enough twists and turns to keep you interested until the end . \n\n xxmaj well directed , well acted and a good story . xxbos xxmaj not the worst movie xxmaj i 've seen but definitely not very good either . i myself am a paintball player , used to play airball a lot and going from woods to airball is quite a large change . xxmaj |

文本分类

对于文本分类,让我们回顾一下我们通常的问题:

- 输入和目标的类型是什么?文本和类别。

- 数据在哪里?在一个数据框中。

- 我们如何知道一个样本是在训练集还是验证集中?我们有一个

is_valid列。 - 我们如何获取输入?在

text列中。 - 我们如何获取目标?在

label列中。

imdb_clas = DataBlock(blocks=(TextBlock.from_df('text', seq_len=72, vocab=dls.vocab), CategoryBlock),

get_x=ColReader('text'),

get_y=ColReader('label'),

splitter=ColSplitter())如前面的示例中,我们使用类方法构建一个 TextBlock。我们可以传入语言模型的词汇(对于 ULMFit 方法非常有用)。我们还展示了 seq_len 参数(默认为 72),只是因为你需要确保在这里和你的 text_classifier_learner 中使用相同的值。

您需要确保在稍后定义的 TextBlock 和 Learner 中使用相同的 seq_len 。

dls = imdb_clas.dataloaders(df, bs=64)

dls.show_batch()| text | category | |

|---|---|---|

| 0 | xxbos xxmaj raising xxmaj victor xxmaj vargas : a xxmaj review \n\n xxmaj you know , xxmaj raising xxmaj victor xxmaj vargas is like sticking your hands into a big , xxunk bowl of xxunk . xxmaj it 's warm and gooey , but you 're not sure if it feels right . xxmaj try as i might , no matter how warm and gooey xxmaj raising xxmaj victor xxmaj vargas became i was always aware that something did n't quite feel right . xxmaj victor xxmaj vargas suffers from a certain xxunk on the director 's part . xxmaj apparently , the director thought that the ethnic backdrop of a xxmaj latino family on the lower east side , and an xxunk storyline would make the film critic proof . xxmaj he was right , but it did n't fool me . xxmaj raising xxmaj victor xxmaj vargas is | negative |

| 1 | xxbos xxup the xxup shop xxup around xxup the xxup corner is one of the xxunk and most feel - good romantic comedies ever made . xxmaj there 's just no getting around that , and it 's hard to actually put one 's feeling for this film into words . xxmaj it 's not one of those films that tries too hard , nor does it come up with the xxunk possible scenarios to get the two protagonists together in the end . xxmaj in fact , all its charm is xxunk , contained within the characters and the setting and the plot … which is highly believable to xxunk . xxmaj it 's easy to think that such a love story , as beautiful as any other ever told , * could * happen to you … a feeling you do n't often get from other romantic comedies | positive |

| 2 | xxbos xxmaj now that xxmaj che(2008 ) has finished its relatively short xxmaj australian cinema run ( extremely limited xxunk screen in xxmaj xxunk , after xxunk ) , i can xxunk join both xxunk of " at xxmaj the xxmaj movies " in taking xxmaj steven xxmaj soderbergh to task . \n\n xxmaj it 's usually satisfying to watch a film director change his style / subject , but xxmaj soderbergh 's most recent stinker , xxmaj the xxmaj girlfriend xxmaj xxunk ) , was also missing a story , so narrative ( and editing ? ) seem to suddenly be xxmaj soderbergh 's main challenge . xxmaj strange , after 20 - odd years in the business . xxmaj he was probably never much good at narrative , just xxunk it well inside " edgy " projects . \n\n xxmaj none of this excuses him this present , | negative |

| 3 | xxbos xxmaj this film sat on my xxmaj xxunk for weeks before i watched it . i xxunk a self - indulgent xxunk flick about relationships gone bad . i was wrong ; this was an xxunk xxunk into the screwed - up xxunk of xxmaj new xxmaj xxunk . \n\n xxmaj the format is the same as xxmaj max xxmaj xxunk ' " la xxmaj xxunk , " based on a play by xxmaj arthur xxmaj xxunk , who is given an " inspired by " credit . xxmaj it starts from one person , a prostitute , standing on a street corner in xxmaj brooklyn . xxmaj she is picked up by a home contractor , who has sex with her on the hood of a car , but ca n't come . xxmaj he refuses to pay her . xxmaj when he 's off xxunk , she | positive |

| 4 | xxbos i really wanted to love this show . i truly , honestly did . \n\n xxmaj for the first time , gay viewers get their own version of the " the xxmaj bachelor " . xxmaj with the help of his obligatory " hag " xxmaj xxunk , xxmaj james , a good looking , well - to - do thirty - something has the chance of love with 15 suitors ( or " mates " as they are referred to in the show ) . xxmaj the only problem is half of them are straight and xxmaj james does n't know this . xxmaj if xxmaj james picks a gay one , they get a trip to xxmaj new xxmaj zealand , and xxmaj if he picks a straight one , straight guy gets $ 25 , xxrep 3 0 . xxmaj how can this not be fun | negative |

| 5 | xxbos xxmaj many neglect that this is n't just a classic due to the fact that it 's the first 3d game , or even the first xxunk - up . xxmaj it 's also one of the first xxunk games , one of the xxunk definitely the first ) truly claustrophobic games , and just a pretty well - xxunk gaming experience in general . xxmaj with graphics that are terribly dated today , the game xxunk you into the role of xxunk even * think * xxmaj i 'm going to attempt spelling his last name ! ) , an xxmaj american xxup xxunk . caught in an underground bunker . xxmaj you fight and search your way through xxunk in order to achieve different xxunk for the six xxunk , let 's face it , most of them are just an excuse to hand you a weapon | positive |

| 6 | xxbos xxmaj the xxmaj blob starts with one of the most bizarre theme songs ever , xxunk by an uncredited xxmaj burt xxmaj xxunk of all people ! xxmaj you really have to hear it to believe it , xxmaj the xxmaj blob may be worth watching just for this song alone & my user comment summary is just a little taste of the classy lyrics … xxmaj after this xxunk opening credits sequence xxmaj the xxmaj blob introduces us , the viewer that is , to xxmaj steve xxmaj xxunk ( steve mcqueen as xxmaj steven mcqueen ) & his girlfriend xxmaj jane xxmaj martin ( xxunk xxmaj xxunk ) who are xxunk on their own somewhere & witness what looks like a meteorite falling to xxmaj earth in nearby woods . xxmaj an old man ( xxunk xxmaj xxunk as xxmaj xxunk xxmaj xxunk ) who lives in | negative |

| 7 | xxbos xxmaj the year 2005 saw no xxunk than 3 filmed productions of xxup h. xxup g. xxmaj wells ' great novel , " war of the xxmaj worlds " . xxmaj this is perhaps the least well - known and very probably the best of them . xxmaj no other version of xxunk has ever attempted not only to present the story very much as xxmaj wells wrote it , but also to create the atmosphere of the time in which it was supposed to take place : the last year of the 19th xxmaj century , 1900 …▁ using xxmaj wells ' original setting , in and near xxmaj xxunk , xxmaj england . \n\n imdb seems xxunk to what they regard as " spoilers " . xxmaj that might apply with some films , where the ending might actually be a surprise , but with regard to | positive |

| 8 | xxbos xxmaj well , what can i say . \n\n " what the xxmaj xxunk do we xxmaj know " has achieved the nearly impossible - leaving behind such masterpieces of the genre as " the xxmaj xxunk " , " the xxmaj xxunk xxmaj master " , " xxunk " , and so fourth , it will go down in history as the single worst movie i have ever seen in its xxunk . xxmaj and that , ladies and gentlemen , is impressive indeed , for i have seen many a bad movie . \n\n xxmaj this masterpiece of modern cinema consists of two xxunk parts , xxunk between a silly and contrived plot about an extremely annoying photographer , abandoned by her husband and forced to take anti - xxunk to survive , and a bunch of talking heads going on about how quantum physics supposedly xxunk | negative |

表格数据

表格数据并不真正使用数据块API,因为它依赖于另一个API,即TabularPandas,用于高效的预处理和批处理(未来将会有一些与数据块API兼容但效率较低的API添加)。您仍然可以为目标使用不同的块。

from fastai.tabular.core import *在我们的示例中,我们将查看成人数据集的一个子集,该数据集包含一些普查数据,任务是预测某人是否赚超过50k。

adult_source = untar_data(URLs.ADULT_SAMPLE)

df = pd.read_csv(adult_source/'adult.csv')

df.head()| age | workclass | fnlwgt | education | education-num | marital-status | occupation | relationship | race | sex | capital-gain | capital-loss | hours-per-week | native-country | salary | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 49 | Private | 101320 | Assoc-acdm | 12.0 | Married-civ-spouse | NaN | Wife | White | Female | 0 | 1902 | 40 | United-States | >=50k |

| 1 | 44 | Private | 236746 | Masters | 14.0 | Divorced | Exec-managerial | Not-in-family | White | Male | 10520 | 0 | 45 | United-States | >=50k |

| 2 | 38 | Private | 96185 | HS-grad | NaN | Divorced | NaN | Unmarried | Black | Female | 0 | 0 | 32 | United-States | <50k |

| 3 | 38 | Self-emp-inc | 112847 | Prof-school | 15.0 | Married-civ-spouse | Prof-specialty | Husband | Asian-Pac-Islander | Male | 0 | 0 | 40 | United-States | >=50k |

| 4 | 42 | Self-emp-not-inc | 82297 | 7th-8th | NaN | Married-civ-spouse | Other-service | Wife | Black | Female | 0 | 0 | 50 | United-States | <50k |

在一个表格问题中,我们需要将列分为表示连续变量的列(例如年龄)和表示分类变量的列(例如教育):

cat_names = ['workclass', 'education', 'marital-status', 'occupation', 'relationship', 'race']

cont_names = ['age', 'fnlwgt', 'education-num']在fastai中的标准预处理,使用以下预处理器:

procs = [Categorify, FillMissing, Normalize]Categorify 将把分类列转换为索引,FillMissing 将填充连续列中的缺失值(如果有的话),并在必要时添加一个缺失的分类列。Normalize 将对连续列进行归一化处理(减去均值并除以标准差)。

我们仍然可以使用任何划分器根据需要创建划分:

splits = RandomSplitter()(range_of(df))然后所有内容都放入一个 TabularPandas 对象中:

to = TabularPandas(df, procs, cat_names, cont_names, y_names="salary", splits=splits, y_block=CategoryBlock)我们将 y_block=CategoryBlock 放在这里是为了向您展示如何自定义目标的块,但通常它是从数据中推断出来的,因此通常无需传递它。

dls = to.dataloaders()

dls.show_batch()| workclass | education | marital-status | occupation | relationship | race | education-num_na | age | fnlwgt | education-num | salary | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | Self-emp-not-inc | Prof-school | Never-married | Prof-specialty | Not-in-family | White | False | 34.0 | 204374.999924 | 15.0 | >=50k |

| 1 | Private | Some-college | Never-married | Adm-clerical | Not-in-family | White | False | 62.0 | 141307.999756 | 10.0 | <50k |

| 2 | Private | Assoc-acdm | Never-married | Other-service | Not-in-family | White | False | 23.0 | 152188.999004 | 12.0 | <50k |

| 3 | Private | HS-grad | Divorced | Craft-repair | Unmarried | White | False | 38.0 | 27407.999090 | 9.0 | <50k |

| 4 | Private | Bachelors | Never-married | Prof-specialty | Not-in-family | White | False | 32.0 | 340917.004812 | 13.0 | >=50k |

| 5 | Private | Bachelors | Never-married | Prof-specialty | Not-in-family | White | False | 22.0 | 153515.999598 | 13.0 | <50k |

| 6 | Self-emp-not-inc | Doctorate | Never-married | Prof-specialty | Not-in-family | White | False | 46.0 | 165754.000335 | 16.0 | <50k |

| 7 | Private | Masters | Married-civ-spouse | Prof-specialty | Husband | White | False | 33.0 | 202050.999896 | 14.0 | <50k |

| 8 | Private | Assoc-acdm | Divorced | Sales | Unmarried | White | False | 40.0 | 197919.000079 | 12.0 | <50k |

| 9 | ? | Some-college | Never-married | ? | Own-child | White | False | 18.0 | 264924.000434 | 10.0 | <50k |

完 -