处理生成数据集的多样性

在之前的章节 (在新标签页中打开)中,我们讨论了使用LLM进行合成数据集生成的潜力,以进一步微调本地检索器模型。这种方法之所以可行,是因为有大量未标记文档的可用性。每个文档用于生成一个或多个合成查询,并形成一个查询-文档对。

但如果信息检索不是你的任务呢?假设你正在处理一个法律文档分类问题,但不允许将任何数据发送到外部API。在这种情况下,你需要训练一个本地模型。然而,收集数据可能会成为一个重大障碍,导致产品开发延迟。

为了简化,让我们假设目标是生成儿童故事。这个任务是Eldan等人(2023年)(在新标签页中打开)研究的起点。每个故事由2-3个段落组成,遵循简单的情节和主题,而整个数据集涵盖了儿童的词汇和事实知识。

语言不仅仅是一个规则和符号的系统;它传达和解释意义。使用大型语言模型生成训练数据的主要挑战是确保数据集的多样性。即使有较高的生成温度 (在新标签页中打开),模型也可能产生缺乏必要多样性的重复数据集(即使是儿童语言)。连贯性和相关性是自然语言生成的其他挑战。

为了解决多样性问题,作者准备了一个包含约1500个基本单词的词汇表,这些单词反映了一个典型儿童的词汇量,分为名词、动词和形容词。在每一代中,随机选择一个动词、一个名词和一个形容词。然后,模型生成一个整合这些随机单词的故事。

该技术显著扩大了数据集的多样性,确保故事涵盖儿童的整个词汇和多样化的概念组合。此外,作者还整合了潜在的故事特征(如对话、情节转折、坏结局或道德教训)。每个故事都随机选择了这些特征的一个子集,并提示模型将其包含在内。

提示:

Write a short story (3-5 paragraphs) which only uses very simple words that a 3 year old child would likely understand. The story should use the verb ”{random.choice(verbs_list)}”, the noun ”{random.choice(nouns_list)}” and the adjective ”{random.choice(adjectives_list)}”. The story should have the following features: {random.choice(features_list)}, {random.choice(features_list)}. Remember to only use simple words!假设间隙填充如下:

提示:

Write a short story (3-5 paragraphs) which only uses very simple words that a 3 year old child would likely understand. The story should use the verb ”decorate”, the noun ”thunder” and the adjective ”ancient”. The story should have the following features: the story should contain at least one dialogue, the story has a bad ending. Remember to only use simple words!输出:

Once upon a time, in an ancient house, there lived a girl named Lily. She loved to decorate her room with pretty things. One day, she found a big box in the attic. She opened it and saw many shiny decorations. Lily was very happy and decided to use them in her room. As Lily was decorating her room, the sky outside became dark. There was a loud thunder sound, and Lily got scared. She ran to her mom and said, ”Mommy, the thunder is so loud!” Her mom hugged her and said, ”Don’t worry, it will pass soon.” But the thunder did not stop. It got louder and louder, and the ancient house started to shake. Suddenly, the roof fell down on the pretty decorations. Lily was sad because her room was not pretty anymore. The end.在这里,我们依赖于最新的文本生成模型(GPT-3.5和GPT-4),它们可以根据指令生成大量的合成内容。由于我们每次随机制定提示,并且模型严格遵守它,因此故事变得极其多样化。即使有一两个词保持不变,叙述也会完全不同。本质上,我们应用这种技术将随机性注入提示中,生成多样化的数据集。

你明白了:

- 识别在您的合成数据集中不同样本之间可能变化的参数/实体;

- 生成或手动编译这些实体的集合以填补空白;

- 通过随机选择实体进行插入来生成数据集。最好将生成温度设置为高于默认值但低于最大值;

- 在ChatGPT/GPT-4的生成结果上训练一个本地模型。

需要注意的是,用于生成的一个实体可能是一个类别标签。例如,在情感分类任务中,你可以直接在提示中提到“正面”或“负面”,以接收带有相应标签的生成文本。然后,使用这些数据训练一个本地分类器。

迭代(分层)合成数据生成

格式可以通过使用更多的实体来变得更加复杂,其中一些实体是由LLM本身预先生成的。例如,可以从生成故事摘要的请求开始,并写下必须出现在故事中的一句话。然后,在最终请求中使用生成的中间数据。

提示:

Summary: {a short summary generated by LLM, using the approach above}

Features: {copy the features from the initial prompt}

Sentence: {a sentence generated by LLM, which should be present in the story}

Words: {copy the words from the initial prompt}

Story:填写提示的示例:

提示:

Summary: Lily and Timmy build a sandcastle together and learn to compromise, but it gets knocked over by a gust of wind. They find beauty in the broken sandcastle and play happily with a butterfly.

Features: Dialogue, Foreshadowing, Twist

Sentence: One day, she went to the park and saw a beautiful butterfly.

Words: disagree, network, beautiful

Story:输出:

Once upon a time, there was a little girl named Lily. She loved to play with her toys and her friends. One day, she went to the park and saw a beautiful butterfly. She tried to catch it, but it flew away. Lily’s friend, Timmy, came to play with her. They decided to build a sandcastle, but they disagreed on how to build it. Lily wanted to make it tall, while Timmy wanted to make it wide. They argued for a while, but finally agreed to make it both tall and wide. As they were building the sandcastle, they noticed a group of ants working together. They realized that the ants were building a network of tunnels. Suddenly, a gust of wind came and knocked over their sandcastle. They were sad, but then they saw the butterfly again. It landed on the remains of their sandcastle and they realized that it was still beautiful, even in its broken state. They smiled and played together happily.因此,可以生成数十万个非常多样化的样本来训练模型。假设你需要训练一个分类器,用于确定文本是否包含对话或情节转折。由于初始提示包含标签,因此知道每个生成的样本需要预测的目标值。

教科书就是你所需要的一切

这种方法引发的一个关键问题是,在训练网络用于实际应用时,数据集的合成是否真的能带来好处。幸运的是,作者通过他们的研究解决了这个问题,并验证了使用从最先进的LLMs中获得的合成数据训练较小语言模型的有效性。

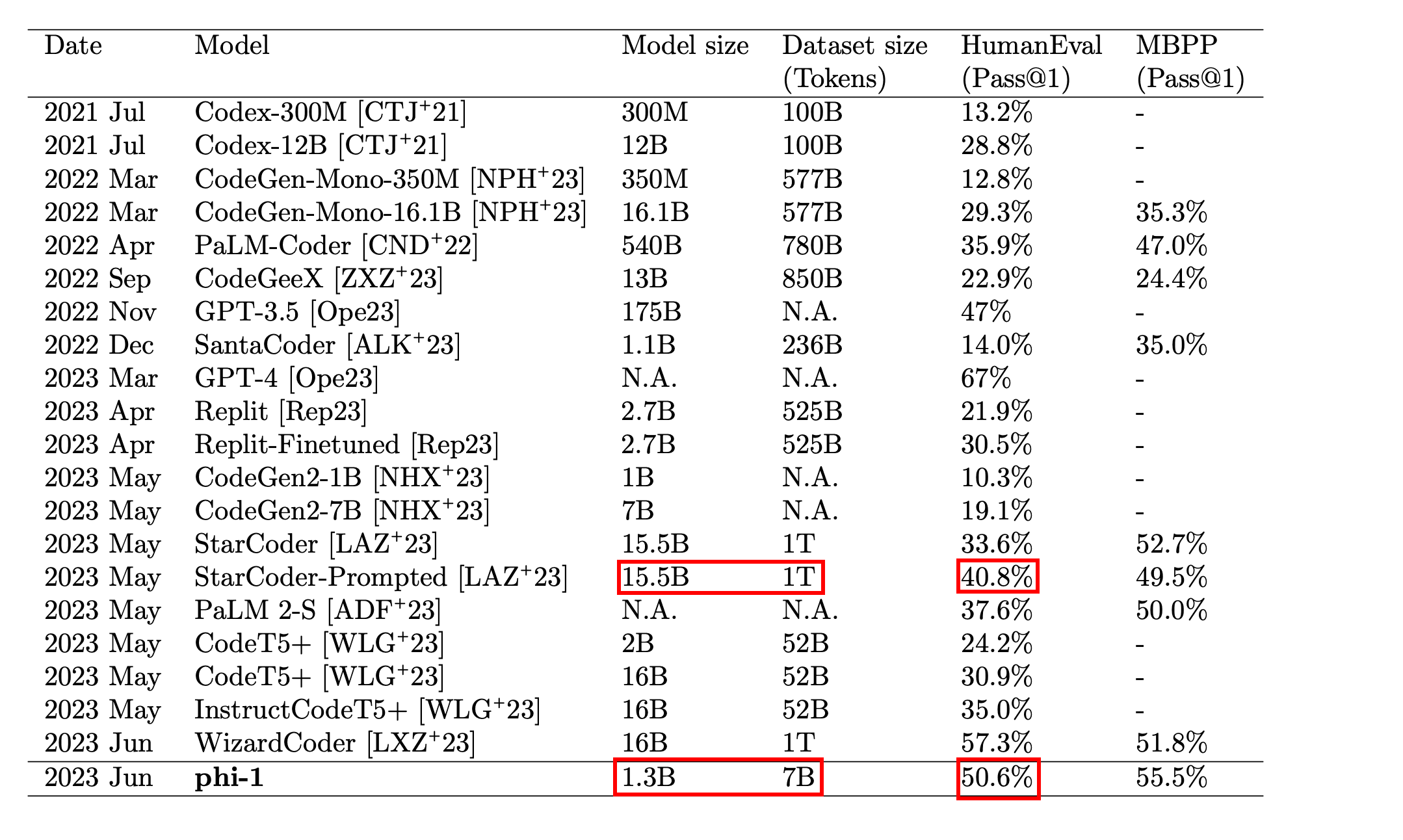

在他们的研究中,Gunasekar等人(2023)(在新标签页中打开)强调了高质量训练数据在他们模型中的重要性。他们认为,如果语言模型在类似于备受推崇的“教科书”特性的材料上进行训练,将会更加有效:清晰、全面、信息丰富且无偏见。

这些原则构成了创建用于训练LLM的半合成数据集Phi-1的基础。主要评估任务是生成一个遵循给定文本描述或文档字符串的Python函数。模型的质量使用HumanEval基准进行评估(Chen et al., 2021 (在新标签页中打开))。

作者强调了在这种方法中多样性的重要性,原因有几个。它使语言模型接触到各种编码表达和问题解决方法,减少了过拟合或依赖特定模式的风险,并提高了模型处理不熟悉或创新任务的能力。

为了解决代码编写挑战,作者们创建了类似教科书的文档,这些文档专注于促进推理和基本算法技能的主题。他们通过以下限制实现了多样性:

- 主题

- 目标受众

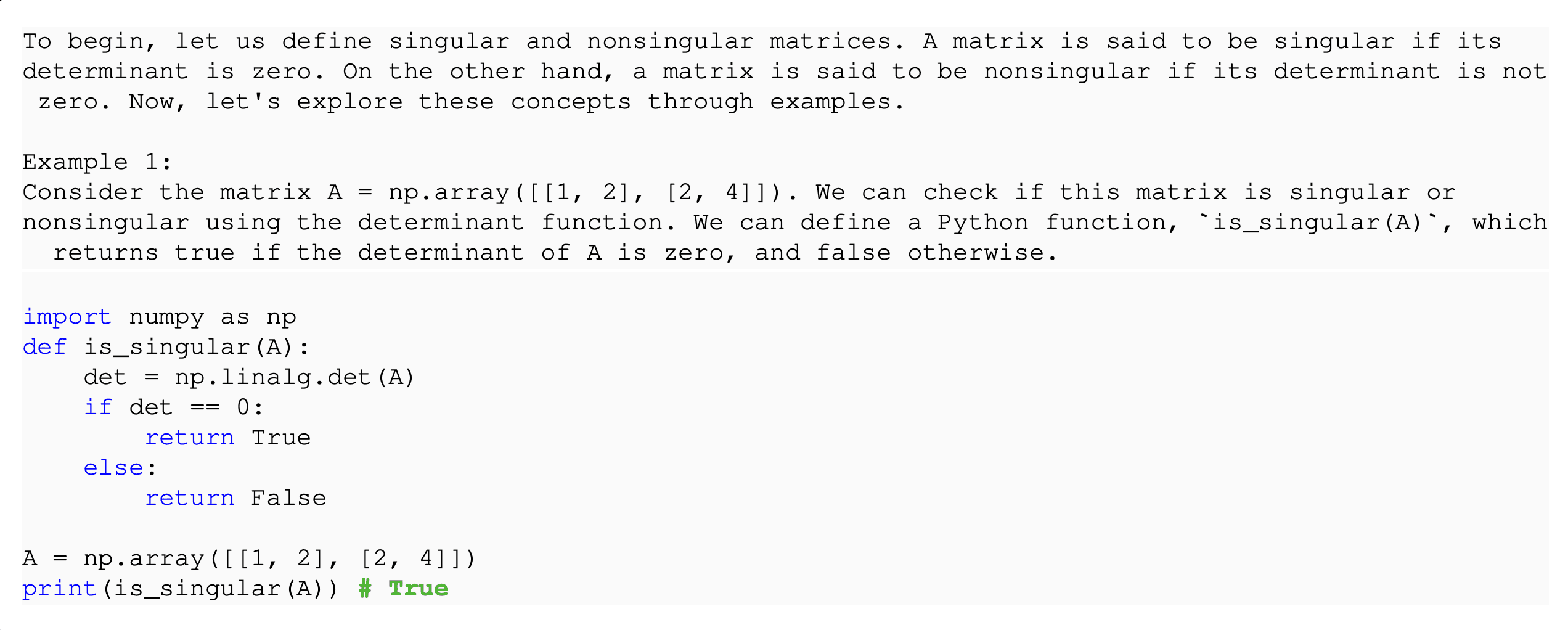

遗憾的是,作者没有提供用于生成合成数据的提示模板的具体信息。然而,他们确实展示了生成的结果。他们选择使用ChatGPT(GPT-3.5)而不是GPT-4,即使这种策略也通过使用合成数据微调模型产生了出色的结果。

图片来源:Gunasekar et al. (2023) (在新标签页中打开)

关注目标受众的限制是至关重要的。指定目标受众是增强合成数据多样性的绝佳方法。高中生、计算机科学本科生和博士生在解释和处理信息时肯定有不同的表达方式,这使得生成的数据更加多样化。让我们尝试重新创建提示。

提示:

Write an extract from a Computer Science textbook for a 1st-year bachelor. The coding language is Python 3.6.

This is an extract from the middle of the following topic: Singular matrices.

The extract starts with a high-level overview of the topic. Then, it presents an example and describes the solution in natural language. After that, it provides 1-2 code snippets, following the example. Each snippet has no more than 10 rows. There should be no text after code snippets.

Keep in mind that the extract should be concise and explain only one concept at a time. The code should represent a Python function & its call. All the common ML/DS libraries are available.

Don't be too verbose. The expected length of the extract is 2 paragraphs at most.输出:

**Singular Matrices**

In linear algebra, a matrix is termed as "singular" if it doesn't have an inverse. Another equivalent definition is when the determinant of the matrix is zero. Singular matrices are crucial because they represent systems of linear equations that do not have a unique solution.

Consider a 2x2 matrix `[[a, b], [c, d]]`. If the product of `ad` and `bc` is the same (i.e., `ad = bc`), then this matrix is singular. Let's explore this with an example: given the matrix `[[2, 4], [1, 2]]`, you'll find that the product of the diagonals yields the same result (2 * 2 = 4 * 1), thus it's a singular matrix.

import numpy as np

def is_singular(matrix):

determinant = np.linalg.det(matrix)

return abs(determinant) < 1e-9

matrix_example = np.array([[2, 4], [1, 2]])

print(is_singular(matrix_example)) # returns True非常接近!

总的来说,作者生成了10亿个标记来扩充模型的训练集,使得一个较小的模型(仅有15亿个参数)能够与规模是其十倍的模型相媲美(详情请参阅文章Gunasekar等人(2023年)(在新标签页中打开))。

图片来源:Gunasekar et al. (2023) (在新标签页中打开)

对于你的任务,你可能不需要如此大量的合成数据(因为作者研究了预训练,这需要大量资源)。然而,即使作为估计,以每1k个标记$0.002的价格(标准ChatGPT定价),生成的标记将花费$2000,提示部分也将花费大约相同的金额。

请记住,随着领域变得更加细分,尤其是在语言偏离英语(以及其他因素)的情况下,对合成数据进行微调变得更加有价值。此外,这种方法与思维链(CoT)(在新标签页中打开)配合得很好,有助于本地模型提高其推理能力。其他提示技术也有效。别忘了,像Alpaca(Taori等人,(2023)(在新标签页中打开))和Vicuna(Zheng等人,(2023)(在新标签页中打开))这样的开源模型通过对合成数据进行微调表现出色。