备注

转到末尾 以下载完整的示例代码。或者通过 Binder 在浏览器中运行此示例。

使用 Haar-like 特征描述符进行人脸分类#

Haar-like 特征描述符成功地用于实现第一个实时人脸检测器 [1]。受此应用启发,我们提出了一个示例,说明如何提取、选择和分类 Haar-like 特征以检测人脸与非人脸。

注释#

此示例依赖于 scikit-learn 进行特征选择和分类。

参考文献#

from time import time

import numpy as np

import matplotlib.pyplot as plt

from sklearn.ensemble import RandomForestClassifier

from sklearn.model_selection import train_test_split

from sklearn.metrics import roc_auc_score

from skimage.data import lfw_subset

from skimage.transform import integral_image

from skimage.feature import haar_like_feature

from skimage.feature import haar_like_feature_coord

from skimage.feature import draw_haar_like_feature

从图像中提取 Haar-like 特征的过程相对简单。首先,定义一个感兴趣区域(ROI)。其次,计算该 ROI 内的积分图像。最后,使用积分图像提取特征。

def extract_feature_image(img, feature_type, feature_coord=None):

"""Extract the haar feature for the current image"""

ii = integral_image(img)

return haar_like_feature(

ii,

0,

0,

ii.shape[0],

ii.shape[1],

feature_type=feature_type,

feature_coord=feature_coord,

)

我们使用CBCL数据集的一个子集,该子集由100张人脸图像和100张非人脸图像组成。每张图像已调整为19×19像素的感兴趣区域(ROI)。我们从每组中选择75张图像来训练分类器并确定最显著的特征。每个类别剩余的25张图像用于评估分类器的性能。

images = lfw_subset()

# To speed up the example, extract the two types of features only

feature_types = ['type-2-x', 'type-2-y']

# Compute the result

t_start = time()

X = [extract_feature_image(img, feature_types) for img in images]

X = np.stack(X)

time_full_feature_comp = time() - t_start

# Label images (100 faces and 100 non-faces)

y = np.array([1] * 100 + [0] * 100)

X_train, X_test, y_train, y_test = train_test_split(

X, y, train_size=150, random_state=0, stratify=y

)

# Extract all possible features

feature_coord, feature_type = haar_like_feature_coord(

width=images.shape[2], height=images.shape[1], feature_type=feature_types

)

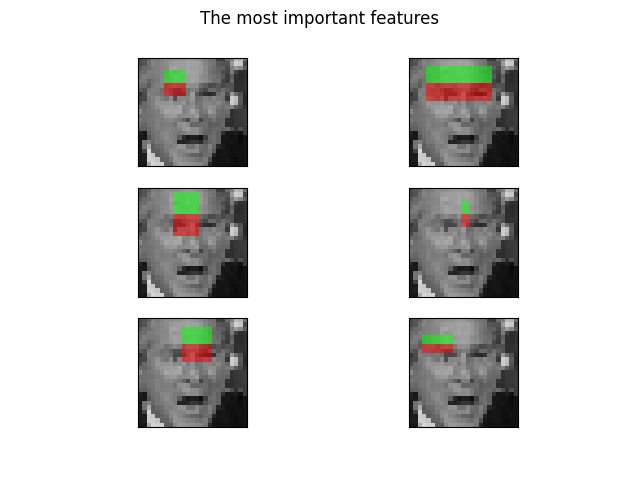

随机森林分类器可以被训练以选择最显著的特征,特别是用于人脸分类。其想法是确定哪些特征最常被树的集合使用。通过仅在后续步骤中使用最显著的特征,我们可以在保持准确性的同时大幅加快计算速度。

# Train a random forest classifier and assess its performance

clf = RandomForestClassifier(

n_estimators=1000, max_depth=None, max_features=100, n_jobs=-1, random_state=0

)

t_start = time()

clf.fit(X_train, y_train)

time_full_train = time() - t_start

auc_full_features = roc_auc_score(y_test, clf.predict_proba(X_test)[:, 1])

# Sort features in order of importance and plot the six most significant

idx_sorted = np.argsort(clf.feature_importances_)[::-1]

fig, axes = plt.subplots(3, 2)

for idx, ax in enumerate(axes.ravel()):

image = images[0]

image = draw_haar_like_feature(

image, 0, 0, images.shape[2], images.shape[1], [feature_coord[idx_sorted[idx]]]

)

ax.imshow(image)

ax.set_xticks([])

ax.set_yticks([])

_ = fig.suptitle('The most important features')

我们可以通过检查特征重要性的累积和来选择最重要的特征。在这个例子中,我们保留了代表累积值70%的特征(这相当于仅使用总特征数量的3%)。

cdf_feature_importances = np.cumsum(clf.feature_importances_[idx_sorted])

cdf_feature_importances /= cdf_feature_importances[-1] # divide by max value

sig_feature_count = np.count_nonzero(cdf_feature_importances < 0.7)

sig_feature_percent = round(sig_feature_count / len(cdf_feature_importances) * 100, 1)

print(

f'{sig_feature_count} features, or {sig_feature_percent}%, '

f'account for 70% of branch points in the random forest.'

)

# Select the determined number of most informative features

feature_coord_sel = feature_coord[idx_sorted[:sig_feature_count]]

feature_type_sel = feature_type[idx_sorted[:sig_feature_count]]

# Note: it is also possible to select the features directly from the matrix X,

# but we would like to emphasize the usage of `feature_coord` and `feature_type`

# to recompute a subset of desired features.

# Compute the result

t_start = time()

X = [extract_feature_image(img, feature_type_sel, feature_coord_sel) for img in images]

X = np.stack(X)

time_subs_feature_comp = time() - t_start

y = np.array([1] * 100 + [0] * 100)

X_train, X_test, y_train, y_test = train_test_split(

X, y, train_size=150, random_state=0, stratify=y

)

712 features, or 0.7%, account for 70% of branch points in the random forest.

一旦特征被提取,我们就可以训练和测试一个新的分类器。

t_start = time()

clf.fit(X_train, y_train)

time_subs_train = time() - t_start

auc_subs_features = roc_auc_score(y_test, clf.predict_proba(X_test)[:, 1])

summary = (

f'Computing the full feature set took '

f'{time_full_feature_comp:.3f}s, '

f'plus {time_full_train:.3f}s training, '

f'for an AUC of {auc_full_features:.2f}. '

f'Computing the restricted feature set took '

f'{time_subs_feature_comp:.3f}s, plus {time_subs_train:.3f}s '

f'training, for an AUC of {auc_subs_features:.2f}.'

)

print(summary)

plt.show()

Computing the full feature set took 16.979s, plus 0.658s training, for an AUC of 1.00. Computing the restricted feature set took 0.066s, plus 0.532s training, for an AUC of 1.00.

脚本总运行时间: (0 分钟 20.080 秒)