
Fundamental matrix estimation

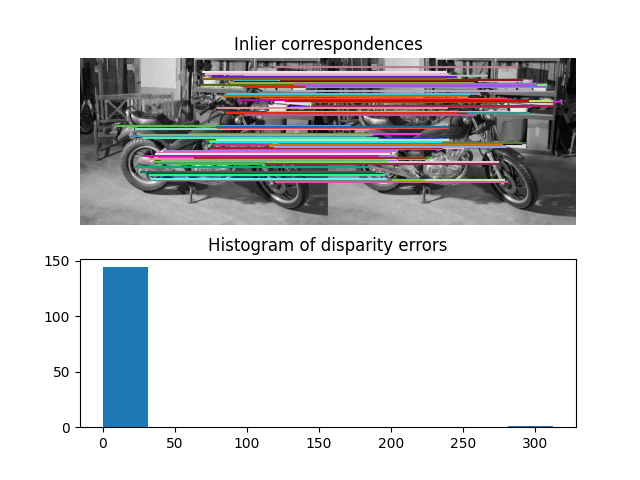

This example demonstrates how to robustly estimate epipolar geometry (the geometry of stereo vision) from sparse ORB feature correspondences.
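In the script, the correspondences come from `match_descriptors(..., cross_check=True)`. ORB descriptors are binary, so scikit-image compares them with the Hamming distance by default, and cross-checking keeps a pair only when each keypoint is the other's best match. The NumPy sketch below reproduces that mutual-nearest-neighbour rule on toy data. `hamming_cross_check` is a hypothetical helper written for illustration, not a scikit-image function, and it ignores the library's other options such as `max_distance` and `max_ratio`.

```python
import numpy as np


def hamming_cross_check(desc_a, desc_b):
    """Mutual-nearest-neighbour matching of binary descriptors.

    desc_a: (N, D) bool array; desc_b: (M, D) bool array.
    Returns a (K, 2) array of index pairs (i, j) where j is the nearest
    neighbour of i in desc_b and i is the nearest neighbour of j in desc_a.
    """
    # Pairwise Hamming distances (number of differing bits), shape (N, M).
    dist = (desc_a[:, None, :] != desc_b[None, :, :]).sum(axis=2)
    best_b_for_a = dist.argmin(axis=1)  # best match in B for each row of A
    best_a_for_b = dist.argmin(axis=0)  # best match in A for each row of B
    idx_a = np.arange(desc_a.shape[0])
    # Cross check: keep a pair only if both directions agree.
    mutual = best_a_for_b[best_b_for_a] == idx_a
    return np.column_stack((idx_a[mutual], best_b_for_a[mutual]))


# Toy data: 5 random 256-bit descriptors, and a shuffled copy of them with
# the first 10 bits flipped to simulate small appearance changes.
rng = np.random.default_rng(0)
desc_left = rng.random((5, 256)) > 0.5
perm = np.array([3, 0, 4, 1, 2])
desc_right = desc_left[perm]
desc_right[:, :10] = ~desc_right[:, :10]

matches = hamming_cross_check(desc_left, desc_right)
# Each row pairs left index i with the right index j for which perm[j] == i.
print(matches)
```

Brute-force distances cost O(N·M·D) memory here, which is fine for a toy example but not how one would match thousands of keypoints.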

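The fundamental matrix relates corresponding points between a pair of uncalibrated images. It maps a homogeneous image point in one image to an epipolar line in the other image.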
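For a point `x` in the first image, its match `x'` in the second image must lie on the epipolar line `l' = F x`, which is the same as requiring `x'^T F x = 0`. The sketch below checks this numerically for a hypothetical pair of pinhole cameras `P = K [I | 0]` and `P' = K [R | t]`, for which `F = K^-T [t]_x R K^-1`. The intrinsics `K`, the pose `(R, t)` and the 3D point are made-up values, points use the `(x, y, 1)` convention, and the purely sideways camera shift mimics a rectified stereo pair, so the epipolar line comes out horizontal.

```python
import numpy as np


def skew(t):
    """Cross-product matrix [t]_x, so that skew(t) @ v == np.cross(t, v)."""
    return np.array([[0.0, -t[2], t[1]],
                     [t[2], 0.0, -t[0]],
                     [-t[1], t[0], 0.0]])


# Hypothetical cameras: shared intrinsics K, no rotation, and the second
# camera centre shifted 0.5 units along +x (so t = -0.5 along x).
K = np.array([[700.0, 0.0, 320.0],
              [0.0, 700.0, 240.0],
              [0.0, 0.0, 1.0]])
R = np.eye(3)
t = np.array([-0.5, 0.0, 0.0])

# Fundamental matrix for P = K [I | 0] and P' = K [R | t].
K_inv = np.linalg.inv(K)
F = K_inv.T @ skew(t) @ R @ K_inv

# Project one 3D point into both images (homogeneous pixels, (x, y, 1)).
X = np.array([0.3, -0.2, 4.0])
x1 = K @ X
x1 /= x1[2]
x2 = K @ (R @ X + t)
x2 /= x2[2]

# Epipolar line (a, b, c) in image 2, i.e. a*x + b*y + c = 0.
l2 = F @ x1
print(x2 @ l2)       # epipolar constraint x2^T F x1, zero up to rounding
print(x1[1], x2[1])  # same row: the line is horizontal for this setup
```

With a rotation or a forward component in `t`, the same check still holds, but the epipolar lines are no longer horizontal.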

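Uncalibrated means that the intrinsic calibration (focal lengths, pixel skew, principal point) of the two cameras is not known. The fundamental matrix therefore only enables a projective 3D reconstruction of the captured scene. If the calibration is known, estimating the essential matrix enables a metric 3D reconstruction of the captured scene.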
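When the intrinsics `K` and `K'` are known, the essential matrix follows from the fundamental matrix as `E = K'^T F K`, and for cameras related by `(R, t)` it equals `[t]_x R`. A valid essential matrix has two equal non-zero singular values and one zero singular value. The sketch below uses made-up intrinsics and pose (assumptions, not values from this example), recovers `E` from `F`, and checks both properties. From images alone, `t` (and so the reconstruction) is only determined up to an overall scale.

```python
import numpy as np


def skew(t):
    """Cross-product matrix [t]_x, so that skew(t) @ v == np.cross(t, v)."""
    return np.array([[0.0, -t[2], t[1]],
                     [t[2], 0.0, -t[0]],
                     [-t[1], t[0], 0.0]])


# Hypothetical calibration (same K for both cameras) and relative pose.
K = np.array([[700.0, 0.0, 320.0],
              [0.0, 700.0, 240.0],
              [0.0, 0.0, 1.0]])
angle = np.deg2rad(5.0)
R = np.array([[np.cos(angle), 0.0, np.sin(angle)],  # rotation about the y axis
              [0.0, 1.0, 0.0],
              [-np.sin(angle), 0.0, np.cos(angle)]])
t = np.array([-0.5, 0.0, 0.05])

# The fundamental matrix that pixel correspondences alone would constrain.
K_inv = np.linalg.inv(K)
F = K_inv.T @ skew(t) @ R @ K_inv

# Known calibration: strip the intrinsics to get the essential matrix.
E = K.T @ F @ K

# Singular values of an essential matrix: (s, s, 0); here s = |t|.
singular_values = np.linalg.svd(E, compute_uv=False)
print(singular_values)
```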

Number of matches: 223

Number of inliers: 162
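The two counts above give a rough feel for the `max_trials=5000` setting in the script. With inlier ratio `w` and minimal sample size `s` (here `min_samples=8`), one random sample is outlier-free with probability `w^s`, so about `N = log(1 - p) / log(1 - w^s)` trials suffice to draw at least one clean sample with probability `p`. The sketch evaluates this textbook estimate for `w = 162/223` and `p = 0.99`, assuming samples are drawn independently. `ransac_trials` is a hypothetical helper; scikit-image's `ransac` exposes its own early-stopping criterion through its `stop_probability` parameter.

```python
import math


def ransac_trials(inlier_ratio, sample_size, confidence=0.99):
    """Trials needed so that, with probability `confidence`, at least one
    random minimal sample contains only inliers."""
    p_clean_sample = inlier_ratio ** sample_size
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - p_clean_sample))


# Inlier ratio observed in the run above (162 inliers out of 223 matches).
n_trials = ransac_trials(162 / 223, sample_size=8)
print(n_trials)
```

The result is a few dozen trials, so 5000 leaves a wide margin. The inlier ratio is only known after RANSAC has run, however, which is why a generous fixed budget is a common choice.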


```python
import numpy as np
import matplotlib.pyplot as plt

from skimage import data
from skimage.color import rgb2gray
from skimage.feature import match_descriptors, ORB, plot_matched_features
from skimage.measure import ransac
from skimage.transform import FundamentalMatrixTransform

img_left, img_right, groundtruth_disp = data.stereo_motorcycle()
img_left, img_right = map(rgb2gray, (img_left, img_right))

# Find sparse feature correspondences between left and right image.

descriptor_extractor = ORB()

descriptor_extractor.detect_and_extract(img_left)
keypoints_left = descriptor_extractor.keypoints
descriptors_left = descriptor_extractor.descriptors

descriptor_extractor.detect_and_extract(img_right)
keypoints_right = descriptor_extractor.keypoints
descriptors_right = descriptor_extractor.descriptors

# Keep only mutual nearest neighbours (Hamming distance for binary descriptors).
matches = match_descriptors(descriptors_left, descriptors_right, cross_check=True)

print(f'Number of matches: {matches.shape[0]}')

# Estimate the epipolar geometry between the left and right image.

random_seed = 9
rng = np.random.default_rng(random_seed)

model, inliers = ransac(
    (keypoints_left[matches[:, 0]], keypoints_right[matches[:, 1]]),
    FundamentalMatrixTransform,
    min_samples=8,  # minimal sample size of the eight-point algorithm
    residual_threshold=1,
    max_trials=5000,
    rng=rng,
)

inlier_keypoints_left = keypoints_left[matches[inliers, 0]]
inlier_keypoints_right = keypoints_right[matches[inliers, 1]]

print(f'Number of inliers: {inliers.sum()}')

# Compare estimated sparse disparities to the dense ground-truth disparities.
# Keypoints are (row, col), so the disparity is the difference in columns.

disp = inlier_keypoints_left[:, 1] - inlier_keypoints_right[:, 1]
disp_coords = np.round(inlier_keypoints_left).astype(np.int64)
disp_idxs = np.ravel_multi_index(disp_coords.T, groundtruth_disp.shape)
disp_error = np.abs(groundtruth_disp.ravel()[disp_idxs] - disp)
# Discard keypoints where the ground-truth disparity is not finite.
disp_error = disp_error[np.isfinite(disp_error)]

# Visualize the results.

fig, ax = plt.subplots(nrows=2, ncols=1)

plt.gray()

# `plot_matches` is deprecated since 0.23; `plot_matched_features` replaces it.
plot_matched_features(
    img_left,
    img_right,
    keypoints0=keypoints_left,
    keypoints1=keypoints_right,
    matches=matches[inliers],
    ax=ax[0],
    only_matches=True,
)
ax[0].axis("off")
ax[0].set_title("Inlier correspondences")

ax[1].hist(disp_error)
ax[1].set_title("Histogram of disparity errors")

plt.show()
```
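In the script, `ransac` fits `FundamentalMatrixTransform` to each 8-point sample and compares the model's residuals against `residual_threshold`. Its documentation describes estimation with the eight-point algorithm and residuals as Sampson distances; treat that as an assumption here. The NumPy sketch below implements the classic normalized eight-point algorithm (Hartley normalization, linear solve via SVD, rank-2 enforcement) together with the Sampson distance, and runs it on synthetic, noise-free correspondences. It illustrates the technique and is not scikit-image's implementation; the helper names, camera parameters and points are hypothetical, and points are in `(x, y)` order rather than the `(row, col)` order of scikit-image keypoints.

```python
import numpy as np


def to_homogeneous(points):
    """(N, 2) -> (N, 3) by appending a column of ones."""
    return np.column_stack((points, np.ones(len(points))))


def normalize(points):
    """Hartley normalization: move the centroid to the origin and scale so the
    mean distance from it is sqrt(2). Returns the normalized homogeneous
    points and the 3x3 similarity transform T that produced them."""
    centroid = points.mean(axis=0)
    scale = np.sqrt(2) / np.linalg.norm(points - centroid, axis=1).mean()
    T = np.array([[scale, 0.0, -scale * centroid[0]],
                  [0.0, scale, -scale * centroid[1]],
                  [0.0, 0.0, 1.0]])
    return to_homogeneous(points) @ T.T, T


def eight_point(src, dst):
    """Estimate F with dst_h^T @ F @ src_h = 0 from N >= 8 (x, y) pairs."""
    s, T_src = normalize(src)
    d, T_dst = normalize(dst)
    # One linear equation per correspondence in the 9 entries of F (row-major).
    A = np.column_stack([d[:, 0] * s[:, 0], d[:, 0] * s[:, 1], d[:, 0],
                         d[:, 1] * s[:, 0], d[:, 1] * s[:, 1], d[:, 1],
                         s[:, 0], s[:, 1], np.ones(len(s))])
    # Least-squares solution with ||f|| = 1: the last right singular vector.
    F_n = np.linalg.svd(A)[2][-1].reshape(3, 3)
    # Enforce rank 2 by zeroing the smallest singular value.
    U, S, Vt = np.linalg.svd(F_n)
    F_n = U @ np.diag([S[0], S[1], 0.0]) @ Vt
    # Undo the normalization on both sides, then fix the arbitrary scale.
    F = T_dst.T @ F_n @ T_src
    return F / np.linalg.norm(F)


def sampson_distance(F, src, dst):
    """First-order approximation of each pair's geometric distance from
    satisfying the epipolar constraint, in pixels."""
    src_h, dst_h = to_homogeneous(src), to_homogeneous(dst)
    F_src = src_h @ F.T  # row i is F @ src_h[i]
    Ft_dst = dst_h @ F   # row i is F.T @ dst_h[i]
    algebraic = np.sum(dst_h * F_src, axis=1)  # dst_h[i]^T F src_h[i]
    denom = (F_src[:, 0] ** 2 + F_src[:, 1] ** 2
             + Ft_dst[:, 0] ** 2 + Ft_dst[:, 1] ** 2)
    return np.abs(algebraic) / np.sqrt(denom)


# Synthetic, noise-free scene (hypothetical cameras and 3D points).
rng = np.random.default_rng(0)
K = np.array([[700.0, 0.0, 320.0], [0.0, 700.0, 240.0], [0.0, 0.0, 1.0]])
a = np.deg2rad(5.0)
R = np.array([[np.cos(a), 0.0, np.sin(a)], [0.0, 1.0, 0.0],
              [-np.sin(a), 0.0, np.cos(a)]])
t = np.array([-0.5, 0.0, 0.05])
X = rng.uniform([-1.0, -1.0, 4.0], [1.0, 1.0, 8.0], size=(20, 3))

# Project into P = K [I | 0] and P' = K [R | t], then dehomogenize.
x1 = X @ K.T
x2 = (X @ R.T + t) @ K.T
src = x1[:, :2] / x1[:, 2:]
dst = x2[:, :2] / x2[:, 2:]

F_est = eight_point(src, dst)
print(sampson_distance(F_est, src, dst).max())  # near 0 for exact data
```

With exact data the residuals vanish up to rounding; shifting every `dst` point by a few pixels makes them grow by a comparable amount, which is the kind of quantity `residual_threshold=1` in the script is compared with.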

Total running time of the script: (0 minutes 0.998 seconds)