备注

Ray 2.10.0 引入了 RLlib 的“新 API 栈”的 alpha 阶段。Ray 团队计划将算法、示例脚本和文档迁移到新的代码库中,从而在 Ray 3.0 之前的后续小版本中逐步替换“旧 API 栈”(例如,ModelV2、Policy、RolloutWorker)。

然而,请注意,到目前为止,只有 PPO(单代理和多代理)和 SAC(仅单代理)支持“新 API 堆栈”,并且默认情况下继续使用旧 API 运行。您可以继续使用现有的自定义(旧堆栈)类。

请参阅此处 以获取有关如何使用新API堆栈的更多详细信息。

模型、预处理器和动作分布#

以下图表提供了RLlib中不同组件之间数据流的概念概述。我们从 Environment 开始,它 - 给定一个动作 - 产生一个观察。观察在发送到神经网络 Model 之前,由 Preprocessor 和 Filter (例如用于运行平均归一化)进行预处理。模型输出随后由 ActionDistribution 解释,以确定下一个动作。

绿色高亮的部分可以用用户自定义的实现来替换,如接下来的章节所述。紫色部分是 RLlib 内部的组件,这意味着它们只能通过修改算法源代码来修改。

默认行为#

内置预处理器#

RLlib 尝试根据环境的观察空间选择其内置的预处理器之一。因此,以下简单规则适用:

离散观测值被独热编码,例如

Discrete(3) 且 value=1 -> [0, 1, 0]。MultiDiscrete 观测值通过将每个离散元素进行独热编码,然后将相应的独热编码向量连接起来进行编码。例如

MultiDiscrete([3, 4]) 和 value=[1, 3] -> [0 1 0 0 0 0 1]因为第一个1被编码为[0 1 0],第二个3被编码为[0 0 0 1];这两个向量随后被连接为[0 1 0 0 0 0 1]。元组和字典观察结果被展平,因此,离散和多离散子空间按照上述方式处理。此外,原始的字典/元组观察结果仍然可以通过以下方式访问:a) 在模型中通过输入字典的“obs”键(展平的观察结果在“obs_flat”中),以及 b) 通过策略中的以下代码行(例如,将其放入你的损失函数中以访问原始观察结果:

dict_or_tuple_obs = restore_original_dimensions(input_dict["obs"], self.obs_space, "tf|torch"))。

对于Atari观察空间,RLlib默认使用 DeepMind预处理器 (preprocessor_pref=deepmind)。然而,如果算法的配置键 preprocessor_pref 被设置为“rllib”,则适用于Atari类型观察空间的映射如下:

形状为

(210, 160, 3)的图像会被缩小到dim x dim,其中dim是一个模型配置键(见下文的默认模型配置)。此外,你可以设置grayscale=True以将颜色通道减少到1,或者设置zero_mean=True以生成 -1.0 到 1.0 的值(而不是默认的 0.0 到 1.0 的值)。雅达利RAM观察结果(形状为

(128, )的1D空间)是零均值的(值介于-1.0和1.0之间)。

在所有其他情况下,不会使用预处理器,环境中的原始观测数据将直接发送到您的模型中。

默认模型配置设置#

在接下来的段落中,我们将首先描述 RLlib 的默认行为,即自动构建模型(如果你没有设置自定义模型),然后深入探讨如何通过更改这些设置或编写自己的模型类来自定义你的模型。

默认情况下,RLlib 将使用以下配置设置您的模型。这些包括 FullyConnectedNetworks 的选项(fcnet_hiddens 和 fcnet_activation)、VisionNetworks 的选项(conv_filters 和 conv_activation)、自动 RNN 包装、自动注意力(GTrXL)包装,以及一些针对 Atari 环境的特殊选项:

MODEL_DEFAULTS: ModelConfigDict = {

# Experimental flag.

# If True, user specified no preprocessor to be created

# (via config._disable_preprocessor_api=True). If True, observations

# will arrive in model as they are returned by the env.

"_disable_preprocessor_api": False,

# Experimental flag.

# If True, RLlib will no longer flatten the policy-computed actions into

# a single tensor (for storage in SampleCollectors/output files/etc..),

# but leave (possibly nested) actions as-is. Disabling flattening affects:

# - SampleCollectors: Have to store possibly nested action structs.

# - Models that have the previous action(s) as part of their input.

# - Algorithms reading from offline files (incl. action information).

"_disable_action_flattening": False,

# === Built-in options ===

# FullyConnectedNetwork (tf and torch): rllib.models.tf|torch.fcnet.py

# These are used if no custom model is specified and the input space is 1D.

# Number of hidden layers to be used.

"fcnet_hiddens": [256, 256],

# Activation function descriptor.

# Supported values are: "tanh", "relu", "swish" (or "silu", which is the same),

# "linear" (or None).

"fcnet_activation": "tanh",

# Initializer function or class descriptor for encoder weigths.

# Supported values are the initializer names (str), classes or functions listed

# by the frameworks (`tf2``, `torch`). See

# https://pytorch.org/docs/stable/nn.init.html for `torch` and

# https://www.tensorflow.org/api_docs/python/tf/keras/initializers for `tf2`.

# Note, if `None`, the default initializer defined by `torch` or `tf2` is used.

"fcnet_weights_initializer": None,

# Initializer configuration for encoder weights.

# This configuration is passed to the initializer defined in

# `fcnet_weights_initializer`.

"fcnet_weights_initializer_config": None,

# Initializer function or class descriptor for encoder bias.

# Supported values are the initializer names (str), classes or functions listed

# by the frameworks (`tf2``, `torch`). See

# https://pytorch.org/docs/stable/nn.init.html for `torch` and

# https://www.tensorflow.org/api_docs/python/tf/keras/initializers for `tf2`.

# Note, if `None`, the default initializer defined by `torch` or `tf2` is used.

"fcnet_bias_initializer": None,

# Initializer configuration for encoder bias.

# This configuration is passed to the initializer defined in

# `fcnet_bias_initializer`.

"fcnet_bias_initializer_config": None,

# VisionNetwork (tf and torch): rllib.models.tf|torch.visionnet.py

# These are used if no custom model is specified and the input space is 2D.

# Filter config: List of [out_channels, kernel, stride] for each filter.

# Example:

# Use None for making RLlib try to find a default filter setup given the

# observation space.

"conv_filters": None,

# Activation function descriptor.

# Supported values are: "tanh", "relu", "swish" (or "silu", which is the same),

# "linear" (or None).

"conv_activation": "relu",

# Initializer function or class descriptor for CNN encoder kernel.

# Supported values are the initializer names (str), classes or functions listed

# by the frameworks (`tf2``, `torch`). See

# https://pytorch.org/docs/stable/nn.init.html for `torch` and

# https://www.tensorflow.org/api_docs/python/tf/keras/initializers for `tf2`.

# Note, if `None`, the default initializer defined by `torch` or `tf2` is used.

"conv_kernel_initializer": None,

# Initializer configuration for CNN encoder kernel.

# This configuration is passed to the initializer defined in

# `conv_weights_initializer`.

"conv_kernel_initializer_config": None,

# Initializer function or class descriptor for CNN encoder bias.

# Supported values are the initializer names (str), classes or functions listed

# by the frameworks (`tf2``, `torch`). See

# https://pytorch.org/docs/stable/nn.init.html for `torch` and

# https://www.tensorflow.org/api_docs/python/tf/keras/initializers for `tf2`.

# Note, if `None`, the default initializer defined by `torch` or `tf2` is used.

"conv_bias_initializer": None,

# Initializer configuration for CNN encoder bias.

# This configuration is passed to the initializer defined in

# `conv_bias_initializer`.

"conv_bias_initializer_config": None,

# Initializer function or class descriptor for CNN head (pi, Q, V) kernel.

# Supported values are the initializer names (str), classes or functions listed

# by the frameworks (`tf2``, `torch`). See

# https://pytorch.org/docs/stable/nn.init.html for `torch` and

# https://www.tensorflow.org/api_docs/python/tf/keras/initializers for `tf2`.

# Note, if `None`, the default initializer defined by `torch` or `tf2` is used.

"conv_transpose_kernel_initializer": None,

# Initializer configuration for CNN head (pi, Q, V) kernel.

# This configuration is passed to the initializer defined in

# `conv_transpose_weights_initializer`.

"conv_transpose_kernel_initializer_config": None,

# Initializer function or class descriptor for CNN head (pi, Q, V) bias.

# Supported values are the initializer names (str), classes or functions listed

# by the frameworks (`tf2``, `torch`). See

# https://pytorch.org/docs/stable/nn.init.html for `torch` and

# https://www.tensorflow.org/api_docs/python/tf/keras/initializers for `tf2`.

# Note, if `None`, the default initializer defined by `torch` or `tf2` is used.

"conv_transpose_bias_initializer": None,

# Initializer configuration for CNN head (pi, Q, V) bias.

# This configuration is passed to the initializer defined in

# `conv_transpose_bias_initializer`.

"conv_transpose_bias_initializer_config": None,

# Some default models support a final FC stack of n Dense layers with given

# activation:

# - Complex observation spaces: Image components are fed through

# VisionNets, flat Boxes are left as-is, Discrete are one-hot'd, then

# everything is concated and pushed through this final FC stack.

# - VisionNets (CNNs), e.g. after the CNN stack, there may be

# additional Dense layers.

# - FullyConnectedNetworks will have this additional FCStack as well

# (that's why it's empty by default).

"post_fcnet_hiddens": [],

"post_fcnet_activation": "relu",

# Initializer function or class descriptor for head (pi, Q, V) weights.

# Supported values are the initializer names (str), classes or functions listed

# by the frameworks (`tf2``, `torch`). See

# https://pytorch.org/docs/stable/nn.init.html for `torch` and

# https://www.tensorflow.org/api_docs/python/tf/keras/initializers for `tf2`.

# Note, if `None`, the default initializer defined by `torch` or `tf2` is used.

"post_fcnet_weights_initializer": None,

# Initializer configuration for head (pi, Q, V) weights.

# This configuration is passed to the initializer defined in

# `post_fcnet_weights_initializer`.

"post_fcnet_weights_initializer_config": None,

# Initializer function or class descriptor for head (pi, Q, V) bias.

# Supported values are the initializer names (str), classes or functions listed

# by the frameworks (`tf2``, `torch`). See

# https://pytorch.org/docs/stable/nn.init.html for `torch` and

# https://www.tensorflow.org/api_docs/python/tf/keras/initializers for `tf2`.

# Note, if `None`, the default initializer defined by `torch` or `tf2` is used.

"post_fcnet_bias_initializer": None,

# Initializer configuration for head (pi, Q, V) bias.

# This configuration is passed to the initializer defined in

# `post_fcnet_bias_initializer`.

"post_fcnet_bias_initializer_config": None,

# For DiagGaussian action distributions, make the second half of the model

# outputs floating bias variables instead of state-dependent. This only

# has an effect is using the default fully connected net.

"free_log_std": False,

# Whether to skip the final linear layer used to resize the hidden layer

# outputs to size `num_outputs`. If True, then the last hidden layer

# should already match num_outputs.

"no_final_linear": False,

# Whether layers should be shared for the value function.

"vf_share_layers": True,

# == LSTM ==

# Whether to wrap the model with an LSTM.

"use_lstm": False,

# Max seq len for training the LSTM, defaults to 20.

"max_seq_len": 20,

# Size of the LSTM cell.

"lstm_cell_size": 256,

# Whether to feed a_{t-1} to LSTM (one-hot encoded if discrete).

"lstm_use_prev_action": False,

# Whether to feed r_{t-1} to LSTM.

"lstm_use_prev_reward": False,

# Initializer function or class descriptor for LSTM weights.

# Supported values are the initializer names (str), classes or functions listed

# by the frameworks (`tf2``, `torch`). See

# https://pytorch.org/docs/stable/nn.init.html for `torch` and

# https://www.tensorflow.org/api_docs/python/tf/keras/initializers for `tf2`.

# Note, if `None`, the default initializer defined by `torch` or `tf2` is used.

"lstm_weights_initializer": None,

# Initializer configuration for LSTM weights.

# This configuration is passed to the initializer defined in

# `lstm_weights_initializer`.

"lstm_weights_initializer_config": None,

# Initializer function or class descriptor for LSTM bias.

# Supported values are the initializer names (str), classes or functions listed

# by the frameworks (`tf2``, `torch`). See

# https://pytorch.org/docs/stable/nn.init.html for `torch` and

# https://www.tensorflow.org/api_docs/python/tf/keras/initializers for `tf2`.

# Note, if `None`, the default initializer defined by `torch` or `tf2` is used.

"lstm_bias_initializer": None,

# Initializer configuration for LSTM bias.

# This configuration is passed to the initializer defined in

# `lstm_bias_initializer`.

"lstm_bias_initializer_config": None,

# Whether the LSTM is time-major (TxBx..) or batch-major (BxTx..).

"_time_major": False,

# == Attention Nets (experimental: torch-version is untested) ==

# Whether to use a GTrXL ("Gru transformer XL"; attention net) as the

# wrapper Model around the default Model.

"use_attention": False,

# The number of transformer units within GTrXL.

# A transformer unit in GTrXL consists of a) MultiHeadAttention module and

# b) a position-wise MLP.

"attention_num_transformer_units": 1,

# The input and output size of each transformer unit.

"attention_dim": 64,

# The number of attention heads within the MultiHeadAttention units.

"attention_num_heads": 1,

# The dim of a single head (within the MultiHeadAttention units).

"attention_head_dim": 32,

# The memory sizes for inference and training.

"attention_memory_inference": 50,

"attention_memory_training": 50,

# The output dim of the position-wise MLP.

"attention_position_wise_mlp_dim": 32,

# The initial bias values for the 2 GRU gates within a transformer unit.

"attention_init_gru_gate_bias": 2.0,

# Whether to feed a_{t-n:t-1} to GTrXL (one-hot encoded if discrete).

"attention_use_n_prev_actions": 0,

# Whether to feed r_{t-n:t-1} to GTrXL.

"attention_use_n_prev_rewards": 0,

# == Atari ==

# Set to True to enable 4x stacking behavior.

"framestack": True,

# Final resized frame dimension

"dim": 84,

# (deprecated) Converts ATARI frame to 1 Channel Grayscale image

"grayscale": False,

# (deprecated) Changes frame to range from [-1, 1] if true

"zero_mean": True,

# === Options for custom models ===

# Name of a custom model to use

"custom_model": None,

# Extra options to pass to the custom classes. These will be available to

# the Model's constructor in the model_config field. Also, they will be

# attempted to be passed as **kwargs to ModelV2 models. For an example,

# see rllib/models/[tf|torch]/attention_net.py.

"custom_model_config": {},

# Name of a custom action distribution to use.

"custom_action_dist": None,

# Custom preprocessors are deprecated. Please use a wrapper class around

# your environment instead to preprocess observations.

"custom_preprocessor": None,

# === Options for ModelConfigs in RLModules ===

# The latent dimension to encode into.

# Since most RLModules have an encoder and heads, this establishes an agreement

# on the dimensionality of the latent space they share.

# This has no effect for models outside RLModule.

# If None, model_config['fcnet_hiddens'][-1] value will be used to guarantee

# backward compatibility to old configs. This yields different models than past

# versions of RLlib.

"encoder_latent_dim": None,

# Whether to always check the inputs and outputs of RLlib's default models for

# their specifications. Input specifications are checked on failed forward passes

# of the models regardless of this flag. If this flag is set to `True`, inputs and

# outputs are checked on every call. This leads to a slow-down and should only be

# used for debugging. Note that this flag is only relevant for instances of

# RLlib's Model class. These are commonly generated from ModelConfigs in RLModules.

"always_check_shapes": False,

# Deprecated keys:

# Use `lstm_use_prev_action` or `lstm_use_prev_reward` instead.

"lstm_use_prev_action_reward": DEPRECATED_VALUE,

# Deprecated in anticipation of RLModules API

"_use_default_native_models": DEPRECATED_VALUE,

}

上面的字典(或其覆盖的子集)通过主配置字典中的 model 键传递给算法,如下所示:

algo_config = {

# All model-related settings go into this sub-dict.

"model": {

# By default, the MODEL_DEFAULTS dict above will be used.

# Change individual keys in that dict by overriding them, e.g.

"fcnet_hiddens": [512, 512, 512],

"fcnet_activation": "relu",

},

# ... other Algorithm config keys, e.g. "lr" ...

"lr": 0.00001,

}

内置模型#

在预处理(如果适用)原始环境输出后,处理后的观察结果通过策略的模型进行处理。如果没有指定自定义模型(参见下文如何自定义模型),RLlib 将根据简单的启发式方法选择一个默认模型:

这些默认模型类型可以通过算法配置中的 model 配置键进一步配置(如上所述)。可用设置在 上面列出 ,并且在 模型目录文件 中也有文档说明。

请注意,对于视觉网络的情况,如果您的环境观测具有自定义大小,您可能需要配置 conv_filters。例如,对于 42x42 的观测,可以使用 "model": {"dim": 42, "conv_filters": [[16, [4, 4], 2], [32, [4, 4], 2], [512, [11, 11], 1]]}。因此,请始终确保最后一个 Conv2D 输出的形状为 [B, 1, 1, X]``(对于 PyTorch 为 ``[B, X, 1, 1]),其中 B=批次,X=最后一个 Conv2D 层的过滤器数量,以便 RLlib 可以将其展平。如果情况并非如此,将会抛出一个信息性的错误。

内置的自动-LSTM,以及自动-Attention 包装器#

此外,如果您在模型配置中设置 "use_lstm": True 或 "use_attention": True ,您的模型输出将分别由一个 LSTM 单元(TF 或 Torch)或一个注意力(GTrXL)网络(TF 或 Torch)进一步处理。更广泛地说,RLlib 支持在其所有策略梯度算法(A3C、PPO、PG、IMPALA)中使用循环/注意力模型,并且必要的序列处理支持已内置于其策略评估工具中。

关于如何使用额外的配置键来更详细地配置这两个自动包装器,请参见上文(例如,您可以通过 lstm_cell_size 指定 LSTM 层的尺寸,或通过 attention_dim 指定注意力维度)。

对于完全自定义的 RNN/LSTM/Attention-Net 设置,请参见下面的 循环模型 和 注意力网络/Transformer 部分。

备注

同时使用自动包装器(lstm 和 attention)是不可能的。这样做会导致错误。

自定义预处理器和模型#

自定义预处理器和环境过滤器#

警告

自定义预处理器已被完全弃用,因为它们有时会与处理复杂观察空间的内置预处理器发生冲突。请改用 包装类 围绕您的环境,而不是预处理器。请注意,上述内置的 默认 预处理器仍将被使用,不会被弃用。

与其使用已弃用的自定义预处理器,您应该使用 gym.Wrappers 来预处理您的环境输出(观察和奖励),以及在将模型计算的动作发送回环境之前对其进行预处理。

例如,要操作你的环境的观察或奖励,请执行以下操作:

import gymnasium as gym

from ray.rllib.utils.numpy import one_hot

class OneHotEnv(gym.core.ObservationWrapper):

# Override `observation` to custom process the original observation

# coming from the env.

def observation(self, observation):

# E.g. one-hotting a float obs [0.0, 5.0[.

return one_hot(observation, depth=5)

class ClipRewardEnv(gym.core.RewardWrapper):

def __init__(self, env, min_, max_):

super().__init__(env)

self.min = min_

self.max = max_

# Override `reward` to custom process the original reward coming

# from the env.

def reward(self, reward):

# E.g. simple clipping between min and max.

return np.clip(reward, self.min, self.max)

自定义模型:实现自己的前向逻辑#

如果你想提供自己的模型逻辑(而不是使用 RLlib 的内置默认值),你可以子类化 ``TFModelV2``(适用于 TensorFlow)或 ``TorchModelV2``(适用于 PyTorch),然后在配置中注册并指定你的子类,如下所示:

自定义 TensorFlow 模型#

自定义 TensorFlow 模型应继承 TFModelV2 并实现 __init__() 和 forward() 方法。forward() 接受一个张量输入的字典(映射字符串到张量类型),其键和值取决于模型的视图需求。通常,此输入字典仅包含当前观察 obs 和一个 is_training 布尔标志,以及一个可选的 RNN 状态列表。forward() 应返回模型输出(大小为 self.num_outputs),以及 - 如果适用 - 一个新的内部状态列表(在 RNN 或注意力网络的情况下)。您还可以重写模型的额外方法,例如 value_function,以实现自定义价值分支。

可以通过 TFModelV2.custom_loss 方法添加额外的监督/自监督损失:

- class ray.rllib.models.tf.tf_modelv2.TFModelV2(obs_space: gymnasium.spaces.Space, action_space: gymnasium.spaces.Space, num_outputs: int, model_config: dict, name: str)[源代码]

ModelV2 的 TF 版本,应包含一个 tf keras 模型。

请注意,除非你在子类中实现 forward(),否则这个类本身不是一个有效的模型。

- context() AbstractContextManager[源代码]

返回当前 TF 图的上下文管理器。

- update_ops() List[numpy.array | jnp.ndarray | tf.Tensor | torch.Tensor][源代码]

返回此模型的更新操作列表。

例如,这应该包括任何 BatchNorm 更新操作。

- register_variables(variables: List[numpy.array | jnp.ndarray | tf.Tensor | torch.Tensor]) None[源代码]

将给定的变量列表注册到此模型中。

- variables(as_dict: bool = False) List[numpy.array | jnp.ndarray | tf.Tensor | torch.Tensor] | Dict[str, numpy.array | jnp.ndarray | tf.Tensor | torch.Tensor][源代码]

返回此模型的变量列表(或字典)。

- 参数:

as_dict – 变量是否应作为字典值返回(使用描述性的字符串键)。

- 返回:

此 ModelV2 的所有变量的列表(如果

as_dict为 True,则为字典)。

- trainable_variables(as_dict: bool = False) List[numpy.array | jnp.ndarray | tf.Tensor | torch.Tensor] | Dict[str, numpy.array | jnp.ndarray | tf.Tensor | torch.Tensor][源代码]

返回此模型的可训练变量列表。

- 参数:

as_dict – 变量是否应作为字典值返回(使用描述性键)。

- 返回:

此 ModelV2 的所有可训练(tf)/requires_grad(torch)变量的列表(如果

as_dict为 True,则为字典)。

一旦实现,您的 TF 模型就可以被注册并在内置默认模型的地方使用:

import ray

import ray.rllib.algorithms.ppo as ppo

from ray.rllib.models import ModelCatalog

from ray.rllib.models.tf.tf_modelv2 import TFModelV2

class MyModelClass(TFModelV2):

def __init__(self, obs_space, action_space, num_outputs, model_config, name): ...

def forward(self, input_dict, state, seq_lens): ...

def value_function(self): ...

ModelCatalog.register_custom_model("my_tf_model", MyModelClass)

ray.init()

algo = ppo.PPO(env="CartPole-v1", config={

"model": {

"custom_model": "my_tf_model",

# Extra kwargs to be passed to your model's c'tor.

"custom_model_config": {},

},

})

查看 keras 模型示例 以获取一个完整的 TF 自定义模型示例。

更多关于如何实现自定义元组/字典处理模型(也可查看 这个测试案例)、自定义RNN、自定义模型API(基于默认模型之上)的示例和解释将在下面进一步介绍。

自定义 PyTorch 模型#

同样地,你可以通过子类化 TorchModelV2 来创建和注册自定义的 PyTorch 模型,并实现 __init__() 和 forward() 方法。forward() 方法接收一个张量输入的字典(映射字符串到 PyTorch 张量类型),其键和值取决于模型的视图需求。通常,该字典仅包含当前观察 obs 和一个 is_training 布尔标志,以及一个可选的 RNN 状态列表。forward() 方法应返回模型输出(大小为 self.num_outputs),以及 - 如果适用 - 一个新的内部状态列表(在 RNN 或注意力网络的情况下)。你还可以重写模型的额外方法,如 value_function,以实现自定义的价值分支。

可以通过 TorchModelV2.custom_loss 方法添加额外的监督/自监督损失:

查看这些 全连接 、 卷积 和 循环 的 torch 模型示例。

- class ray.rllib.models.torch.torch_modelv2.TorchModelV2(obs_space: gymnasium.spaces.Space, action_space: gymnasium.spaces.Space, num_outputs: int, model_config: dict, name: str)[源代码]

ModelV2 的 Torch 版本。

请注意,除非你从 nn.Module 继承并在子类中实现 forward(),否则这个类本身不是一个有效的模型。

- variables(as_dict: bool = False) List[numpy.array | jnp.ndarray | tf.Tensor | torch.Tensor] | Dict[str, numpy.array | jnp.ndarray | tf.Tensor | torch.Tensor][源代码]

返回此模型的变量列表(或字典)。

- 参数:

as_dict – 变量是否应作为字典值返回(使用描述性的字符串键)。

- 返回:

此 ModelV2 的所有变量的列表(如果

as_dict为 True,则为字典)。

- trainable_variables(as_dict: bool = False) List[numpy.array | jnp.ndarray | tf.Tensor | torch.Tensor] | Dict[str, numpy.array | jnp.ndarray | tf.Tensor | torch.Tensor][源代码]

返回此模型的可训练变量列表。

- 参数:

as_dict – 变量是否应作为字典值返回(使用描述性键)。

- 返回:

此 ModelV2 的所有可训练(tf)/requires_grad(torch)变量的列表(如果

as_dict为 True,则为字典)。

一旦实现,您的 PyTorch 模型就可以被注册并在内置模型的地方使用:

import torch.nn as nn

import ray

from ray.rllib.algorithms import ppo

from ray.rllib.models import ModelCatalog

from ray.rllib.models.torch.torch_modelv2 import TorchModelV2

class CustomTorchModel(TorchModelV2):

def __init__(self, obs_space, action_space, num_outputs, model_config, name): ...

def forward(self, input_dict, state, seq_lens): ...

def value_function(self): ...

ModelCatalog.register_custom_model("my_torch_model", CustomTorchModel)

ray.init()

algo = ppo.PPO(env="CartPole-v1", config={

"framework": "torch",

"model": {

"custom_model": "my_torch_model",

# Extra kwargs to be passed to your model's c'tor.

"custom_model_config": {},

},

})

查看 torch 模型示例 以获取如何构建自定义 PyTorch 模型(包括循环模型)的各种示例。

更多关于如何实现自定义元组/字典处理模型(也可查看 这个测试案例)、自定义RNN、自定义模型API(基于默认模型之上)的示例和解释将在下面进一步介绍。

实现自定义循环网络#

与其使用 use_lstm: True 选项,可能更倾向于使用自定义的循环模型。这提供了对LSTM输出后处理的更多控制,并且还可以允许使用多个LSTM单元来处理输入的不同部分。对于RNN模型,建议子类化 RecurrentNetwork``(可以是 `TF <https://github.com/ray-project/ray/blob/master/rllib/models/tf/recurrent_net.py>`__ 或 `PyTorch <https://github.com/ray-project/ray/blob/master/rllib/models/torch/recurrent_net.py>`__ 版本),然后实现 ``__init__()、get_initial_state() 和 forward_rnn()。

- class ray.rllib.models.tf.recurrent_net.RecurrentNetwork(obs_space: gymnasium.spaces.Space, action_space: gymnasium.spaces.Space, num_outputs: int, model_config: dict, name: str)[源代码]#

帮助类,用于简化使用 TFModelV2 实现 RNN 模型。

你可以实现 forward_rnn() 而不是 forward(),forward_rnn() 接受已经添加了时间维度的批次。

以下是一个子类

MyRNNClass(RecurrentNetwork)的示例实现:def __init__(self, *args, **kwargs): super(MyModelClass, self).__init__(*args, **kwargs) cell_size = 256 # Define input layers input_layer = tf.keras.layers.Input( shape=(None, obs_space.shape[0])) state_in_h = tf.keras.layers.Input(shape=(256, )) state_in_c = tf.keras.layers.Input(shape=(256, )) seq_in = tf.keras.layers.Input(shape=(), dtype=tf.int32) # Send to LSTM cell lstm_out, state_h, state_c = tf.keras.layers.LSTM( cell_size, return_sequences=True, return_state=True, name="lstm")( inputs=input_layer, mask=tf.sequence_mask(seq_in), initial_state=[state_in_h, state_in_c]) output_layer = tf.keras.layers.Dense(...)(lstm_out) # Create the RNN model self.rnn_model = tf.keras.Model( inputs=[input_layer, seq_in, state_in_h, state_in_c], outputs=[output_layer, state_h, state_c]) self.rnn_model.summary()

- __init__(obs_space: gymnasium.spaces.Space, action_space: gymnasium.spaces.Space, num_outputs: int, model_config: dict, name: str)#

初始化一个 TFModelV2 实例。

以下是一个子类

MyModelClass(TFModelV2)的实现示例:def __init__(self, *args, **kwargs): super(MyModelClass, self).__init__(*args, **kwargs) input_layer = tf.keras.layers.Input(...) hidden_layer = tf.keras.layers.Dense(...)(input_layer) output_layer = tf.keras.layers.Dense(...)(hidden_layer) value_layer = tf.keras.layers.Dense(...)(hidden_layer) self.base_model = tf.keras.Model( input_layer, [output_layer, value_layer])

- get_initial_state() List[numpy.array | jnp.ndarray | tf.Tensor | torch.Tensor][源代码]#

获取模型的初始递归状态值。

- 返回:

np.array 对象的列表,如果有的话

MyRNNClass示例的实现:def get_initial_state(self): return [ np.zeros(self.cell_size, np.float32), np.zeros(self.cell_size, np.float32), ]

- forward_rnn(inputs: numpy.array | jnp.ndarray | tf.Tensor | torch.Tensor, state: List[numpy.array | jnp.ndarray | tf.Tensor | torch.Tensor], seq_lens: numpy.array | jnp.ndarray | tf.Tensor | torch.Tensor) Tuple[numpy.array | jnp.ndarray | tf.Tensor | torch.Tensor, List[numpy.array | jnp.ndarray | tf.Tensor | torch.Tensor]][源代码]#

使用给定的输入张量和状态调用模型。

- 参数:

inputs – 形状为 [B, T, obs_size] 的观测张量。

state – 状态张量列表,每个张量的形状为 [B, T, size]。

seq_lens – 1d 张量,保存输入序列的长度。

- 返回:

模型输出张量的形状为 [B, T, num_outputs],以及每个新状态张量的形状为 [B, size] 的列表。

- 返回类型:

(outputs, new_state)

MyRNNClass示例的实现:def forward_rnn(self, inputs, state, seq_lens): model_out, h, c = self.rnn_model([inputs, seq_lens] + state) return model_out, [h, c]

注意,进入 forward_rnn 的 inputs 参数已经是一个时间排序的单一张量(不是 input_dict!),形状为 (B x T x ...)。如果你进一步想要自定义并且需要更直接地访问完整的(非时间排序的) input_dict,你也可以直接重写你的模型的 forward 方法(就像你对非RNN的ModelV2所做的那样)。不过在这种情况下,你需要负责更改输入并添加时间维度到传入的数据(通常你只需要重塑)。

你可以查看 rnn_model.py 模型作为实现你自己的模型(无论是TF还是Torch)的例子。

实现自定义注意力网络#

类似于上面描述的RNN情况,你也可以实现自己的基于注意力的网络,而不是在你的模型配置中使用 use_attention: True 标志。

查看 RLlib 的 GTrXL (Attention Net) 实现(适用于 TF 和 PyTorch)以更好地了解如何编写此类型的自定义模型。这些是我们用作包装器的模型,当 use_attention=True 时使用。

这个 测试案例 确认了它们在 PPO 和 IMPALA 中的学习能力。

批量归一化#

你可以使用 tf.layers.batch_normalization(x, training=input_dict["is_training"]) 来为你的自定义模型添加批量归一化层。请参见这个 代码示例 。RLlib 会在优化过程中自动运行批量归一化层的更新操作(参见 tf_policy.py 和 multi_gpu_learner_thread.py 以了解这些更新的具体处理方式)。

如果 RLlib 未能正确检测到您的自定义模型的更新操作,您可以重写 update_ops() 方法以返回要运行的操作列表以进行更新。

自定义模型API(基于默认或自定义模型)#

到目前为止,我们讨论了a) RLlib内置的默认模型,如果你在算法的配置中没有指定任何内容,这些模型会自动提供;以及b) 自定义模型,通过这些模型你可以定义任何任意的正向传递。

另一个典型的需要自定义模型的场景是,添加算法学习所需的新API,例如在策略模型之上添加一个Q值计算头。为了扩展模型的API,只需在您的TF-或TorchModelV2子类中定义并实现一个新方法(例如 get_q_values())。

你现在可以将这个新API包装在RLlib的默认模型周围,或者包装在你的自定义(覆盖``forward()``方法的)模型类周围。以下是两个示例,展示了如何做到这一点:

Q-head API: 在默认的 RLlib 模型上添加一个 dueling 层。

以下代码向自动选择的默认模型(例如,如果观察空间是1D Box或Discrete,则为``FullyConnectedNetwork``)添加了一个``get_q_values()``方法:

class DuelingQModel(TFModelV2): # or: TorchModelV2

"""A simple, hard-coded dueling head model."""

def __init__(self, obs_space, action_space, num_outputs, model_config, name):

# Pass num_outputs=None into super constructor (so that no action/

# logits output layer is built).

# Alternatively, you can pass in num_outputs=[last layer size of

# config[model][fcnet_hiddens]] AND set no_last_linear=True, but

# this seems more tedious as you will have to explain users of this

# class that num_outputs is NOT the size of your Q-output layer.

super(DuelingQModel, self).__init__(

obs_space, action_space, None, model_config, name

)

# Now: self.num_outputs contains the last layer's size, which

# we can use to construct the dueling head (see torch: SlimFC

# below).

# Construct advantage head ...

self.A = tf.keras.layers.Dense(num_outputs)

# torch:

# self.A = SlimFC(

# in_size=self.num_outputs, out_size=num_outputs)

# ... and value head.

self.V = tf.keras.layers.Dense(1)

# torch:

# self.V = SlimFC(in_size=self.num_outputs, out_size=1)

def get_q_values(self, underlying_output):

# Calculate q-values following dueling logic:

v = self.V(underlying_output) # value

a = self.A(underlying_output) # advantages (per action)

advantages_mean = tf.reduce_mean(a, 1)

advantages_centered = a - tf.expand_dims(advantages_mean, 1)

return v + advantages_centered # q-values

现在,对于需要使用此模型API才能正常工作的算法(例如DQN),您可以使用以下代码通过 ModelCatalog.get_model_v2 工厂函数构建完整的最终模型(代码在此):

my_dueling_model = ModelCatalog.get_model_v2(

obs_space=obs_space,

action_space=action_space,

num_outputs=action_space.n,

model_config=MODEL_DEFAULTS,

framework=args.framework,

# Providing the `model_interface` arg will make the factory

# wrap the chosen default model with our new model API class

# (DuelingQModel). This way, both `forward` and `get_q_values`

# are available in the returned class.

model_interface=DuelingQModel

if args.framework != "torch"

else TorchDuelingQModel,

name="dueling_q_model",

)

通过上述构建的模型对象,你可以通过直接调用 my_dueling_model 来获取底层的中间输出(在 dueling head 之前)(out = my_dueling_model([input_dict])),然后将 out 传递到你的自定义 get_q_values 方法中:q_values = my_dueling_model.get_q_values(out)。

SAC 的单一 Q 值 API。

我们上面的DQN模型接受一个观察并输出每个(离散)动作的一个Q值。连续的SAC——另一方面——使用计算单个Q值的模型,仅针对给定观察和特定动作的单个(连续)动作。

让我们来看看如何构建这个API并将其封装在一个自定义模型周围:

class TorchContActionQModel(TorchModelV2):

"""A simple, q-value-from-cont-action model (for e.g. SAC type algos)."""

def __init__(self, obs_space, action_space, num_outputs, model_config, name):

nn.Module.__init__(self)

# Pass num_outputs=None into super constructor (so that no action/

# logits output layer is built).

# Alternatively, you can pass in num_outputs=[last layer size of

# config[model][fcnet_hiddens]] AND set no_last_linear=True, but

# this seems more tedious as you will have to explain users of this

# class that num_outputs is NOT the size of your Q-output layer.

super(TorchContActionQModel, self).__init__(

obs_space, action_space, None, model_config, name

)

# Now: self.num_outputs contains the last layer's size, which

# we can use to construct the single q-value computing head.

# Nest an RLlib FullyConnectedNetwork (torch or tf) into this one here

# to be used for Q-value calculation.

# Use the current value of self.num_outputs, which is the wrapped

# model's output layer size.

combined_space = Box(-1.0, 1.0, (self.num_outputs + action_space.shape[0],))

self.q_head = TorchFullyConnectedNetwork(

combined_space, action_space, 1, model_config, "q_head"

)

# Missing here: Probably still have to provide action output layer

# and value layer and make sure self.num_outputs is correctly set.

def get_single_q_value(self, underlying_output, action):

# Calculate the q-value after concating the underlying output with

# the given action.

input_ = torch.cat([underlying_output, action], dim=-1)

# Construct a simple input_dict (needed for self.q_head as it's an

# RLlib ModelV2).

input_dict = {"obs": input_}

# Ignore state outputs.

q_values, _ = self.q_head(input_dict)

return q_values

现在,对于需要使用此模型API才能正常工作的算法(例如SAC),您可以使用以下代码通过 ModelCatalog.get_model_v2 工厂函数构建完整的最终模型(代码在此):

my_cont_action_q_model = ModelCatalog.get_model_v2(

obs_space=obs_space,

action_space=action_space,

num_outputs=2,

model_config=MODEL_DEFAULTS,

framework=args.framework,

# Providing the `model_interface` arg will make the factory

# wrap the chosen default model with our new model API class

# (DuelingQModel). This way, both `forward` and `get_q_values`

# are available in the returned class.

model_interface=ContActionQModel

if args.framework != "torch"

else TorchContActionQModel,

name="cont_action_q_model",

)

通过上述构建的模型对象,你可以通过直接调用 my_cont_action_q_model 来获取底层的中间输出(在q-head之前)(out = my_cont_action_q_model([input_dict])),然后将 out 和一些动作传递到你的自定义 get_single_q_value 方法中:q_value = my_cont_action_q_model.get_signle_q_value(out, action)。

构建自定义模型的更多示例#

适用于元组观察空间的多输入模型(用于PPO)

RLlib 对 Tuple 和 Dict 空间的默认预处理器是将传入的观察结果展平为一个扁平的 1D 数组,然后选择一个全连接网络(默认情况下)来处理这个展平的向量。如果你的观察结果中只有 1D Box 或 Discrete/MultiDiscrete 子空间,这通常是可以的。

然而,如果你有一个复杂的观察空间,其中包含一个或多个图像组件(除了1D Box和离散空间)。你可能希望使用一些卷积网络对每个图像组件进行预处理,然后将它们的输出与剩余的非图像(扁平)输入(1D Box和离散/独热组件)连接起来。

看看这个模型示例,它正是这样做的:

@OldAPIStack

class ComplexInputNetwork(TFModelV2):

"""TFModelV2 concat'ing CNN outputs to flat input(s), followed by FC(s).

Note: This model should be used for complex (Dict or Tuple) observation

spaces that have one or more image components.

The data flow is as follows:

`obs` (e.g. Tuple[img0, img1, discrete0]) -> `CNN0 + CNN1 + ONE-HOT`

`CNN0 + CNN1 + ONE-HOT` -> concat all flat outputs -> `out`

`out` -> (optional) FC-stack -> `out2`

`out2` -> action (logits) and vaulue heads.

"""

def __init__(self, obs_space, action_space, num_outputs, model_config, name):

self.original_space = (

obs_space.original_space

if hasattr(obs_space, "original_space")

else obs_space

)

self.processed_obs_space = (

self.original_space

if model_config.get("_disable_preprocessor_api")

else obs_space

)

super().__init__(

self.original_space, action_space, num_outputs, model_config, name

)

self.flattened_input_space = flatten_space(self.original_space)

# Build the CNN(s) given obs_space's image components.

self.cnns = {}

self.one_hot = {}

self.flatten_dims = {}

self.flatten = {}

concat_size = 0

for i, component in enumerate(self.flattened_input_space):

# Image space.

if len(component.shape) == 3 and isinstance(component, Box):

config = {

"conv_filters": model_config["conv_filters"]

if "conv_filters" in model_config

else get_filter_config(component.shape),

"conv_activation": model_config.get("conv_activation"),

"post_fcnet_hiddens": [],

}

self.cnns[i] = ModelCatalog.get_model_v2(

component,

action_space,

num_outputs=None,

model_config=config,

framework="tf",

name="cnn_{}".format(i),

)

concat_size += int(self.cnns[i].num_outputs)

# Discrete|MultiDiscrete inputs -> One-hot encode.

elif isinstance(component, (Discrete, MultiDiscrete)):

if isinstance(component, Discrete):

size = component.n

else:

size = np.sum(component.nvec)

config = {

"fcnet_hiddens": model_config["fcnet_hiddens"],

"fcnet_activation": model_config.get("fcnet_activation"),

"post_fcnet_hiddens": [],

}

self.one_hot[i] = ModelCatalog.get_model_v2(

Box(-1.0, 1.0, (size,), np.float32),

action_space,

num_outputs=None,

model_config=config,

framework="tf",

name="one_hot_{}".format(i),

)

concat_size += int(self.one_hot[i].num_outputs)

# Everything else (1D Box).

else:

size = int(np.prod(component.shape))

config = {

"fcnet_hiddens": model_config["fcnet_hiddens"],

"fcnet_activation": model_config.get("fcnet_activation"),

"post_fcnet_hiddens": [],

}

self.flatten[i] = ModelCatalog.get_model_v2(

Box(-1.0, 1.0, (size,), np.float32),

action_space,

num_outputs=None,

model_config=config,

framework="tf",

name="flatten_{}".format(i),

)

self.flatten_dims[i] = size

concat_size += int(self.flatten[i].num_outputs)

# Optional post-concat FC-stack.

post_fc_stack_config = {

"fcnet_hiddens": model_config.get("post_fcnet_hiddens", []),

"fcnet_activation": model_config.get("post_fcnet_activation", "relu"),

}

self.post_fc_stack = ModelCatalog.get_model_v2(

Box(float("-inf"), float("inf"), shape=(concat_size,), dtype=np.float32),

self.action_space,

None,

post_fc_stack_config,

framework="tf",

name="post_fc_stack",

)

# Actions and value heads.

self.logits_and_value_model = None

self._value_out = None

if num_outputs:

# Action-distribution head.

concat_layer = tf.keras.layers.Input((self.post_fc_stack.num_outputs,))

logits_layer = tf.keras.layers.Dense(

num_outputs,

activation=None,

kernel_initializer=normc_initializer(0.01),

name="logits",

)(concat_layer)

# Create the value branch model.

value_layer = tf.keras.layers.Dense(

1,

activation=None,

kernel_initializer=normc_initializer(0.01),

name="value_out",

)(concat_layer)

self.logits_and_value_model = tf.keras.models.Model(

concat_layer, [logits_layer, value_layer]

)

else:

self.num_outputs = self.post_fc_stack.num_outputs

@override(ModelV2)

def forward(self, input_dict, state, seq_lens):

if SampleBatch.OBS in input_dict and "obs_flat" in input_dict:

orig_obs = input_dict[SampleBatch.OBS]

else:

orig_obs = restore_original_dimensions(

input_dict[SampleBatch.OBS], self.processed_obs_space, tensorlib="tf"

)

# Push image observations through our CNNs.

outs = []

for i, component in enumerate(tree.flatten(orig_obs)):

if i in self.cnns:

cnn_out, _ = self.cnns[i](SampleBatch({SampleBatch.OBS: component}))

outs.append(cnn_out)

elif i in self.one_hot:

if "int" in component.dtype.name:

one_hot_in = {

SampleBatch.OBS: one_hot(

component, self.flattened_input_space[i]

)

}

else:

one_hot_in = {SampleBatch.OBS: component}

one_hot_out, _ = self.one_hot[i](SampleBatch(one_hot_in))

outs.append(one_hot_out)

else:

nn_out, _ = self.flatten[i](

SampleBatch(

{

SampleBatch.OBS: tf.cast(

tf.reshape(component, [-1, self.flatten_dims[i]]),

tf.float32,

)

}

)

)

outs.append(nn_out)

# Concat all outputs and the non-image inputs.

out = tf.concat(outs, axis=1)

# Push through (optional) FC-stack (this may be an empty stack).

out, _ = self.post_fc_stack(SampleBatch({SampleBatch.OBS: out}))

# No logits/value branches.

if not self.logits_and_value_model:

return out, []

# Logits- and value branches.

logits, values = self.logits_and_value_model(out)

self._value_out = tf.reshape(values, [-1])

return logits, []

@override(ModelV2)

def value_function(self):

return self._value_out

使用轨迹视图API:将最后n个动作(或奖励或观察)作为输入传递给自定义模型

在学习过程中,有时不仅查看当前的观察结果来计算下一个动作,而且查看过去的 n 个观察结果是有帮助的。在其他情况下,您可能还想将最近的奖励或动作提供给模型(就像我们在指定 use_lstm=True 和 lstm_use_prev_action/reward=True 时使用的 LSTM 包装器那样)。即使在不使用部分可观察环境(PO-MDPs)和/或 RNN/注意力模型的情况下,这些也可能是有用的,例如在经典的 Atari 运行中,我们通常使用最后四个观察到的图像的帧堆叠。

轨迹视图API允许您的模型指定这些更复杂的“视图需求”。

这是一个简单的(非RNN/Attention)模型示例,它以最后3个观测值作为输入(非常类似于在Atari环境中学习时推荐的“帧堆叠”方法):

class FrameStackingCartPoleModel(TFModelV2):

"""A simple FC model that takes the last n observations as input."""

def __init__(

self, obs_space, action_space, num_outputs, model_config, name, num_frames=3

):

super(FrameStackingCartPoleModel, self).__init__(

obs_space, action_space, None, model_config, name

)

self.num_frames = num_frames

self.num_outputs = num_outputs

# Construct actual (very simple) FC model.

assert len(obs_space.shape) == 1

obs = tf.keras.layers.Input(shape=(self.num_frames, obs_space.shape[0]))

obs_reshaped = tf.keras.layers.Reshape([obs_space.shape[0] * self.num_frames])(

obs

)

rewards = tf.keras.layers.Input(shape=(self.num_frames))

rewards_reshaped = tf.keras.layers.Reshape([self.num_frames])(rewards)

actions = tf.keras.layers.Input(shape=(self.num_frames, self.action_space.n))

actions_reshaped = tf.keras.layers.Reshape([action_space.n * self.num_frames])(

actions

)

input_ = tf.keras.layers.Concatenate(axis=-1)(

[obs_reshaped, actions_reshaped, rewards_reshaped]

)

layer1 = tf.keras.layers.Dense(256, activation=tf.nn.relu)(input_)

layer2 = tf.keras.layers.Dense(256, activation=tf.nn.relu)(layer1)

out = tf.keras.layers.Dense(self.num_outputs)(layer2)

values = tf.keras.layers.Dense(1)(layer1)

self.base_model = tf.keras.models.Model([obs, actions, rewards], [out, values])

self._last_value = None

self.view_requirements["prev_n_obs"] = ViewRequirement(

data_col="obs", shift="-{}:0".format(num_frames - 1), space=obs_space

)

self.view_requirements["prev_n_rewards"] = ViewRequirement(

data_col="rewards", shift="-{}:-1".format(self.num_frames)

)

self.view_requirements["prev_n_actions"] = ViewRequirement(

data_col="actions",

shift="-{}:-1".format(self.num_frames),

space=self.action_space,

)

def forward(self, input_dict, states, seq_lens):

obs = tf.cast(input_dict["prev_n_obs"], tf.float32)

rewards = tf.cast(input_dict["prev_n_rewards"], tf.float32)

actions = one_hot(input_dict["prev_n_actions"], self.action_space)

out, self._last_value = self.base_model([obs, actions, rewards])

return out, []

def value_function(self):

return tf.squeeze(self._last_value, -1)

上述模型的 PyTorch 版本也在 同一个文件中提供。

自定义操作分布#

类似于自定义模型和预处理器,您还可以如下指定一个自定义动作分布类。动作分布类传递了对 model 的引用,您可以使用它来访问 model.model_config 或其他模型属性。这通常用于实现 自回归动作输出。

import ray

import ray.rllib.algorithms.ppo as ppo

from ray.rllib.models import ModelCatalog

from ray.rllib.models.preprocessors import Preprocessor

class MyActionDist(ActionDistribution):

@staticmethod

def required_model_output_shape(action_space, model_config):

return 7 # controls model output feature vector size

def __init__(self, inputs, model):

super(MyActionDist, self).__init__(inputs, model)

assert model.num_outputs == 7

def sample(self): ...

def logp(self, actions): ...

def entropy(self): ...

ModelCatalog.register_custom_action_dist("my_dist", MyActionDist)

ray.init()

algo = ppo.PPO(env="CartPole-v1", config={

"model": {

"custom_action_dist": "my_dist",

},

})

监督模型损失#

你可以通过自定义模型将监督损失混合到任何 RLlib 算法中。例如,你可以在专家经验上添加模仿学习损失,或在模型内部添加自监督的自动编码器损失。这些损失可以定义在策略评估输入上,或者从 离线存储 读取的数据上。

TensorFlow:要向自定义 TF 模型添加监督损失,您需要重写 custom_loss() 方法。该方法接收算法的现有策略损失,您可以在返回之前向其添加自己的监督损失。为了调试,您还可以在 metrics() 方法中返回标量张量的字典。以下是一个 可运行的示例,展示了如何在 CartPole 训练中添加模仿损失,该损失定义在 离线数据集 上。

PyTorch:在自定义的 PyTorch 模型中,没有明确的 API 来添加损失。但是,你可以直接在策略定义中修改损失。与 TF 模型类似,可以通过创建输入读取器并在损失前向传递中调用 reader.next() 来整合离线数据集。

自监督模型损失#

你也可以使用 custom_loss() API 来添加自监督损失,例如 VAE 重构损失和 L2 正则化。

可变长度 / 复杂观察空间#

RLlib 支持复杂和可变长度的观测空间,包括 gym.spaces.Tuple、gym.spaces.Dict 和 rllib.utils.spaces.Repeated。这些空间的处理对用户来说是透明的。RLlib 内部会插入预处理器,为重复元素插入填充,在传输过程中将复杂观测展平为固定大小的向量,并在将其发送到模型之前将向量解包为结构化张量。展平后的观测可以通过 input_dict["obs_flat"] 访问,解包后的观测可以通过 input_dict["obs"] 访问。

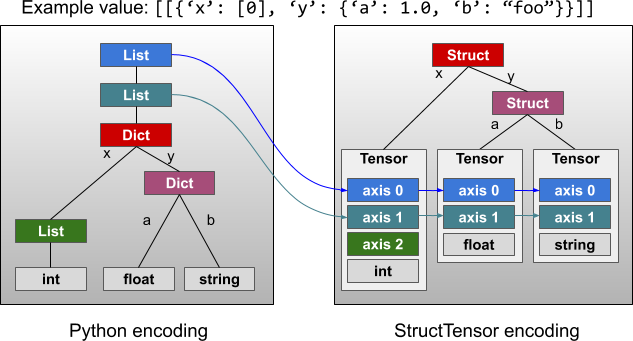

要启用结构观察的批处理,RLlib 以 StructTensor 格式 解包它们。简而言之,重复的字段被“下推”,并成为张量批的外部维度,如图中所示的 StructTensor RFC 中的图示。

- 关于复杂的观测空间,请参阅:

一个使用 重复结构字段 的自定义环境和模型。

Repeated space 的 pydoc。

批处理的 重复值张量 的 pydoc。

单元测试 用于 Tuple 和 Dict 空间。

可变长度 / 参数化动作空间#

自定义模型可以用于处理以下环境:(1) 有效动作集 每步变化,和/或 (2) 有效动作的数量 非常大。其基本思想是,动作的含义可以完全取决于观察,即,Q(s, a) 中的 a 仅作为 [0, MAX_AVAIL_ACTIONS) 中的一个标记,其意义仅在 s 的上下文中存在。这适用于 DQN 和策略梯度家族 中的算法,并且可以按如下方式实现:

环境应在每个步骤的观察中返回一个掩码和/或有效动作嵌入列表。为了启用批处理,动作的数量可以从1到某个最大数量不等:

class MyParamActionEnv(gym.Env):

def __init__(self, max_avail_actions):

self.action_space = Discrete(max_avail_actions)

self.observation_space = Dict({

"action_mask": Box(0, 1, shape=(max_avail_actions, )),

"avail_actions": Box(-1, 1, shape=(max_avail_actions, action_embedding_sz)),

"real_obs": ...,

})

可以定义一个自定义模型,该模型可以解释观察中的

action_mask和avail_actions部分。在这里,模型通过某些网络输出与每个动作嵌入的点积来计算动作对数。可以通过将概率缩放为零来从softmax中屏蔽无效动作:

class ParametricActionsModel(TFModelV2):

def __init__(self,

obs_space,

action_space,

num_outputs,

model_config,

name,

true_obs_shape=(4,),

action_embed_size=2):

super(ParametricActionsModel, self).__init__(

obs_space, action_space, num_outputs, model_config, name)

self.action_embed_model = FullyConnectedNetwork(...)

def forward(self, input_dict, state, seq_lens):

# Extract the available actions tensor from the observation.

avail_actions = input_dict["obs"]["avail_actions"]

action_mask = input_dict["obs"]["action_mask"]

# Compute the predicted action embedding

action_embed, _ = self.action_embed_model({

"obs": input_dict["obs"]["cart"]

})

# Expand the model output to [BATCH, 1, EMBED_SIZE]. Note that the

# avail actions tensor is of shape [BATCH, MAX_ACTIONS, EMBED_SIZE].

intent_vector = tf.expand_dims(action_embed, 1)

# Batch dot product => shape of logits is [BATCH, MAX_ACTIONS].

action_logits = tf.reduce_sum(avail_actions * intent_vector, axis=2)

# Mask out invalid actions (use tf.float32.min for stability)

inf_mask = tf.maximum(tf.log(action_mask), tf.float32.min)

return action_logits + inf_mask, state

根据你的使用情况,可能使用 just the masking、just action embeddings 或 both 是合理的。关于“仅动作嵌入”的可运行代码示例,请查看 examples/parametric_actions_cartpole.py。

请注意,由于掩码引入了 tf.float32.min 值到模型输出中,这种技术可能不适用于所有算法选项。例如,如果算法错误地处理 tf.float32.min 值,它们可能会崩溃。cartpole 示例为 DQN(必须设置 hiddens=[])、PPO(必须禁用运行均值并设置 model.vf_share_layers=True)以及几种其他算法提供了可行的配置。并非所有算法都支持参数化动作;请参阅 算法概述。

自回归动作分布#

在具有多个组件的动作空间中(例如,Tuple(a1, a2)),您可能希望 a2 取决于 a1 的采样值,即 a2_sampled ~ P(a2 | a1_sampled, obs)。通常,a1 和 a2 会独立采样,这会降低策略的表达能力。

要做到这一点,你需要一个实现自回归模式的定制模型,以及一个利用该模型的自定义动作分布类。autoregressive_action_dist.py 示例展示了如何为简单的二元动作空间实现这一点。对于更复杂的动作空间,建议使用更高效的架构,如 MADE。请注意,采样一个 N部分 动作需要通过模型的 N 次前向传递,然而计算动作的对数概率可以在一次传递中完成:

class BinaryAutoregressiveOutput(ActionDistribution):

"""Action distribution P(a1, a2) = P(a1) * P(a2 | a1)"""

@staticmethod

def required_model_output_shape(self, model_config):

return 16 # controls model output feature vector size

def sample(self):

# first, sample a1

a1_dist = self._a1_distribution()

a1 = a1_dist.sample()

# sample a2 conditioned on a1

a2_dist = self._a2_distribution(a1)

a2 = a2_dist.sample()

# return the action tuple

return TupleActions([a1, a2])

def logp(self, actions):

a1, a2 = actions[:, 0], actions[:, 1]

a1_vec = tf.expand_dims(tf.cast(a1, tf.float32), 1)

a1_logits, a2_logits = self.model.action_model([self.inputs, a1_vec])

return (Categorical(a1_logits, None).logp(a1) + Categorical(

a2_logits, None).logp(a2))

def _a1_distribution(self):

BATCH = tf.shape(self.inputs)[0]

a1_logits, _ = self.model.action_model(

[self.inputs, tf.zeros((BATCH, 1))])

a1_dist = Categorical(a1_logits, None)

return a1_dist

def _a2_distribution(self, a1):

a1_vec = tf.expand_dims(tf.cast(a1, tf.float32), 1)

_, a2_logits = self.model.action_model([self.inputs, a1_vec])

a2_dist = Categorical(a2_logits, None)

return a2_dist

class AutoregressiveActionsModel(TFModelV2):

"""Implements the `.action_model` branch required above."""

def __init__(self, obs_space, action_space, num_outputs, model_config,

name):

super(AutoregressiveActionsModel, self).__init__(

obs_space, action_space, num_outputs, model_config, name)

if action_space != Tuple([Discrete(2), Discrete(2)]):

raise ValueError(

"This model only supports the [2, 2] action space")

# Inputs

obs_input = tf.keras.layers.Input(

shape=obs_space.shape, name="obs_input")

a1_input = tf.keras.layers.Input(shape=(1, ), name="a1_input")

ctx_input = tf.keras.layers.Input(

shape=(num_outputs, ), name="ctx_input")

# Output of the model (normally 'logits', but for an autoregressive

# dist this is more like a context/feature layer encoding the obs)

context = tf.keras.layers.Dense(

num_outputs,

name="hidden",

activation=tf.nn.tanh,

kernel_initializer=normc_initializer(1.0))(obs_input)

# P(a1 | obs)

a1_logits = tf.keras.layers.Dense(

2,

name="a1_logits",

activation=None,

kernel_initializer=normc_initializer(0.01))(ctx_input)

# P(a2 | a1)

# --note: typically you'd want to implement P(a2 | a1, obs) as follows:

# a2_context = tf.keras.layers.Concatenate(axis=1)(

# [ctx_input, a1_input])

a2_context = a1_input

a2_hidden = tf.keras.layers.Dense(

16,

name="a2_hidden",

activation=tf.nn.tanh,

kernel_initializer=normc_initializer(1.0))(a2_context)

a2_logits = tf.keras.layers.Dense(

2,

name="a2_logits",

activation=None,

kernel_initializer=normc_initializer(0.01))(a2_hidden)

# Base layers

self.base_model = tf.keras.Model(obs_input, context)

self.register_variables(self.base_model.variables)

self.base_model.summary()

# Autoregressive action sampler

self.action_model = tf.keras.Model([ctx_input, a1_input],

[a1_logits, a2_logits])

self.action_model.summary()

self.register_variables(self.action_model.variables)

备注

并非所有算法都支持自回归动作分布;更多信息请参见 算法概览表。