
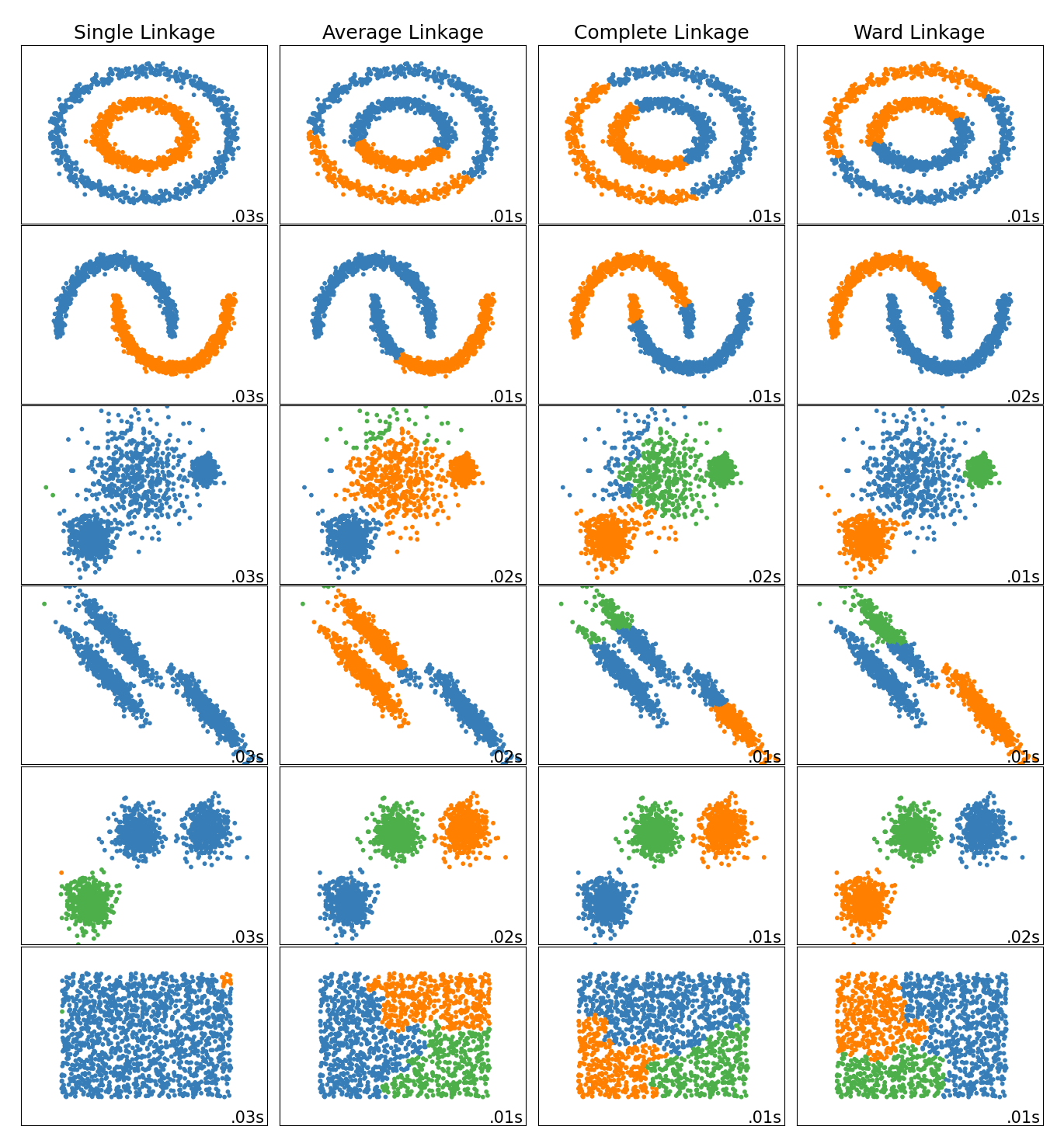

Comparing different hierarchical linkage methods on toy datasets

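This example shows the characteristics of different hierarchical clustering linkage methods on datasets that are "interesting" but still two-dimensional.

The main observations are:

- Single linkage is fast and performs well on non-globular data, but performs poorly in the presence of noise.
- Average and complete linkage perform well on cleanly separated globular clusters, but give mixed results otherwise.
- Ward is the most effective method on noisy data.

While these examples give some intuition about the algorithms, that intuition may not carry over to very high-dimensional data.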
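To make the linkage criteria behind these observations concrete, here is a minimal NumPy sketch that computes the merge distance between two tiny clusters under each criterion. The two clusters and all variable names are hypothetical, chosen only for illustration. The Ward value uses one common textbook form (the increase in within-cluster sum of squares); libraries may scale it differently internally.

```python
import numpy as np

# Two tiny, hypothetical clusters chosen only for illustration.
cluster_a = np.array([[0.0, 0.0], [0.0, 1.0]])
cluster_b = np.array([[3.0, 0.0], [4.0, 0.0]])

# All pairwise Euclidean distances between points of A and points of B.
diff = cluster_a[:, None, :] - cluster_b[None, :, :]
pairwise = np.linalg.norm(diff, axis=-1)

single = pairwise.min()     # closest pair of points
complete = pairwise.max()   # farthest pair of points
average = pairwise.mean()   # mean over all pairs

# Ward (textbook form): increase in within-cluster sum of squares
# that merging A and B would cause.
n_a, n_b = len(cluster_a), len(cluster_b)
centroid_gap = cluster_a.mean(axis=0) - cluster_b.mean(axis=0)
ward = n_a * n_b / (n_a + n_b) * np.dot(centroid_gap, centroid_gap)

print(f"single={single:.3f} complete={complete:.3f} "
      f"average={average:.3f} ward={ward:.3f}")
```

Because single linkage depends only on the closest pair, a few noisy points lying between two groups can be enough to chain them together, which is consistent with its sensitivity to noise noted above.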

import time
import warnings
from itertools import cycle, islice

import matplotlib.pyplot as plt
import numpy as np

from sklearn import cluster, datasets
from sklearn.preprocessing import StandardScaler

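Generate datasets. We choose a size large enough to see the scalability of the algorithms, but not so large that running times become too long.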

n_samples = 1500
noisy_circles = datasets.make_circles(
    n_samples=n_samples, factor=0.5, noise=0.05, random_state=170
)
noisy_moons = datasets.make_moons(n_samples=n_samples, noise=0.05, random_state=170)
blobs = datasets.make_blobs(n_samples=n_samples, random_state=170)
rng = np.random.RandomState(170)
no_structure = rng.rand(n_samples, 2), None

# Anisotropically distributed data
X, y = datasets.make_blobs(n_samples=n_samples, random_state=170)
transformation = [[0.6, -0.6], [-0.4, 0.8]]
X_aniso = np.dot(X, transformation)
aniso = (X_aniso, y)

# Blobs with varied variances
varied = datasets.make_blobs(
    n_samples=n_samples, cluster_std=[1.0, 2.5, 0.5], random_state=170
)
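The anisotropic dataset is produced by a plain linear map, so the covariance of each blob changes in a predictable way: if the points are multiplied by a matrix T, the covariance C becomes TᵀCT. The following sketch is an illustration rather than part of the original example, and names such as `blob_mask` and `expected` are assumptions. It checks this relation numerically for one blob.

```python
import numpy as np
from sklearn import datasets

# Same generator settings as the anisotropic dataset above.
X, y = datasets.make_blobs(n_samples=1500, random_state=170)
transformation = np.array([[0.6, -0.6], [-0.4, 0.8]])
X_aniso = X @ transformation

# Look at a single blob so the covariance describes one cluster's shape.
blob_mask = y == 0
cov_before = np.cov(X[blob_mask].T)
cov_after = np.cov(X_aniso[blob_mask].T)

# For a linear map X -> X @ T, the covariance transforms as T^T C T.
expected = transformation.T @ cov_before @ transformation

print("covariance before:\n", cov_before)
print("covariance after:\n", cov_after)
print("matches T^T C T:", np.allclose(cov_after, expected))
```

The off-diagonal terms of the transformed covariance are what make the blobs elongated and tilted, which is the property that challenges linkage criteria tuned to globular clusters.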

Run the clustering and plot

# Set up cluster parameters
plt.figure(figsize=(9 * 1.3 + 2, 14.5))
plt.subplots_adjust(
    left=0.02, right=0.98, bottom=0.001, top=0.96, wspace=0.05, hspace=0.01
)

plot_num = 1

default_base = {"n_neighbors": 10, "n_clusters": 3}

datasets = [
    (noisy_circles, {"n_clusters": 2}),
    (noisy_moons, {"n_clusters": 2}),
    (varied, {"n_neighbors": 2}),
    (aniso, {"n_neighbors": 2}),
    (blobs, {}),
    (no_structure, {}),
]

for i_dataset, (dataset, algo_params) in enumerate(datasets):
    # Update parameters with dataset-specific values
    params = default_base.copy()
    params.update(algo_params)

    X, y = dataset

    # Normalize the dataset for easier parameter selection
    X = StandardScaler().fit_transform(X)

    # ============
    # Create cluster objects
    # ============
    ward = cluster.AgglomerativeClustering(
        n_clusters=params["n_clusters"], linkage="ward"
    )
    complete = cluster.AgglomerativeClustering(
        n_clusters=params["n_clusters"], linkage="complete"
    )
    average = cluster.AgglomerativeClustering(
        n_clusters=params["n_clusters"], linkage="average"
    )
    single = cluster.AgglomerativeClustering(
        n_clusters=params["n_clusters"], linkage="single"
    )

    clustering_algorithms = (
        ("Single Linkage", single),
        ("Average Linkage", average),
        ("Complete Linkage", complete),
        ("Ward Linkage", ward),
    )

    for name, algorithm in clustering_algorithms:
        t0 = time.time()

        # Catch warnings related to kneighbors_graph
        with warnings.catch_warnings():
            warnings.filterwarnings(
                "ignore",
                message="the number of connected components of the "
                + "connectivity matrix is [0-9]{1,2}"
                + " > 1. Completing it to avoid stopping the tree early.",
                category=UserWarning,
            )
            algorithm.fit(X)

        t1 = time.time()
        if hasattr(algorithm, "labels_"):
            y_pred = algorithm.labels_.astype(int)
        else:
            y_pred = algorithm.predict(X)

        plt.subplot(len(datasets), len(clustering_algorithms), plot_num)
        if i_dataset == 0:
            plt.title(name, size=18)

        colors = np.array(
            list(
                islice(
                    cycle(
                        [
                            "#377eb8",
                            "#ff7f00",
                            "#4daf4a",
                            "#f781bf",
                            "#a65628",
                            "#984ea3",
                            "#999999",
                            "#e41a1c",
                            "#dede00",
                        ]
                    ),
                    int(max(y_pred) + 1),
                )
            )
        )
        plt.scatter(X[:, 0], X[:, 1], s=10, color=colors[y_pred])

        plt.xlim(-2.5, 2.5)
        plt.ylim(-2.5, 2.5)
        plt.xticks(())
        plt.yticks(())
        plt.text(
            0.99,
            0.01,
            ("%.2fs" % (t1 - t0)).lstrip("0"),
            transform=plt.gca().transAxes,
            size=15,
            horizontalalignment="right",
        )
        plot_num += 1

plt.show()
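The figure compares the methods visually. As a complement, the sketch below scores each linkage against the known generator labels on the noisy moons dataset. It is not part of the original example: using `adjusted_rand_score` as the measure and reusing the same generator settings are choices made here for illustration.

```python
from sklearn import cluster, datasets
from sklearn.metrics import adjusted_rand_score
from sklearn.preprocessing import StandardScaler

# Same settings as the noisy moons dataset used in the example.
X, y_true = datasets.make_moons(n_samples=1500, noise=0.05, random_state=170)
X = StandardScaler().fit_transform(X)

scores = {}
for linkage in ("single", "average", "complete", "ward"):
    model = cluster.AgglomerativeClustering(n_clusters=2, linkage=linkage)
    y_pred = model.fit_predict(X)
    # The adjusted Rand index ignores label permutations; 1.0 means the
    # predicted partition matches the generator labels exactly.
    scores[linkage] = adjusted_rand_score(y_true, y_pred)

for linkage, score in scores.items():
    print(f"{linkage:>8}: ARI = {score:.3f}")
```

A score like this is only available when ground-truth labels exist, as they do for these synthetic datasets; it does not apply to the structureless dataset, whose labels are `None`.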

Total running time of the script: (0 minutes 1.012 seconds)
