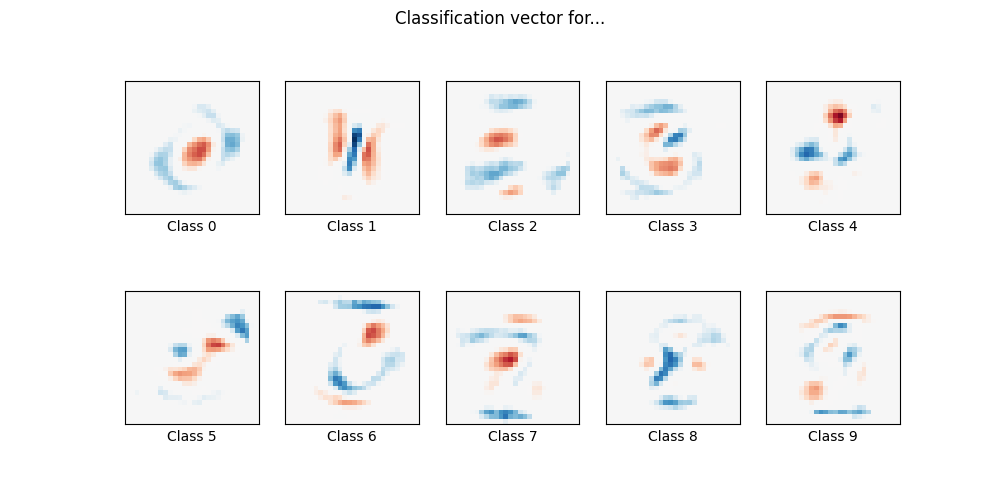

MNIST classification using multinomial logistic regression and L1 regularization

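Here we fit a multinomial logistic regression with an L1 penalty on a subset of the MNIST digit classification task. We use the SAGA algorithm for this purpose: it is a solver that is fast when the number of samples is significantly larger than the number of features, and it can finely optimize non-smooth objective functions, which is the case with the L1 penalty. Test accuracy reaches > 0.8, while the weight vectors remain *sparse* and therefore more easily *interpretable*.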
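To see why an L1 penalty can produce weights that are exactly zero, it helps to look at the soft-thresholding (proximal) step that proximal solvers such as SAGA typically apply for the L1 term. The following is a minimal NumPy sketch of that single step in isolation, not scikit-learn's actual implementation; the function name `soft_threshold`, the example weights, and the threshold value are all illustrative assumptions.

```python
import numpy as np


def soft_threshold(w, threshold):
    """Elementwise proximal operator of threshold * ||w||_1 (illustrative helper)."""
    # Shrink every weight towards zero by `threshold`; weights whose
    # magnitude is below the threshold are set exactly to zero.
    return np.sign(w) * np.maximum(np.abs(w) - threshold, 0.0)


# Hypothetical weight vector: two large weights and two small ones.
w = np.array([0.8, -0.05, 0.02, -1.2])
w_new = soft_threshold(w, threshold=0.1)

print("before:", w)
print("after: ", w_new)
print("fraction of exact zeros:", np.mean(w_new == 0))
```

The two small weights are clipped to exactly zero while the large ones are only shrunk, which is the mechanism behind the sparse coefficient images shown in this example.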

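Note that the accuracy of this L1-penalized linear model is significantly below what can be reached by an L2-penalized linear model or a non-linear multi-layer perceptron model on this dataset.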
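The trade-off between the two penalties can be explored with a small side-by-side fit. The sketch below is an assumption-laden illustration rather than part of the original example: it swaps in the small `load_digits` dataset bundled with scikit-learn (8x8 images, no download) instead of MNIST, reuses the same `C = 50 / n_samples` scaling and `tol=0.1` as the script, and fixes `random_state=0` for repeatability. The numbers it prints will differ from the MNIST results reported here.

```python
import numpy as np
from sklearn.datasets import load_digits
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

# Stand-in dataset (assumption): 8x8 digits shipped with scikit-learn.
X, y = load_digits(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

scaler = StandardScaler()
X_train = scaler.fit_transform(X_train)
X_test = scaler.transform(X_test)

results = {}
for penalty in ("l1", "l2"):
    clf = LogisticRegression(
        C=50.0 / X_train.shape[0],
        penalty=penalty,
        solver="saga",
        tol=0.1,
        random_state=0,
    )
    clf.fit(X_train, y_train)
    # Percentage of coefficients that are exactly zero, and test accuracy.
    sparsity = np.mean(clf.coef_ == 0) * 100
    score = clf.score(X_test, y_test)
    results[penalty] = (sparsity, score)
    print("%s penalty: sparsity %.2f%%, test score %.4f" % (penalty, sparsity, score))
```

On this stand-in dataset the L1 model should show a larger share of exactly-zero coefficients; how the accuracies compare depends on the data and on `C`, so treat the output as indicative only.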

Sparsity with L1 penalty: 76.53%

Test score with L1 penalty: 0.8382

Example run in 11.855 s

# Authors: The scikit-learn developers

# SPDX-License-Identifier: BSD-3-Clause

import time

import matplotlib.pyplot as plt

import numpy as np

from sklearn.datasets import fetch_openml

from sklearn.linear_model import LogisticRegression

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import StandardScaler

from sklearn.utils import check_random_state

# Turn down for faster convergence

t0 = time.time()

train_samples = 5000

# Load data from https://www.openml.org/d/554

X, y = fetch_openml("mnist_784", version=1, return_X_y=True, as_frame=False)

random_state = check_random_state(0)

permutation = random_state.permutation(X.shape[0])

X = X[permutation]

y = y[permutation]

X = X.reshape((X.shape[0], -1))

X_train, X_test, y_train, y_test = train_test_split(
    X, y, train_size=train_samples, test_size=10000
)

scaler = StandardScaler()

X_train = scaler.fit_transform(X_train)

X_test = scaler.transform(X_test)

# Turn up tolerance for faster convergence

clf = LogisticRegression(C=50.0 / train_samples, penalty="l1", solver="saga", tol=0.1)

clf.fit(X_train, y_train)

sparsity = np.mean(clf.coef_ == 0) * 100

score = clf.score(X_test, y_test)

# print('Best C % .4f' % clf.C_)

print("Sparsity with L1 penalty: %.2f%%" % sparsity)

print("Test score with L1 penalty: %.4f" % score)

coef = clf.coef_.copy()

plt.figure(figsize=(10, 5))

scale = np.abs(coef).max()

for i in range(10):
    # One subplot per digit class, showing that class's weights as a 28x28 image
    l1_plot = plt.subplot(2, 5, i + 1)
    l1_plot.imshow(
        coef[i].reshape(28, 28),
        interpolation="nearest",
        cmap=plt.cm.RdBu,
        vmin=-scale,
        vmax=scale,
    )
    l1_plot.set_xticks(())
    l1_plot.set_yticks(())
    l1_plot.set_xlabel("Class %i" % i)

plt.suptitle("Classification vector for...")

run_time = time.time() - t0

print("Example run in %.3f s" % run_time)

plt.show()

Total running time of the script: (0 minutes 11.899 seconds)
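The sparsity figure printed by the script is simply the percentage of entries in `clf.coef_` that are exactly zero. As a small illustration, the sketch below applies the same formula to a made-up coefficient matrix (3 classes by 4 features, values chosen arbitrarily) and also breaks it down per class, which the original script does not do.

```python
import numpy as np

# Hypothetical coefficient matrix: one row per class, one column per feature.
coef = np.array(
    [
        [0.0, 1.5, 0.0, 0.0],
        [0.2, 0.0, -0.7, 0.0],
        [0.0, 0.0, 0.0, 0.0],
    ]
)

# Same formula as in the script: share of exactly-zero coefficients, in percent.
overall_sparsity = np.mean(coef == 0) * 100

# Per-class breakdown (an addition for illustration): average along the feature axis.
per_class_sparsity = np.mean(coef == 0, axis=1) * 100

print("Overall sparsity: %.2f%%" % overall_sparsity)
for i, s in enumerate(per_class_sparsity):
    print("Class %i sparsity: %.2f%%" % (i, s))
```

With the real model, `coef` would be `clf.coef_` with shape `(10, 784)`, so each row corresponds to one of the ten weight images plotted above.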
