
Gradient Boosting Out-of-Bag estimates

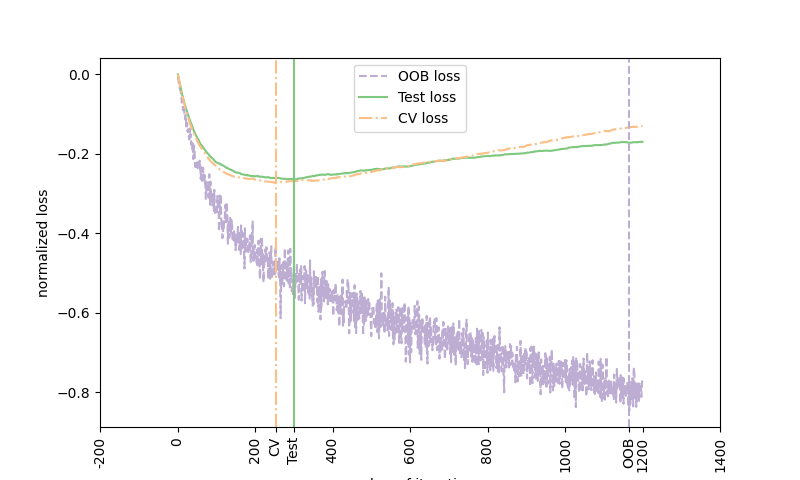

Out-of-bag (OOB) estimates can be a useful heuristic to estimate the "optimal" number of boosting iterations.
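As a minimal sketch of that heuristic, the snippet below fits a deliberately long stochastic boosting model once, reads the iteration that minimises the cumulative OOB loss from `oob_improvement_`, and refits with that many stages. The helper `oob_best_n_estimators`, the synthetic data from `make_classification`, and the hyperparameter values are assumptions chosen for illustration, not part of the original example.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier


def oob_best_n_estimators(clf):
    """Hypothetical helper: 1-based iteration minimising the cumulative OOB loss.

    Assumes ``clf`` was fitted with ``subsample < 1.0``; otherwise
    ``oob_improvement_`` is not available.
    """
    cumulative_oob_loss = -np.cumsum(clf.oob_improvement_)
    return int(np.argmin(cumulative_oob_loss)) + 1


# Assumed synthetic binary classification data.
X, y = make_classification(n_samples=500, n_features=5, random_state=0)

# Fit once with a generous number of stages; the OOB curve comes with the fit.
probe = GradientBoostingClassifier(
    n_estimators=300, learning_rate=0.05, subsample=0.5, random_state=0
).fit(X, y)
best_n = oob_best_n_estimators(probe)

# Refit using the OOB-selected number of boosting iterations.
final = GradientBoostingClassifier(
    n_estimators=best_n, learning_rate=0.05, subsample=0.5, random_state=0
).fit(X, y)
print(best_n, final.n_estimators_)
```

Refitting is the simplest option; alternatively, one could keep `probe` and only use its first `best_n` stages through `staged_predict_proba`.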

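OOB estimates are almost identical to cross-validation estimates, but they can be computed on-the-fly, without the need for repeated model fitting.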
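To illustrate the "on-the-fly" part, `fit` on gradient boosting estimators accepts a `monitor` callable that scikit-learn calls after every stage as `monitor(i, estimator, locals())`; returning `True` stops training. The sketch below reads `oob_improvement_[i]` inside that callback and stops once the cumulative OOB loss has not improved for a number of stages. The `OOBMonitor` class, its `patience` rule, and the synthetic data are hypothetical choices for illustration, not a built-in scikit-learn feature.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier


class OOBMonitor:
    """Hypothetical monitor: tracks OOB improvements while ``fit`` is running."""

    def __init__(self, patience=50):
        self.patience = patience  # assumed stopping rule, not a library default
        self.history = []
        self.cumulative_loss = 0.0
        self.best_loss = np.inf
        self.best_iter = 0

    def __call__(self, i, est, _locals):
        # oob_improvement_[i] is already filled in when the monitor is called.
        improvement = est.oob_improvement_[i]
        self.history.append(improvement)
        self.cumulative_loss -= improvement
        if self.cumulative_loss < self.best_loss:
            self.best_loss, self.best_iter = self.cumulative_loss, i
        # Returning True asks scikit-learn to stop adding stages.
        return i - self.best_iter >= self.patience


X, y = make_classification(n_samples=500, n_features=5, random_state=0)
monitor = OOBMonitor(patience=50)
clf = GradientBoostingClassifier(
    n_estimators=500, learning_rate=0.1, subsample=0.5, random_state=0
)
clf.fit(X, y, monitor=monitor)
print(clf.n_estimators_, monitor.best_iter + 1)
```

If the monitor stops training, scikit-learn truncates `estimators_` and `oob_improvement_` to the stages actually fitted and sets `n_estimators_` accordingly.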

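OOB estimates are only available for Stochastic Gradient Boosting (i.e. `subsample < 1.0`). They are derived from the improvement in loss on the examples not included in the random subsample used to fit each tree (the so-called out-of-bag examples); in scikit-learn this subsample is drawn without replacement.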
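The mechanism can be sketched from scratch: at every stage, draw the in-bag rows, measure the loss on the remaining (out-of-bag) rows, add the new tree, and measure the loss on the same rows again. The difference is that stage's OOB improvement. The function `stochastic_boosting_oob` below is a hypothetical teaching sketch that uses a squared-error loss and `DecisionTreeRegressor` for simplicity; it is not scikit-learn's implementation, which uses the estimator's own loss (log loss for the classifier in this example).

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor


def stochastic_boosting_oob(X, y, n_estimators=50, subsample=0.5,
                            learning_rate=0.1, max_depth=2, seed=0):
    """Hypothetical sketch: least-squares boosting recording per-stage OOB improvement."""
    if not 0.0 < subsample < 1.0:
        # With subsample == 1.0 every row is in-bag, so no OOB rows remain to score.
        raise ValueError("OOB estimates need 0 < subsample < 1")
    rng = np.random.default_rng(seed)
    n_samples = X.shape[0]
    n_inbag = max(1, int(subsample * n_samples))
    pred = np.full(n_samples, y.mean())  # initial constant model
    oob_improvement = np.zeros(n_estimators)
    for m in range(n_estimators):
        # Draw this stage's in-bag rows without replacement; the rest are OOB.
        inbag = np.zeros(n_samples, dtype=bool)
        inbag[rng.choice(n_samples, size=n_inbag, replace=False)] = True
        oob = ~inbag
        loss_before = np.mean((y[oob] - pred[oob]) ** 2)
        # Fit the next tree to the residuals (negative gradient) of in-bag rows only.
        tree = DecisionTreeRegressor(max_depth=max_depth, random_state=seed)
        tree.fit(X[inbag], y[inbag] - pred[inbag])
        pred += learning_rate * tree.predict(X)
        # Same OOB rows after adding the stage: positive means the loss went down.
        loss_after = np.mean((y[oob] - pred[oob]) ** 2)
        oob_improvement[m] = loss_before - loss_after
    return oob_improvement


rng = np.random.default_rng(42)
X = rng.uniform(size=(300, 2))
y = np.sin(3 * X[:, 0]) + 0.1 * rng.normal(size=300)
imp = stochastic_boosting_oob(X, y)
best_iter = int(np.argmin(-np.cumsum(imp))) + 1
print(best_iter)
```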

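The OOB estimator is a pessimistic estimator of the true test loss, but it remains a fairly good approximation for a small number of trees.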

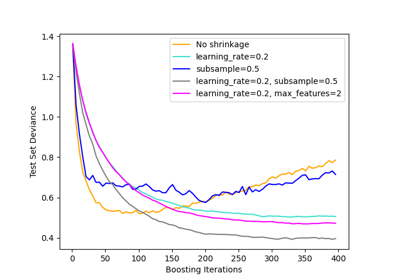

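The figure shows the cumulative sum of the negative OOB improvements as a function of the boosting iteration. As you can see, it tracks the test loss for the first hundred iterations but then diverges in a pessimistic way.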
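A small worked example of how that curve and its minimum are obtained, using made-up improvement values (hypothetical numbers, not taken from the run above): stages with positive improvement push the cumulative OOB loss down, and the first negative improvements make it rise again.

```python
import numpy as np

# Hypothetical per-stage OOB improvements (positive = OOB loss went down).
oob_improvement = np.array([0.40, 0.25, 0.10, 0.02, -0.03, -0.05])

# Cumulative OOB loss relative to the initial model: the "OOB loss" curve.
oob_loss_curve = -np.cumsum(oob_improvement)
# -> [-0.40, -0.65, -0.75, -0.77, -0.74, -0.69]

# Iterations are 1-based, as in x = np.arange(n_estimators) + 1.
iterations = np.arange(len(oob_improvement)) + 1
oob_best_iter = iterations[np.argmin(oob_loss_curve)]
print(oob_best_iter)  # 4
```

This curve is measured relative to the loss before the first tree, whereas the script shifts the test and CV curves so that their first value is 0. A constant shift does not move the `argmin`, so the selected iteration is unaffected.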

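The figure also shows the performance of 3-fold cross-validation, which usually gives a better estimate of the test loss but is computationally more demanding, because the model has to be refitted once per fold.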
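To make the cost difference concrete, here is a sketch that times one fit (which yields the OOB curve as a by-product) against building a 3-fold CV deviance curve in the style of the example's `cv_estimate`. The helper `cv_deviance_curve`, the use of `StratifiedKFold` and `clone`, and the synthetic data are assumptions for illustration; absolute timings depend on the machine.

```python
import time

import numpy as np
from sklearn.base import clone
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.metrics import log_loss
from sklearn.model_selection import StratifiedKFold


def cv_deviance_curve(estimator, X, y, n_splits=3):
    """Hypothetical helper: mean held-out deviance after each boosting stage.

    Every fold needs its own full fit, which is the extra cost OOB avoids.
    """
    curve = np.zeros(estimator.n_estimators)
    for train, test in StratifiedKFold(n_splits=n_splits).split(X, y):
        fold_est = clone(estimator).fit(X[train], y[train])
        for i, proba in enumerate(fold_est.staged_predict_proba(X[test])):
            curve[i] += 2 * log_loss(y[test], proba[:, 1])
    return curve / n_splits


X, y = make_classification(n_samples=500, n_features=5, random_state=0)
gbc = GradientBoostingClassifier(
    n_estimators=200, learning_rate=0.05, subsample=0.5, random_state=0
)

start = time.perf_counter()
gbc.fit(X, y)  # one fit: oob_improvement_ is computed along the way
t_oob = time.perf_counter() - start

start = time.perf_counter()
cv_curve = cv_deviance_curve(gbc, X, y, n_splits=3)  # three additional fits
t_cv = time.perf_counter() - start

print(f"single fit with OOB: {t_oob:.2f}s, 3-fold CV curve: {t_cv:.2f}s")
```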

Accuracy: 0.6860

```python
# Authors: The scikit-learn developers
# SPDX-License-Identifier: BSD-3-Clause

import matplotlib.pyplot as plt
import numpy as np
from scipy.special import expit

from sklearn import ensemble
from sklearn.metrics import log_loss
from sklearn.model_selection import KFold, train_test_split

# Generate data (adapted from G. Ridgeway's gbm example)
n_samples = 1000
random_state = np.random.RandomState(13)
x1 = random_state.uniform(size=n_samples)
x2 = random_state.uniform(size=n_samples)
x3 = random_state.randint(0, 4, size=n_samples)

p = expit(np.sin(3 * x1) - 4 * x2 + x3)
y = random_state.binomial(1, p, size=n_samples)

X = np.c_[x1, x2, x3]
X = X.astype(np.float32)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.5, random_state=9)

# Fit classifier with out-of-bag estimates (requires subsample < 1.0)
params = {
    "n_estimators": 1200,
    "max_depth": 3,
    "subsample": 0.5,
    "learning_rate": 0.01,
    "min_samples_leaf": 1,
    "random_state": 3,
}
clf = ensemble.GradientBoostingClassifier(**params)

clf.fit(X_train, y_train)
acc = clf.score(X_test, y_test)
print("Accuracy: {:.4f}".format(acc))

n_estimators = params["n_estimators"]
x = np.arange(n_estimators) + 1


def heldout_score(clf, X_test, y_test):
    """Compute deviance scores on ``X_test`` and ``y_test`` after each stage."""
    score = np.zeros((n_estimators,), dtype=np.float64)
    for i, y_proba in enumerate(clf.staged_predict_proba(X_test)):
        score[i] = 2 * log_loss(y_test, y_proba[:, 1])
    return score


def cv_estimate(n_splits=3):
    """Average held-out deviance per stage over ``n_splits`` folds of the training set."""
    cv = KFold(n_splits=n_splits)
    cv_clf = ensemble.GradientBoostingClassifier(**params)
    val_scores = np.zeros((n_estimators,), dtype=np.float64)
    for train, test in cv.split(X_train, y_train):
        cv_clf.fit(X_train[train], y_train[train])
        val_scores += heldout_score(cv_clf, X_train[test], y_train[test])
    val_scores /= n_splits
    return val_scores


# Estimate best n_estimator using cross-validation
cv_score = cv_estimate(3)

# Compute the per-stage deviance on the test data
test_score = heldout_score(clf, X_test, y_test)

# Negative cumulative sum of OOB improvements
cumsum = -np.cumsum(clf.oob_improvement_)

# Min loss according to OOB
oob_best_iter = x[np.argmin(cumsum)]

# Min loss according to test (normalize such that first loss is 0)
test_score -= test_score[0]
test_best_iter = x[np.argmin(test_score)]

# Min loss according to CV (normalize such that first loss is 0)
cv_score -= cv_score[0]
cv_best_iter = x[np.argmin(cv_score)]

# Color brew for the three curves
oob_color = list(map(lambda x: x / 256.0, (190, 174, 212)))
test_color = list(map(lambda x: x / 256.0, (127, 201, 127)))
cv_color = list(map(lambda x: x / 256.0, (253, 192, 134)))

# Line type for the three curves
oob_line = "dashed"
test_line = "solid"
cv_line = "dashdot"

# Plot curves and vertical lines for best iterations
plt.figure(figsize=(8, 4.8))
plt.plot(x, cumsum, label="OOB loss", color=oob_color, linestyle=oob_line)
plt.plot(x, test_score, label="Test loss", color=test_color, linestyle=test_line)
plt.plot(x, cv_score, label="CV loss", color=cv_color, linestyle=cv_line)
plt.axvline(x=oob_best_iter, color=oob_color, linestyle=oob_line)
plt.axvline(x=test_best_iter, color=test_color, linestyle=test_line)
plt.axvline(x=cv_best_iter, color=cv_color, linestyle=cv_line)

# Add the three best iterations to the xticks
xticks = plt.xticks()
xticks_pos = np.array(
    xticks[0].tolist() + [oob_best_iter, cv_best_iter, test_best_iter]
)
xticks_label = np.array(list(map(lambda t: int(t), xticks[0])) + ["OOB", "CV", "Test"])
ind = np.argsort(xticks_pos)
xticks_pos = xticks_pos[ind]
xticks_label = xticks_label[ind]
plt.xticks(xticks_pos, xticks_label, rotation=90)

plt.legend(loc="upper center")
plt.ylabel("normalized loss")
plt.xlabel("number of iterations")

plt.show()
```
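The `heldout_score` function reports `2 * log_loss(...)` as the "deviance". For a binary target this is the binomial deviance, $-\frac{2}{n}\sum_i \left[y_i \log p_i + (1 - y_i)\log(1 - p_i)\right]$, where $p_i$ is the predicted probability of the positive class. The check below writes that formula out by hand on a few hypothetical labels and probabilities and compares it with the expression used in the script.

```python
import numpy as np
from sklearn.metrics import log_loss

# Hypothetical labels and predicted probabilities of the positive class.
y_true = np.array([0, 1, 1, 0, 1])
p = np.array([0.2, 0.7, 0.9, 0.4, 0.6])

# Binomial deviance written out: -2 times the mean Bernoulli log-likelihood.
deviance_manual = -2 * np.mean(y_true * np.log(p) + (1 - y_true) * np.log(1 - p))

# What heldout_score computes for one stage (1-D y_pred = positive-class probability).
deviance_sklearn = 2 * log_loss(y_true, p)

print(np.isclose(deviance_manual, deviance_sklearn))  # True
```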

Total running time of the script: (0 minutes 4.863 seconds)

Related examples

Gradient Boosting regularization