
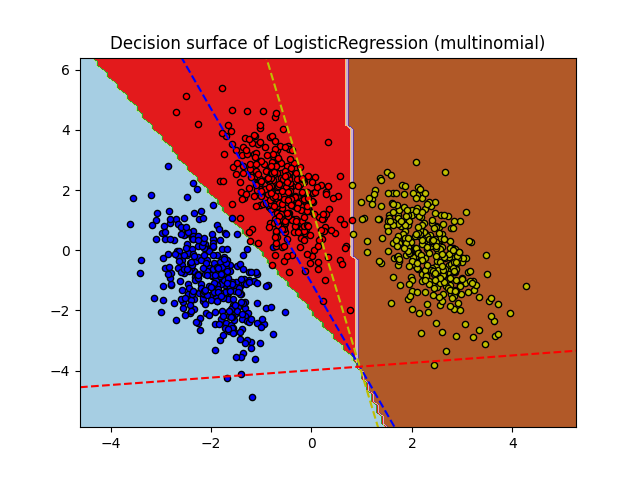

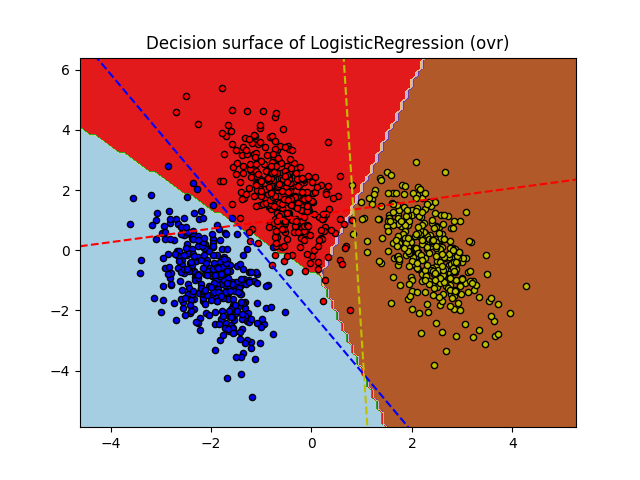

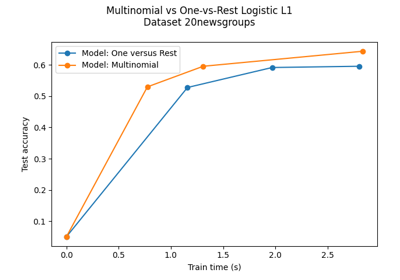

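Plot multinomial and One-vs-Rest Logistic Regression

Plot the decision surfaces of multinomial and One-vs-Rest logistic regression. The hyperplanes corresponding to the three One-vs-Rest (OVR) classifiers are drawn as dashed lines.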

training score : 0.995 (multinomial)

training score : 0.976 (ovr)
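Each dashed line is the set of points where one classifier's linear score `w0 * x0 + w1 * x1 + b` equals zero; the script's `line` helper solves that equation for the second feature. The following is a minimal sketch of the same arithmetic in plain Python. The function name `boundary_x1` and the coefficient values are hypothetical and chosen only for illustration; they are not taken from the fitted models.

```python
def boundary_x1(x0, w0, w1, b):
    """Return x1 such that w0 * x0 + w1 * x1 + b == 0 (requires w1 != 0)."""
    if w1 == 0:
        raise ValueError("vertical boundary: x1 is not a function of x0")
    return (-(x0 * w0) - b) / w1


# Hypothetical coefficients and axis limits, for illustration only.
w0, w1, b = 2.0, -1.0, 0.5
xmin, xmax = -3.0, 3.0

# Two endpoints are enough to draw a straight line across the plot.
endpoints = [(x0, boundary_x1(x0, w0, w1, b)) for x0 in (xmin, xmax)]

# Every point on the line should have a linear score of zero.
scores = [w0 * x0 + w1 * x1 + b for x0, x1 in endpoints]
print(endpoints, scores)
```

Evaluating the line only at the two x-axis limits is sufficient because the boundary is straight; the guard on `w1` covers the case of a vertical boundary, which the plotting script does not handle.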

# Authors: The scikit-learn developers
# SPDX-License-Identifier: BSD-3-Clause

import matplotlib.pyplot as plt
import numpy as np

from sklearn.datasets import make_blobs
from sklearn.inspection import DecisionBoundaryDisplay
from sklearn.linear_model import LogisticRegression
from sklearn.multiclass import OneVsRestClassifier

# Make a 3-class dataset for classification
centers = [[-5, 0], [0, 1.5], [5, -1]]
X, y = make_blobs(n_samples=1000, centers=centers, random_state=40)
transformation = [[0.4, 0.2], [-0.4, 1.2]]
X = np.dot(X, transformation)

for multi_class in ("multinomial", "ovr"):
    clf = LogisticRegression(solver="sag", max_iter=100, random_state=42)
    if multi_class == "ovr":
        clf = OneVsRestClassifier(clf)
    clf.fit(X, y)

    # Print the training scores
    print("training score : %.3f (%s)" % (clf.score(X, y), multi_class))

    _, ax = plt.subplots()
    DecisionBoundaryDisplay.from_estimator(
        clf, X, response_method="predict", cmap=plt.cm.Paired, ax=ax
    )
    plt.title("Decision surface of LogisticRegression (%s)" % multi_class)
    plt.axis("tight")

    # Also plot the training points
    colors = "bry"
    for i, color in zip(clf.classes_, colors):
        idx = np.where(y == i)
        plt.scatter(X[idx, 0], X[idx, 1], c=color, edgecolor="black", s=20)

    # Plot the three one-against-all classifiers
    xmin, xmax = plt.xlim()
    ymin, ymax = plt.ylim()
    if multi_class == "ovr":
        coef = np.concatenate([est.coef_ for est in clf.estimators_])
        intercept = np.concatenate([est.intercept_ for est in clf.estimators_])
    else:
        coef = clf.coef_
        intercept = clf.intercept_

    def plot_hyperplane(c, color):
        def line(x0):
            # Solve coef[c, 0] * x0 + coef[c, 1] * x1 + intercept[c] = 0 for x1
            return (-(x0 * coef[c, 0]) - intercept[c]) / coef[c, 1]

        plt.plot([xmin, xmax], [line(xmin), line(xmax)], ls="--", color=color)

    for i, color in zip(clf.classes_, colors):
        plot_hyperplane(i, color)

plt.show()
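The two strategies compared by the script also differ in how per-class linear scores are usually turned into probabilities: a multinomial model applies a softmax across all classes at once, while a one-vs-rest scheme applies a sigmoid to each binary score independently and then rescales each row to sum to one. The sketch below illustrates that difference with NumPy only. The helper names `softmax_proba` and `ovr_proba` and the score matrix are hypothetical; in a real fit the two models would also learn different coefficients, so their scores would not be identical as they are here.

```python
import numpy as np


def softmax_proba(scores):
    """Joint normalization across classes, as in a multinomial model."""
    shifted = scores - scores.max(axis=1, keepdims=True)  # numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


def ovr_proba(scores):
    """Independent sigmoid per class, then rescale each row to sum to one."""
    sig = 1.0 / (1.0 + np.exp(-scores))
    return sig / sig.sum(axis=1, keepdims=True)


# Hypothetical decision scores for two samples and three classes.
scores = np.array([[2.0, 0.5, -1.0], [-0.5, 0.2, 0.1]])

p_multi = softmax_proba(scores)
p_ovr = ovr_proba(scores)

print("softmax :", np.round(p_multi, 3))
print("ovr     :", np.round(p_ovr, 3))
```

Both mappings are monotonic in each score, so with identical scores the predicted class is the same and only the probability values change. The differences in the plotted decision surfaces therefore come from the fitted coefficients, not from the normalization step.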

Total running time of the script: (0 minutes 0.104 seconds)
