
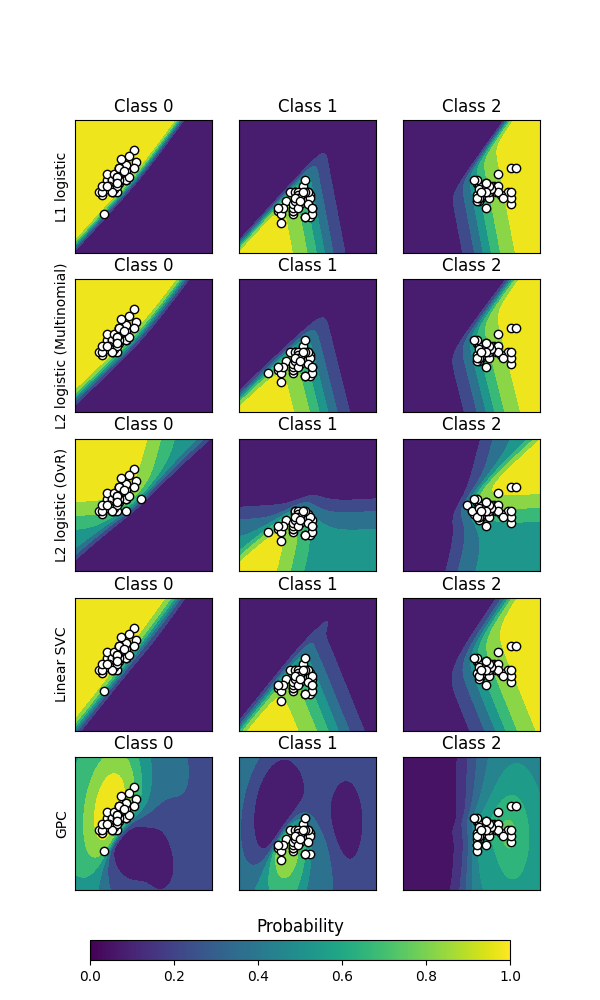

Plot classification probability

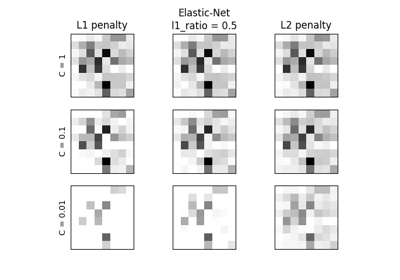

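Plot the classification probability of different classifiers. We use a 3-class dataset (the first two features of iris) and classify it with a Support Vector classifier, L1- and L2-penalized logistic regression (multinomial multiclass), a One-Vs-Rest version built on logistic regression, and Gaussian process classification.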
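"Multinomial" means the logistic model is fitted jointly across all classes. It produces one linear score per class and turns those scores into probabilities with a softmax. The following minimal sketch uses the same two iris features. It assumes the default `lbfgs` solver instead of the example's `saga` (to keep it quick) and checks that `predict_proba` matches a softmax over `decision_function`:

```python
import numpy as np
from scipy.special import softmax
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression

# Same 2-feature view of iris as in the example.
X, y = load_iris(return_X_y=True)
X = X[:, :2]

# Assumption: default lbfgs solver rather than saga, for speed.
# With 3 classes, this fits a single multinomial (softmax) model.
clf = LogisticRegression(C=10, max_iter=1000).fit(X, y)

scores = clf.decision_function(X)       # one linear score per class, shape (150, 3)
proba_manual = softmax(scores, axis=1)  # normalize the scores jointly across classes
proba_sklearn = clf.predict_proba(X)

print(np.allclose(proba_manual, proba_sklearn))  # True
```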

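Linear SVC is not a probabilistic classifier by default, but it has a built-in calibration option, which is enabled in this example (`probability=True`).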
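The following sketch shows what `probability=True` changes, using the same two iris features. According to the scikit-learn user guide, the probabilities come from Platt scaling, which libsvm fits with an internal cross-validation. This adds fitting time and is the reason the example passes `random_state`. The sketch checks only observable behavior. The disagreement fraction it prints is computed, not asserted to have any particular value.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)
X = X[:, :2]  # first two features, as in the example

# Without probability=True, SVC does not expose predict_proba at all.
plain_svc = SVC(kernel="linear", C=10).fit(X, y)
print(hasattr(plain_svc, "predict_proba"))  # False

# With probability=True, an extra calibration step is fitted during fit().
calibrated_svc = SVC(kernel="linear", C=10, probability=True, random_state=0).fit(X, y)
proba = calibrated_svc.predict_proba(X)
print(proba.shape, np.allclose(proba.sum(axis=1), 1.0))

# The calibration is fitted separately from the SVM decision function,
# so argmax(predict_proba) is not guaranteed to agree with predict().
disagree = np.mean(proba.argmax(axis=1) != calibrated_svc.predict(X))
print(f"fraction where argmax(proba) != predict: {disagree:.3f}")
```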

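Logistic regression with One-Vs-Rest is not a multiclass classifier out of the box: it fits one independent binary classifier per class. As a result, it has more trouble separating the second and third classes (labels 1 and 2) than the other estimators do.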
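The following sketch shows why OvR behaves differently. It again assumes the default `lbfgs` solver rather than `saga`. It inspects the fitted `OneVsRestClassifier`, which holds one binary logistic regression per class. Each model's positive-class probability is computed independently. For a multiclass target, these probabilities are then renormalized row by row. The manual renormalization below is our reading of how `predict_proba` combines them, confirmed only by the `allclose` check.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.multiclass import OneVsRestClassifier

X, y = load_iris(return_X_y=True)
X = X[:, :2]

# Assumption: default lbfgs solver instead of saga, for speed.
ovr = OneVsRestClassifier(LogisticRegression(C=10, max_iter=1000)).fit(X, y)

# One binary model per class: "class k" vs "all other classes".
print(len(ovr.estimators_))  # 3

# Each binary model scores only its own class; the three scores are not
# coupled during training, so a row need not sum to 1.
per_class = np.column_stack([est.predict_proba(X)[:, 1] for est in ovr.estimators_])

# Renormalize each row so the three class probabilities sum to 1.
proba_manual = per_class / per_class.sum(axis=1, keepdims=True)
print(np.allclose(proba_manual, ovr.predict_proba(X)))  # True
```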

Accuracy (train) for L1 logistic: 83.3%

Accuracy (train) for L2 logistic (Multinomial): 82.7%

Accuracy (train) for L2 logistic (OvR): 79.3%

Accuracy (train) for Linear SVC: 82.0%

Accuracy (train) for GPC: 82.7%

```python
# Authors: The scikit-learn developers
# SPDX-License-Identifier: BSD-3-Clause

import matplotlib.pyplot as plt
import numpy as np
from matplotlib import cm

from sklearn import datasets
from sklearn.gaussian_process import GaussianProcessClassifier
from sklearn.gaussian_process.kernels import RBF
from sklearn.inspection import DecisionBoundaryDisplay
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.multiclass import OneVsRestClassifier
from sklearn.svm import SVC

iris = datasets.load_iris()
X = iris.data[:, 0:2]  # we only take the first two features for visualization
y = iris.target

n_features = X.shape[1]

C = 10
# For GPC: a constant-scaled RBF kernel with one length scale per feature.
kernel = 1.0 * RBF([1.0, 1.0])

# Create different classifiers.
classifiers = {
    "L1 logistic": LogisticRegression(C=C, penalty="l1", solver="saga", max_iter=10000),
    "L2 logistic (Multinomial)": LogisticRegression(
        C=C, penalty="l2", solver="saga", max_iter=10000
    ),
    "L2 logistic (OvR)": OneVsRestClassifier(
        LogisticRegression(C=C, penalty="l2", solver="saga", max_iter=10000)
    ),
    "Linear SVC": SVC(kernel="linear", C=C, probability=True, random_state=0),
    "GPC": GaussianProcessClassifier(kernel),
}

n_classifiers = len(classifiers)

# One row of panels per classifier, one column per class.
fig, axes = plt.subplots(
    nrows=n_classifiers,
    ncols=len(iris.target_names),
    figsize=(3 * 2, n_classifiers * 2),
)

for classifier_idx, (name, classifier) in enumerate(classifiers.items()):
    # Fit on the full dataset and report the training accuracy.
    y_pred = classifier.fit(X, y).predict(X)
    accuracy = accuracy_score(y, y_pred)
    print(f"Accuracy (train) for {name}: {accuracy:0.1%}")
    for label in np.unique(y):
        # plot the probability estimate provided by the classifier
        disp = DecisionBoundaryDisplay.from_estimator(
            classifier,
            X,
            response_method="predict_proba",
            class_of_interest=label,
            ax=axes[classifier_idx, label],
            vmin=0,
            vmax=1,
        )
        axes[classifier_idx, label].set_title(f"Class {label}")
        # plot data predicted to belong to the given class
        mask_y_pred = y_pred == label
        axes[classifier_idx, label].scatter(
            X[mask_y_pred, 0], X[mask_y_pred, 1], marker="o", c="w", edgecolor="k"
        )
        axes[classifier_idx, label].set(xticks=(), yticks=())
    axes[classifier_idx, 0].set_ylabel(name)

# Shared horizontal colorbar for the probability scale.
ax = plt.axes([0.15, 0.04, 0.7, 0.02])
plt.title("Probability")
_ = plt.colorbar(
    cm.ScalarMappable(norm=None, cmap="viridis"), cax=ax, orientation="horizontal"
)

plt.show()
```
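Each panel above is drawn by `DecisionBoundaryDisplay.from_estimator(..., response_method="predict_proba", class_of_interest=label)`. Conceptually, this evaluates `predict_proba` on a regular grid over the data range and fills contours of one probability column. The following sketch does the same by hand. `class_probability_grid` is a hypothetical helper, not a scikit-learn function. The `grid_resolution=100` and `eps=1.0` values are assumptions chosen to resemble the display's defaults, not copied from its source. Any fitted classifier with `predict_proba` should work; a multinomial `LogisticRegression` keeps it fast.

```python
import matplotlib

matplotlib.use("Agg")  # headless backend so the sketch runs without a display
import matplotlib.pyplot as plt
import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression

X, y = load_iris(return_X_y=True)
X = X[:, :2]
clf = LogisticRegression(C=10, max_iter=1000).fit(X, y)


def class_probability_grid(estimator, X, label, grid_resolution=100, eps=1.0):
    """Hypothetical helper: evaluate P(label | x) on a regular grid over X's range."""
    # Pad the data range by eps on every side.
    x0 = np.linspace(X[:, 0].min() - eps, X[:, 0].max() + eps, grid_resolution)
    x1 = np.linspace(X[:, 1].min() - eps, X[:, 1].max() + eps, grid_resolution)
    xx0, xx1 = np.meshgrid(x0, x1)
    grid = np.column_stack([xx0.ravel(), xx1.ravel()])
    # Pick the predict_proba column that corresponds to `label`.
    column = np.flatnonzero(estimator.classes_ == label)[0]
    proba = estimator.predict_proba(grid)[:, column]
    return xx0, xx1, proba.reshape(xx0.shape)


xx0, xx1, zz = class_probability_grid(clf, X, label=1)

# Filled contours on a fixed 0..1 scale, plus the points predicted as class 1.
fig, ax = plt.subplots()
ax.contourf(xx0, xx1, zz, vmin=0, vmax=1)
mask = clf.predict(X) == 1
ax.scatter(X[mask, 0], X[mask, 1], marker="o", c="w", edgecolor="k")
```

Swapping `clf` for any of the estimators in the `classifiers` dictionary should give a panel comparable to the corresponding one above.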

