
Multiclass sparse logistic regression on the 20 newsgroups dataset

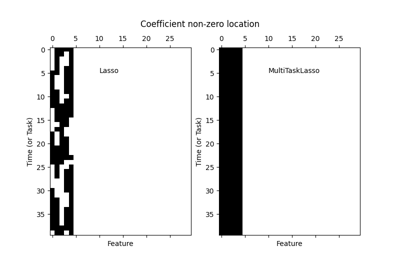

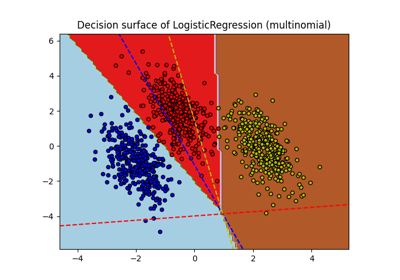

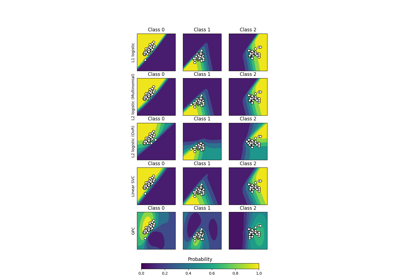

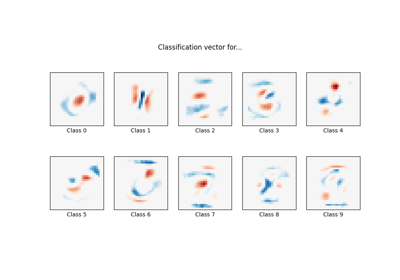

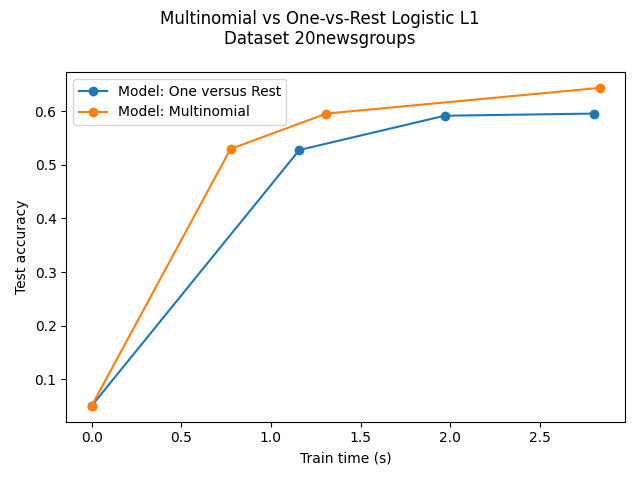

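This example compares multinomial logistic regression with an L1 penalty against one-versus-rest L1 logistic regression for classifying documents from the 20newsgroups dataset. On this larger-scale dataset, multinomial logistic regression trains faster and yields more accurate results.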

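Here we use L1 sparsity, which trims the weights of uninformative features to zero. This is useful when the goal is to extract the strongly discriminative vocabulary of each class. If the goal is the best predictive accuracy, it is better to use the L2 penalty, which does not induce sparsity.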
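To make the sparsity effect concrete, here is a minimal sketch that fits the same model twice on a small synthetic dataset, once with an L1 penalty and once with an L2 penalty, and compares the fraction of non-zero coefficients. The synthetic data, the helper name `fraction_nonzero`, and the value `C=0.05` are assumptions chosen for illustration; they are not part of the original example.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for a text dataset (assumption): 100 features,
# of which only 5 carry information about the label.
X, y = make_classification(
    n_samples=500,
    n_features=100,
    n_informative=5,
    n_redundant=0,
    random_state=0,
)
# SAGA converges faster when features share a similar scale.
X = StandardScaler().fit_transform(X)


def fraction_nonzero(penalty):
    """Fit a SAGA logistic regression and return the share of non-zero weights."""
    clf = LogisticRegression(
        solver="saga", penalty=penalty, C=0.05, max_iter=2000, random_state=0
    )
    clf.fit(X, y)
    return float(np.mean(clf.coef_ != 0))


l1_density = fraction_nonzero("l1")
l2_density = fraction_nonzero("l2")
print("L1 fraction of non-zero weights: %.2f" % l1_density)
print("L2 fraction of non-zero weights: %.2f" % l2_density)
```

With the L1 penalty, many of the uninformative features should end up with a weight of exactly zero, whereas the L2 penalty only shrinks weights toward zero without eliminating them.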

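A more traditional (and possibly better) way to predict on a sparse subset of input features is to apply univariate feature selection and then fit a traditional (L2-penalized) logistic regression model.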
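The alternative described above could look roughly like the following sketch: a pipeline that keeps the `k` highest-scoring features according to a univariate test and then fits an L2-penalized logistic regression on them. The synthetic data, the choice of `f_classif` as the scoring function, and `k=20` are assumptions for illustration; for non-negative term counts, `chi2` is another common choice.

```python
from sklearn.datasets import make_classification
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline

# Synthetic 3-class data (assumption): 200 features, 10 informative.
X, y = make_classification(
    n_samples=600,
    n_features=200,
    n_informative=10,
    n_redundant=0,
    n_classes=3,
    n_clusters_per_class=1,
    random_state=0,
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, random_state=42, stratify=y, test_size=0.1
)

# Step 1: univariate selection keeps the 20 best-scoring features.
# Step 2: logistic regression with its default L2 penalty.
pipe = make_pipeline(
    SelectKBest(f_classif, k=20),
    LogisticRegression(max_iter=1000),
)
pipe.fit(X_train, y_train)

n_selected = int(pipe.named_steps["selectkbest"].get_support().sum())
accuracy = pipe.score(X_test, y_test)
print("Selected features: %i" % n_selected)
print("Test accuracy: %.4f" % accuracy)
```

Putting the selector inside the pipeline means the feature scores are computed on the training split only, so the test split does not influence which features are kept.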

Dataset 20newsgroup, train_samples=4500, n_features=130107, n_classes=20
[model=One versus Rest, solver=saga] Number of epochs: 1
[model=One versus Rest, solver=saga] Number of epochs: 2
[model=One versus Rest, solver=saga] Number of epochs: 3
Test accuracy for model ovr: 0.5960
% non-zero coefficients for model ovr, per class:
 [0.26593496 0.43348936 0.26362917 0.31973683 0.37815029 0.2928359
 0.27054655 0.62717609 0.19522393 0.30897646 0.34586917 0.28207552
 0.34125758 0.29898468 0.34279478 0.59489497 0.38353048 0.35278655
 0.19829832 0.14603365]
Run time (3 epochs) for model ovr:2.80
[model=Multinomial, solver=saga] Number of epochs: 1
[model=Multinomial, solver=saga] Number of epochs: 2
[model=Multinomial, solver=saga] Number of epochs: 5
Test accuracy for model multinomial: 0.6440
% non-zero coefficients for model multinomial, per class:
 [0.36047253 0.1268187 0.10606655 0.17985197 0.5395559 0.07993421
 0.06686804 0.21443888 0.11528972 0.2075215 0.10914094 0.11144673
 0.13988486 0.09684337 0.26286057 0.11682692 0.55800226 0.17370318
 0.11452112 0.14603365]
Run time (5 epochs) for model multinomial:2.83
Example run in 11.676 s

# Author: Arthur Mensch

import timeit
import warnings

import matplotlib.pyplot as plt
import numpy as np

from sklearn.datasets import fetch_20newsgroups_vectorized
from sklearn.exceptions import ConvergenceWarning
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.multiclass import OneVsRestClassifier

warnings.filterwarnings("ignore", category=ConvergenceWarning, module="sklearn")
t0 = timeit.default_timer()

# We use the SAGA solver
solver = "saga"

# Turn down for faster run time
n_samples = 5000

X, y = fetch_20newsgroups_vectorized(subset="all", return_X_y=True)
X = X[:n_samples]
y = y[:n_samples]

X_train, X_test, y_train, y_test = train_test_split(
    X, y, random_state=42, stratify=y, test_size=0.1
)
train_samples, n_features = X_train.shape
n_classes = np.unique(y).shape[0]

print(
    "Dataset 20newsgroup, train_samples=%i, n_features=%i, n_classes=%i"
    % (train_samples, n_features, n_classes)
)

models = {
    "ovr": {"name": "One versus Rest", "iters": [1, 2, 3]},
    "multinomial": {"name": "Multinomial", "iters": [1, 2, 5]},
}

for model in models:
    # Add initial chance-level values for plotting purposes
    accuracies = [1 / n_classes]
    times = [0]
    densities = [1]

    model_params = models[model]

    # Small number of epochs for fast run time
    for this_max_iter in model_params["iters"]:
        print(
            "[model=%s, solver=%s] Number of epochs: %s"
            % (model_params["name"], solver, this_max_iter)
        )
        clf = LogisticRegression(
            solver=solver,
            penalty="l1",
            max_iter=this_max_iter,
            random_state=42,
        )
        if model == "ovr":
            clf = OneVsRestClassifier(clf)
        t1 = timeit.default_timer()
        clf.fit(X_train, y_train)
        train_time = timeit.default_timer() - t1

        y_pred = clf.predict(X_test)
        accuracy = np.sum(y_pred == y_test) / y_test.shape[0]
        if model == "ovr":
            # Stack the per-class binary models into one (n_classes, n_features) array
            coef = np.concatenate([est.coef_ for est in clf.estimators_])
        else:
            coef = clf.coef_
        # Percentage of non-zero coefficients for each class
        density = np.mean(coef != 0, axis=1) * 100
        accuracies.append(accuracy)
        densities.append(density)
        times.append(train_time)
    models[model]["times"] = times
    models[model]["densities"] = densities
    models[model]["accuracies"] = accuracies
    print("Test accuracy for model %s: %.4f" % (model, accuracies[-1]))
    print(
        "%% non-zero coefficients for model %s, per class:\n %s"
        % (model, densities[-1])
    )
    print(
        "Run time (%i epochs) for model %s:%.2f"
        % (model_params["iters"][-1], model, times[-1])
    )

fig = plt.figure()
ax = fig.add_subplot(111)

for model in models:
    name = models[model]["name"]
    times = models[model]["times"]
    accuracies = models[model]["accuracies"]
    ax.plot(times, accuracies, marker="o", label="Model: %s" % name)
    ax.set_xlabel("Train time (s)")
    ax.set_ylabel("Test accuracy")
ax.legend()
fig.suptitle("Multinomial vs One-vs-Rest Logistic L1\nDataset %s" % "20newsgroups")
fig.tight_layout()
fig.subplots_adjust(top=0.85)
run_time = timeit.default_timer() - t0
print("Example run in %.3f s" % run_time)
plt.show()

Total running time of the script: (0 minutes 11.717 seconds)
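The script reports "% non-zero coefficients" per class by stacking the coefficient rows and averaging a boolean mask along the feature axis. The following small sketch reproduces that arithmetic on a hand-written coefficient matrix; the toy values and the variable names `per_class_rows` and `stacked` are assumptions for illustration only.

```python
import numpy as np

# Toy coefficients (assumption): 3 classes, 4 features.
coef = np.array(
    [
        [0.0, 1.2, 0.0, 0.0],   # 1 of 4 weights is non-zero
        [0.5, 0.0, -0.3, 0.0],  # 2 of 4 weights are non-zero
        [0.0, 0.0, 0.0, 0.0],   # every weight was trimmed to zero
    ]
)

# In the one-versus-rest case, each binary estimator holds a (1, n_features)
# row; concatenating the rows gives a (n_classes, n_features) array.
per_class_rows = [row.reshape(1, -1) for row in coef]
stacked = np.concatenate(per_class_rows)

# The mean of the boolean mask along axis=1 is the fraction of non-zero
# weights per class; multiplying by 100 turns it into a percentage.
density = np.mean(stacked != 0, axis=1) * 100
print(density.tolist())  # [25.0, 50.0, 0.0]
```

Because the result is a percentage, the values printed in the output above (for example 0.26593496) mean that well under 1% of the 130107 features keep a non-zero weight for that class.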
