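Release Highlights for scikit-learn 0.23#

We are pleased to announce the release of scikit-learn 0.23! This release includes many bug fixes and improvements, as well as some new key features. The major ones are described below. For an exhaustive list of all the changes, please refer to the release notes.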

To install the latest version (with pip):

pip install --upgrade scikit-learn

or with conda:

conda install -c conda-forge scikit-learn

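Generalized Linear Models, and Poisson loss for gradient boosting#

Long-awaited Generalized Linear Models with non-normal loss functions are now available. In particular, three new regressors were implemented: PoissonRegressor, GammaRegressor, and TweedieRegressor. The Poisson regressor can be used to model positive integer counts or relative frequencies. Read more in the User Guide. Additionally, HistGradientBoostingRegressor supports a new 'poisson' loss as well.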

import numpy as np

from sklearn.model_selection import train_test_split

from sklearn.linear_model import PoissonRegressor

from sklearn.ensemble import HistGradientBoostingRegressor

n_samples, n_features = 1000, 20

rng = np.random.RandomState(0)

X = rng.randn(n_samples, n_features)

# positive integer target correlated with X[:, 5], with many zeros:

y = rng.poisson(lam=np.exp(X[:, 5]) / 2)

X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=rng)

glm = PoissonRegressor()

gbdt = HistGradientBoostingRegressor(loss="poisson", learning_rate=0.01)

glm.fit(X_train, y_train)

gbdt.fit(X_train, y_train)

print(glm.score(X_test, y_test))

print(gbdt.score(X_test, y_test))

0.35776189065725805

0.42425183539869415
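The example above covers only PoissonRegressor. As a minimal sketch of the other two regressors, the snippet below fits GammaRegressor and TweedieRegressor on a strictly positive, right-skewed target. The synthetic data, the `alpha=0.1` penalty, and the variable names are assumptions made for illustration only.

```python
import numpy as np
from sklearn.linear_model import GammaRegressor, TweedieRegressor

rng = np.random.RandomState(0)
X = rng.randn(500, 3)

# Synthetic, strictly positive target whose mean depends on X[:, 0]
# through a log link (illustrative data, not from the release notes).
mu = np.exp(0.5 * X[:, 0])
y = rng.gamma(shape=2.0, scale=mu / 2.0)

gamma_reg = GammaRegressor(alpha=0.1).fit(X, y)

# power=2 with a log link selects the Gamma deviance in TweedieRegressor.
tweedie_reg = TweedieRegressor(power=2, link="log", alpha=0.1).fit(X, y)

print(gamma_reg.coef_)
print(tweedie_reg.coef_)
```

The two models should agree here, since the scikit-learn documentation describes GammaRegressor as equivalent to TweedieRegressor(power=2, link='log'). Other values of `power` select other distributions, such as `power=1` for Poisson.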

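Rich visual representation of estimators#

Estimators can now be visualized in notebooks by enabling the display='diagram' option. This is particularly useful for summarizing the structure of pipelines and other composite estimators, and the diagram is interactive so that details can be expanded. Click on the example image below to expand Pipeline elements. See Visualizing Composite Estimators for how to use this feature.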

from sklearn import set_config

from sklearn.pipeline import make_pipeline

from sklearn.preprocessing import OneHotEncoder, StandardScaler

from sklearn.impute import SimpleImputer

from sklearn.compose import make_column_transformer

from sklearn.linear_model import LogisticRegression

set_config(display="diagram")

num_proc = make_pipeline(SimpleImputer(strategy="median"), StandardScaler())

cat_proc = make_pipeline(

SimpleImputer(strategy="constant", fill_value="missing"),

OneHotEncoder(handle_unknown="ignore"),

)

preprocessor = make_column_transformer(

(num_proc, ("feat1", "feat3")), (cat_proc, ("feat0", "feat2"))

)

clf = make_pipeline(preprocessor, LogisticRegression())

clf
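Outside a notebook, the same diagram can be obtained as an HTML string. The sketch below assumes `sklearn.utils.estimator_html_repr` is available (it was introduced alongside the diagram display) and uses a small hypothetical pipeline rather than the one above.

```python
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.utils import estimator_html_repr

# A small illustrative pipeline (hypothetical, chosen for brevity).
pipe = make_pipeline(StandardScaler(), LogisticRegression())

# Build the HTML representation that a notebook would render.
html = estimator_html_repr(pipe)

print(len(html))
```

The returned string can be written to a `.html` file and opened in a browser, which may be convenient for reports or documentation.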

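Scalability and stability improvements to KMeans#

The KMeans estimator was entirely reworked, and it is now significantly faster and more stable. In addition, the Elkan algorithm is now compatible with sparse matrices. The estimator uses OpenMP-based parallelism instead of relying on joblib, so the n_jobs parameter no longer has any effect. For more details on how to control the number of threads, please refer to our Parallelism notes.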

import scipy

import numpy as np

from sklearn.model_selection import train_test_split

from sklearn.cluster import KMeans

from sklearn.datasets import make_blobs

from sklearn.metrics import completeness_score

rng = np.random.RandomState(0)

X, y = make_blobs(random_state=rng)

X = scipy.sparse.csr_matrix(X)

X_train, X_test, _, y_test = train_test_split(X, y, random_state=rng)

kmeans = KMeans(n_init="auto").fit(X_train)

print(completeness_score(kmeans.predict(X_test), y_test))

0.7462070982205808
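Since n_jobs no longer controls KMeans, one way to cap the number of threads is the `threadpoolctl` package, which scikit-learn depends on. The following is a minimal sketch under that assumption. The limit of 2 threads and the dataset are arbitrary choices for illustration.

```python
from threadpoolctl import threadpool_limits
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs

X, _ = make_blobs(n_samples=300, centers=3, random_state=0)

# Restrict OpenMP-backed code, which includes KMeans, to at most
# 2 threads for the duration of the with-block.
with threadpool_limits(limits=2, user_api="openmp"):
    kmeans = KMeans(n_clusters=3, n_init=10, random_state=0).fit(X)

print(kmeans.cluster_centers_.shape)
```

Setting the `OMP_NUM_THREADS` environment variable before starting Python is another common option. The context manager has the advantage of applying only to a specific block of code.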

Improvements to the histogram-based Gradient Boosting estimators#

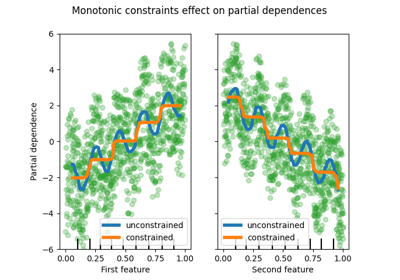

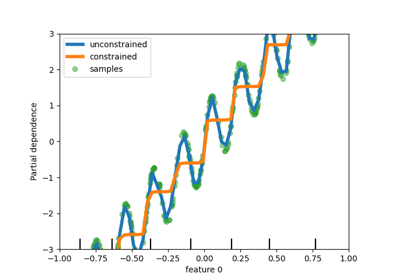

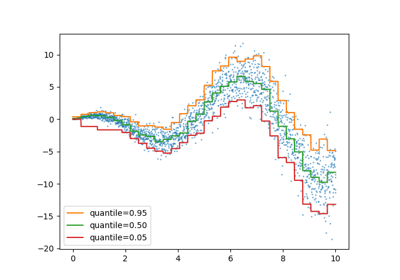

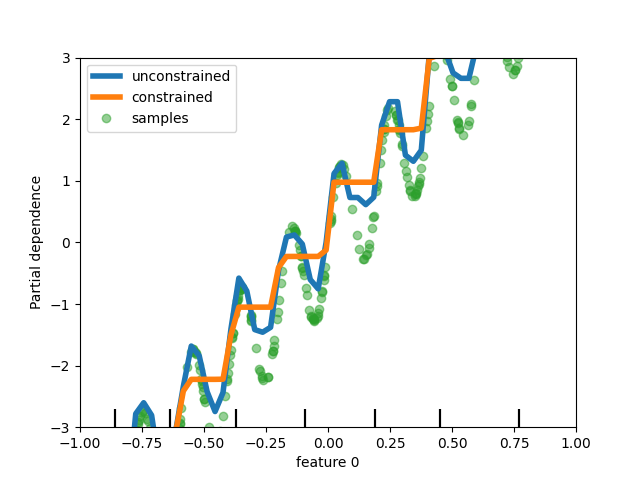

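Various improvements were made to HistGradientBoostingClassifier and HistGradientBoostingRegressor. On top of the Poisson loss mentioned above, these estimators now support sample weights. An automatic early-stopping criterion was also added: early stopping is enabled by default when the number of samples exceeds 10k. Finally, users can now define monotonic constraints to constrain the predictions based on the variations of specific features. In the following example, we construct a target that is generally positively correlated with the first feature, with some noise. Applying monotonic constraints allows the prediction to capture the global effect of the first feature, instead of fitting the noise. For a use case example, see Features in Histogram Gradient Boosting Trees.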

import numpy as np

from matplotlib import pyplot as plt

from sklearn.model_selection import train_test_split

#

# from sklearn.inspection import plot_partial_dependence

#

from sklearn.inspection import PartialDependenceDisplay

from sklearn.ensemble import HistGradientBoostingRegressor

n_samples = 500

rng = np.random.RandomState(0)

X = rng.randn(n_samples, 2)

noise = rng.normal(loc=0.0, scale=0.01, size=n_samples)

y = 5 * X[:, 0] + np.sin(10 * np.pi * X[:, 0]) - noise

gbdt_no_cst = HistGradientBoostingRegressor().fit(X, y)

gbdt_cst = HistGradientBoostingRegressor(monotonic_cst=[1, 0]).fit(X, y)

# plot_partial_dependence was removed in version 1.2. From 1.2 on, use PartialDependenceDisplay instead.

# disp = plot_partial_dependence(

disp = PartialDependenceDisplay.from_estimator(

gbdt_no_cst,

X,

features=[0],

feature_names=["feature 0"],

line_kw={"linewidth": 4, "label": "unconstrained", "color": "tab:blue"},

)

# plot_partial_dependence(

PartialDependenceDisplay.from_estimator(

gbdt_cst,

X,

features=[0],

line_kw={"linewidth": 4, "label": "constrained", "color": "tab:orange"},

ax=disp.axes_,

)

disp.axes_[0, 0].plot(

X[:, 0], y, "o", alpha=0.5, zorder=-1, label="samples", color="tab:green"

)

disp.axes_[0, 0].set_ylim(-3, 3)

disp.axes_[0, 0].set_xlim(-1, 1)

plt.legend()

plt.show()

Sample-weight support for Lasso and ElasticNet#

The two linear regressors Lasso and ElasticNet now support sample weights.

from sklearn.model_selection import train_test_split

from sklearn.datasets import make_regression

from sklearn.linear_model import Lasso

import numpy as np

n_samples, n_features = 1000, 20

rng = np.random.RandomState(0)

X, y = make_regression(n_samples, n_features, random_state=rng)

sample_weight = rng.rand(n_samples)

X_train, X_test, y_train, y_test, sw_train, sw_test = train_test_split(

X, y, sample_weight, random_state=rng

)

reg = Lasso()

reg.fit(X_train, y_train, sample_weight=sw_train)

print(reg.score(X_test, y_test, sw_test))

0.999791942438998
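The example above uses Lasso. A similar sketch for ElasticNet is shown below, this time using zero weights to switch off deliberately corrupted samples. The corruption scheme, the `alpha=0.1` value, and the variable names are assumptions made for illustration.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import ElasticNet

X, y = make_regression(n_samples=200, n_features=5, noise=1.0, random_state=0)

# Corrupt the first 20 targets, then give those samples zero weight.
y_corrupt = y.copy()
y_corrupt[:20] += 1000.0
sample_weight = np.ones(200)
sample_weight[:20] = 0.0

unweighted = ElasticNet(alpha=0.1).fit(X, y_corrupt)
weighted = ElasticNet(alpha=0.1).fit(X, y_corrupt, sample_weight=sample_weight)

# Score both models on the clean samples only.
print(unweighted.score(X[20:], y[20:]))
print(weighted.score(X[20:], y[20:]))
```

The weighted model should score noticeably higher on the clean samples, since the corrupted targets no longer contribute to its loss.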
