
Illustration of Gaussian process classification (GPC) on the XOR dataset

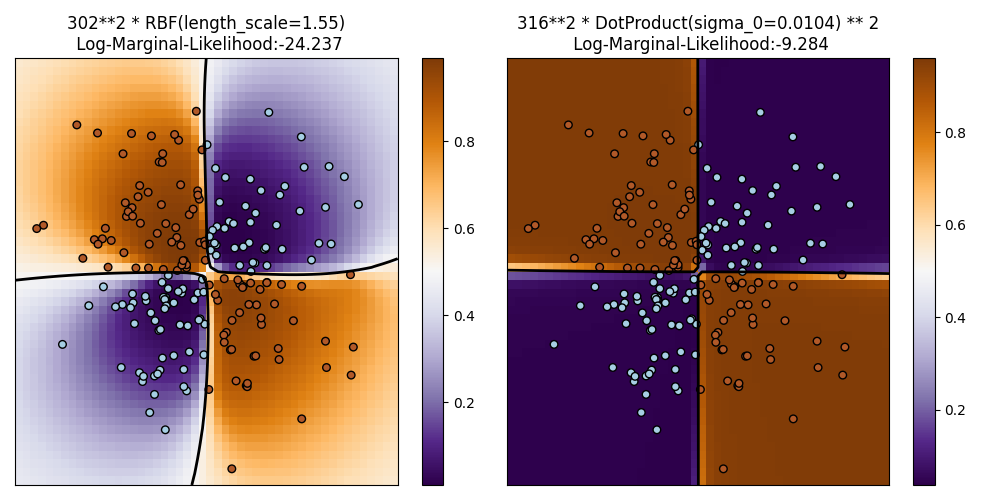

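This example illustrates GPC on XOR data. It compares a stationary, isotropic kernel (RBF) with a non-stationary kernel (DotProduct, squared here). On this particular dataset, the DotProduct kernel obtains considerably better results because the class boundaries are linear and coincide with the coordinate axes. Squaring DotProduct yields a degree-2 polynomial kernel whose implicit features include the product x1 * x2, and the XOR label is true exactly when x1 * x2 < 0. In general, however, stationary kernels often obtain better results.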
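The stationarity contrast and the XOR argument can be checked numerically. The sketch below makes three checks:

1. An RBF kernel value is unchanged when both inputs are shifted by the same vector, while a DotProduct value is not.
2. `DotProduct(sigma_0=1.0) ** 2` equals the degree-2 polynomial (1 + a·b)^2.
3. On data built like the example's, the XOR label is exactly the sign test x1 * x2 < 0.

The leading `1.0 *` constant factor from the example is omitted for simplicity. The shift vector and the small random matrices `A` and `B` are illustrative choices.

```python
import numpy as np
from sklearn.gaussian_process.kernels import RBF, DotProduct

rng = np.random.RandomState(1)
A = rng.randn(5, 2)  # illustrative inputs
B = rng.randn(4, 2)
shift = np.array([2.0, -1.0])  # arbitrary common translation

rbf = RBF(length_scale=1.15)
dot2 = DotProduct(sigma_0=1.0) ** 2  # Exponentiation kernel: (sigma_0**2 + a.b)**2

# Stationary: RBF depends only on a - b, so a common shift leaves it unchanged.
rbf_invariant = np.allclose(rbf(A, B), rbf(A + shift, B + shift))
# Non-stationary: DotProduct depends on absolute positions, so the shift changes it.
dot_invariant = np.allclose(dot2(A, B), dot2(A + shift, B + shift))

# With sigma_0 = 1 the squared DotProduct kernel is (1 + a.b)**2.
matches = np.allclose(dot2(A, B), (1.0 + A @ B.T) ** 2)

# Same construction as the example: XOR of the coordinate signs.
X = np.random.RandomState(0).randn(200, 2)
y = np.logical_xor(X[:, 0] > 0, X[:, 1] > 0)
# The XOR label is true exactly when the two coordinates have opposite signs.
xor_from_product = np.array_equal(y, X[:, 0] * X[:, 1] < 0)

print(rbf_invariant, dot_invariant, matches, xor_from_product)  # → True False True True
```

Because the sign of x1 * x2 is a single feature in the degree-2 polynomial feature space, a linear decision rule in that space can represent the XOR boundary.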

/app/scikit-learn-main-origin/sklearn/gaussian_process/kernels.py:431: ConvergenceWarning: The optimal value found for dimension 0 of parameter k1__constant_value is close to the specified upper bound 100000.0. Increasing the bound and calling fit again may find a better value.
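The ConvergenceWarning reports that the constant factor `k1__constant_value` ended up near its default upper bound of 1e5. In the example, `1.0 * kernel` wraps the kernel in a `ConstantKernel` whose default `constant_value_bounds` is `(1e-5, 1e5)`.

The sketch below follows the warning's suggestion for the DotProduct kernel. It writes the constant explicitly with a wider bound and refits. The new upper bound of 1e7 is an arbitrary illustrative choice. Whether the warning disappears, and whether the fit improves, is not claimed here.

```python
import numpy as np
from sklearn.gaussian_process import GaussianProcessClassifier
from sklearn.gaussian_process.kernels import ConstantKernel, DotProduct

# Same data as the example.
rng = np.random.RandomState(0)
X = rng.randn(200, 2)
Y = np.logical_xor(X[:, 0] > 0, X[:, 1] > 0)

# Same structure as 1.0 * DotProduct(sigma_0=1.0) ** 2, but with an explicit
# ConstantKernel whose upper bound is raised (1e7 is an illustrative choice).
kernel = ConstantKernel(1.0, constant_value_bounds=(1e-5, 1e7)) * DotProduct(
    sigma_0=1.0
) ** 2

clf = GaussianProcessClassifier(kernel=kernel).fit(X, Y)
print(clf.kernel_)  # fitted hyperparameters; k1 is the constant factor
```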

# Authors: The scikit-learn developers
# SPDX-License-Identifier: BSD-3-Clause

import matplotlib.pyplot as plt
import numpy as np

from sklearn.gaussian_process import GaussianProcessClassifier
from sklearn.gaussian_process.kernels import RBF, DotProduct

xx, yy = np.meshgrid(np.linspace(-3, 3, 50), np.linspace(-3, 3, 50))
rng = np.random.RandomState(0)
X = rng.randn(200, 2)
Y = np.logical_xor(X[:, 0] > 0, X[:, 1] > 0)

# Fit one classifier per kernel and plot its predictions side by side
plt.figure(figsize=(10, 5))
kernels = [1.0 * RBF(length_scale=1.15), 1.0 * DotProduct(sigma_0=1.0) ** 2]
for i, kernel in enumerate(kernels):
    clf = GaussianProcessClassifier(kernel=kernel, warm_start=True).fit(X, Y)

    # Plot the predicted probability of the positive class at each grid point
    Z = clf.predict_proba(np.vstack((xx.ravel(), yy.ravel())).T)[:, 1]
    Z = Z.reshape(xx.shape)

    plt.subplot(1, 2, i + 1)
    image = plt.imshow(
        Z,
        interpolation="nearest",
        extent=(xx.min(), xx.max(), yy.min(), yy.max()),
        aspect="auto",
        origin="lower",
        cmap=plt.cm.PuOr_r,
    )
    # Black line: the 0.5-probability decision boundary
    contours = plt.contour(xx, yy, Z, levels=[0.5], linewidths=2, colors=["k"])
    plt.scatter(X[:, 0], X[:, 1], s=30, c=Y, cmap=plt.cm.Paired, edgecolors=(0, 0, 0))
    plt.xticks(())
    plt.yticks(())
    plt.axis([-3, 3, -3, 3])
    plt.colorbar(image)
    # Title: fitted kernel and its log-marginal likelihood
    plt.title(
        "%s\n Log-Marginal-Likelihood:%.3f"
        % (clf.kernel_, clf.log_marginal_likelihood(clf.kernel_.theta)),
        fontsize=12,
    )

plt.tight_layout()
plt.show()
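The plot titles compare the two kernels by log-marginal likelihood on the training data. A complementary, hedged check is held-out accuracy. The sketch below regenerates the example's data and splits it 70/30. It then fits each kernel on the training part and scores it on the rest. The split ratio and random seeds are illustrative assumptions, and no particular accuracy is claimed here.

```python
import numpy as np
from sklearn.gaussian_process import GaussianProcessClassifier
from sklearn.gaussian_process.kernels import RBF, DotProduct
from sklearn.model_selection import train_test_split

# Same data as the example.
rng = np.random.RandomState(0)
X = rng.randn(200, 2)
Y = np.logical_xor(X[:, 0] > 0, X[:, 1] > 0)

# Illustrative 70/30 split, stratified so both classes appear in each part.
X_train, X_test, y_train, y_test = train_test_split(
    X, Y, test_size=0.3, random_state=0, stratify=Y
)

kernels = {
    "RBF": 1.0 * RBF(length_scale=1.15),
    "DotProduct**2": 1.0 * DotProduct(sigma_0=1.0) ** 2,
}
scores = {}
for name, kernel in kernels.items():
    # GaussianProcessClassifier clones the kernel, so each fit starts fresh.
    clf = GaussianProcessClassifier(kernel=kernel).fit(X_train, y_train)
    scores[name] = clf.score(X_test, y_test)  # mean accuracy on held-out points
    print(f"{name}: test accuracy = {scores[name]:.3f}")
```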
