
Selecting dimensionality reduction with Pipeline and GridSearchCV

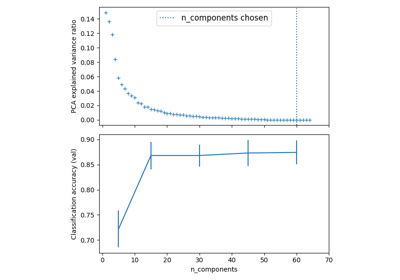

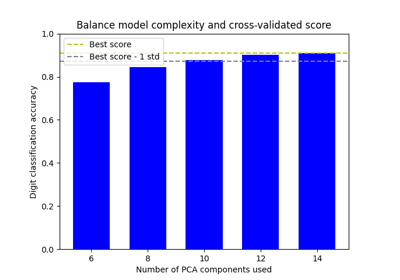

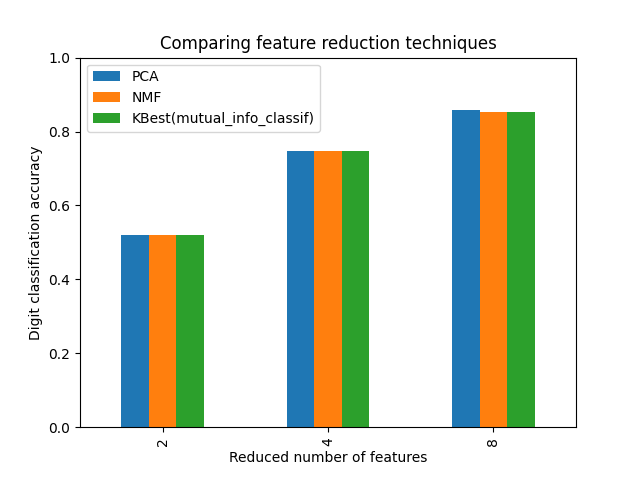

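This example constructs a pipeline that performs dimensionality reduction followed by prediction with a linear support vector classifier (LinearSVC). It demonstrates how GridSearchCV and Pipeline can be used to optimize over different classes of estimators in a single cross-validation run: during the grid search, the unsupervised PCA and NMF dimensionality reductions are compared with univariate feature selection.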
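The estimator-swapping mechanism can be seen in isolation. The sketch below is an illustration rather than part of the original example: it uses the small iris dataset instead of digits to stay fast, and calls `Pipeline.set_params` to replace the whole `reduce_dim` step. This is the same kind of update GridSearchCV makes when a step name such as `reduce_dim` appears as a key of `param_grid`. The `n_features_in_` attribute of the fitted LinearSVC shows how many features actually reached the classifier.

```python
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA
from sklearn.feature_selection import SelectKBest
from sklearn.pipeline import Pipeline
from sklearn.svm import LinearSVC

X, y = load_iris(return_X_y=True)  # 4 input features
pipe = Pipeline(
    [
        ("reduce_dim", "passthrough"),  # placeholder: this step does nothing
        ("classify", LinearSVC(dual=False, max_iter=10000)),
    ]
)

n_features_seen = {}
for name, reducer in [
    ("passthrough", "passthrough"),
    ("PCA", PCA(n_components=2)),
    ("SelectKBest", SelectKBest(k=3)),
]:
    # Replace the whole step with a different estimator class, then refit
    pipe.set_params(reduce_dim=reducer).fit(X, y)
    # Number of features that actually reached the classifier
    n_features_seen[name] = pipe.named_steps["classify"].n_features_in_

print(n_features_seen)
```

With `"passthrough"` all four features reach the classifier; with PCA or SelectKBest only the reduced set does.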

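Additionally, Pipeline can be instantiated with the memory argument to cache the transformers within the pipeline, which avoids fitting the same transformers over and over again.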

Note that enabling caching through memory only pays off when fitting a transformer is costly.
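A minimal sketch of this caching behavior, assuming a hypothetical `CountingPCA` helper (a PCA subclass written only for this illustration) that counts how often `fit_transform` actually runs. The memory argument of Pipeline accepts a path to a cache directory; a temporary directory from `tempfile` is used here.

```python
import tempfile
from shutil import rmtree

from sklearn.datasets import load_digits
from sklearn.decomposition import PCA
from sklearn.pipeline import Pipeline
from sklearn.svm import LinearSVC


class CountingPCA(PCA):
    """Hypothetical helper: a PCA that counts how often it is really fitted."""

    n_fits = 0  # class-level counter, incremented on every actual fit

    def fit_transform(self, X, y=None):
        CountingPCA.n_fits += 1
        return super().fit_transform(X, y)


X, y = load_digits(return_X_y=True)
cachedir = tempfile.mkdtemp()  # temporary cache location
pipe = Pipeline(
    [
        ("reduce_dim", CountingPCA(n_components=8)),
        ("classify", LinearSVC(dual=False, max_iter=10000)),
    ],
    memory=cachedir,
)

pipe.fit(X, y)  # PCA is fitted and its result stored in the cache
pipe.set_params(classify__C=10).fit(X, y)  # only C changed: PCA comes from the cache
fits_after_c_change = CountingPCA.n_fits

pipe.set_params(reduce_dim__n_components=16).fit(X, y)  # PCA changed: refitted
fits_after_pca_change = CountingPCA.n_fits

rmtree(cachedir)  # delete the temporary cache
print(fits_after_c_change, fits_after_pca_change)
```

Changing only a downstream parameter such as `classify__C` leaves the cached PCA valid, whereas changing a parameter of the cached step itself (`n_components`) forces a new fit.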

```python
# Authors: Robert McGibbon
#          Joel Nothman
#          Guillaume Lemaitre
```

Illustration of Pipeline and GridSearchCV

```python
import matplotlib.pyplot as plt
import numpy as np

from sklearn.datasets import load_digits
from sklearn.decomposition import NMF, PCA
from sklearn.feature_selection import SelectKBest, mutual_info_classif
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import MinMaxScaler
from sklearn.svm import LinearSVC

X, y = load_digits(return_X_y=True)

pipe = Pipeline(
    [
        ("scaling", MinMaxScaler()),
        # the reduce_dim stage is populated by the param_grid
        ("reduce_dim", "passthrough"),
        ("classify", LinearSVC(dual=False, max_iter=10000)),
    ]
)

N_FEATURES_OPTIONS = [2, 4, 8]
C_OPTIONS = [1, 10, 100, 1000]
param_grid = [
    {
        "reduce_dim": [PCA(iterated_power=7), NMF(max_iter=1_000)],
        "reduce_dim__n_components": N_FEATURES_OPTIONS,
        "classify__C": C_OPTIONS,
    },
    {
        "reduce_dim": [SelectKBest(mutual_info_classif)],
        "reduce_dim__k": N_FEATURES_OPTIONS,
        "classify__C": C_OPTIONS,
    },
]
reducer_labels = ["PCA", "NMF", "KBest(mutual_info_classif)"]

grid = GridSearchCV(pipe, n_jobs=1, param_grid=param_grid)
grid.fit(X, y)
```

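```python
import pandas as pd

mean_scores = np.array(grid.cv_results_["mean_test_score"])
# Candidates are enumerated dict by dict of param_grid. Within each dict the keys
# are taken in alphabetical order ("classify__C" first), so C varies slowest.
# Reshape each sub-grid separately to (C, reducer, n_features), then stack reducers.
n_c, n_f = len(C_OPTIONS), len(N_FEATURES_OPTIONS)
n_first = n_c * len(param_grid[0]["reduce_dim"]) * n_f
first = mean_scores[:n_first].reshape(n_c, -1, n_f)  # PCA and NMF
second = mean_scores[n_first:].reshape(n_c, -1, n_f)  # SelectKBest
mean_scores = np.concatenate([first, second], axis=1)
# select score for best C
mean_scores = mean_scores.max(axis=0)
# create a dataframe to ease plotting
mean_scores = pd.DataFrame(
    mean_scores.T, index=N_FEATURES_OPTIONS, columns=reducer_labels
)

ax = mean_scores.plot.bar()
ax.set_title("Comparing feature reduction techniques")
ax.set_xlabel("Reduced number of features")
ax.set_ylabel("Digit classification accuracy")
ax.set_ylim((0, 1))
ax.legend(loc="upper left")

plt.show()
```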
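Turning `mean_test_score` into a (reducer, number of features) table relies on the order in which GridSearchCV enumerates candidates, which follows `sklearn.model_selection.ParameterGrid`. The sketch below uses a reduced toy grid in which placeholder strings stand in for the real estimators, an assumption made only so the order is easy to read.

```python
from sklearn.model_selection import ParameterGrid

# Placeholder strings stand in for the real estimator objects
toy_grid = [
    {
        "reduce_dim": ["PCA", "NMF"],
        "reduce_dim__n_components": [2, 4],
        "classify__C": [1, 10],
    },
    {
        "reduce_dim": ["KBest"],
        "reduce_dim__k": [2, 4],
        "classify__C": [1, 10],
    },
]

candidates = list(ParameterGrid(toy_grid))
for params in candidates:
    print(params)
```

Within each dict the keys are taken in sorted order and the last one varies fastest, so `classify__C` varies slowest; the candidates of the second dict only start after all candidates of the first dict.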

Caching transformers within a Pipeline

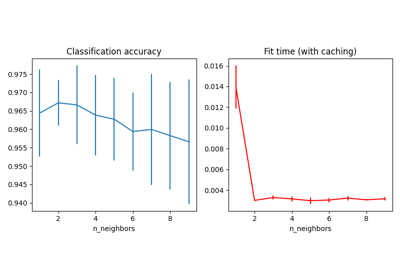

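It is sometimes worthwhile to store the state of a specific transformer, since it could be used again. Using a pipeline in GridSearchCV triggers such situations: the same transformer is refitted on identical training data for every value of the downstream parameters. Therefore, we use the memory argument to enable caching.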

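Note that this example is only an illustration: in this specific case, fitting PCA is not necessarily slower than loading the cache. Hence, use the memory constructor parameter when fitting a transformer is costly.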

```python
from shutil import rmtree

from joblib import Memory

# Create a temporary folder to store the transformers of the pipeline
location = "cachedir"
memory = Memory(location=location, verbose=10)
cached_pipe = Pipeline(
    [("reduce_dim", PCA()), ("classify", LinearSVC(dual=False, max_iter=10000))],
    memory=memory,
)

# This time, a cached pipeline will be used within the grid search

# Delete the temporary cache before exiting
memory.clear(warn=False)
rmtree(location)
```

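The PCA fit is only computed when the first configuration of the C parameter of the LinearSVC classifier is evaluated (for a given number of components and CV split). The other configurations of C trigger loading of the cached PCA estimator data, which saves processing time. Therefore, caching the pipeline with memory is highly beneficial when fitting a transformer is costly.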
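The caching code above stops at the comment announcing the grid search. A sketch of that step could look as follows, assuming a `param_grid` over `reduce_dim__n_components` and `classify__C` (with a smaller C range than above to keep it fast) and again a hypothetical `CountingPCA` helper that counts actual fits. `n_jobs=1` keeps every fit in one process so that the class-level counter sees all of them.

```python
import tempfile
from shutil import rmtree

from sklearn.datasets import load_digits
from sklearn.decomposition import PCA
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline
from sklearn.svm import LinearSVC


class CountingPCA(PCA):
    """Hypothetical helper: a PCA that counts how often it is really fitted."""

    n_fits = 0  # class-level counter, incremented on every actual fit

    def fit_transform(self, X, y=None):
        CountingPCA.n_fits += 1
        return super().fit_transform(X, y)


X, y = load_digits(return_X_y=True)
N_FEATURES_OPTIONS = [2, 4, 8]
C_OPTIONS = [1, 10, 100]
N_SPLITS = 5

cachedir = tempfile.mkdtemp()  # temporary cache location for the transformers
cached_pipe = Pipeline(
    [
        ("reduce_dim", CountingPCA()),
        ("classify", LinearSVC(dual=False, max_iter=10000)),
    ],
    memory=cachedir,
)
grid = GridSearchCV(
    cached_pipe,
    param_grid={
        "reduce_dim__n_components": N_FEATURES_OPTIONS,
        "classify__C": C_OPTIONS,
    },
    cv=N_SPLITS,
    n_jobs=1,  # single process, so the class-level counter sees every fit
)
grid.fit(X, y)
rmtree(cachedir)  # delete the temporary cache

# Without a cache: one PCA fit per (candidate, split), plus the final refit
uncached_fits = len(N_FEATURES_OPTIONS) * len(C_OPTIONS) * N_SPLITS + 1
print(f"PCA fits with caching: {CountingPCA.n_fits}, without: {uncached_fits}")
```

With caching, PCA is fitted roughly once per number of components and CV split (plus the final refit on the whole dataset) instead of once per candidate and split.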

Total running time of the script: (0 minutes 40.968 seconds)
