Note

Go to the end to download the full example code. or to run this example in your browser via Binder

使用树集成进行特征转换#

将您的特征转换为更高维度的稀疏空间。然后在这些特征上训练一个线性模型。

首先在训练集上拟合一个树集成(完全随机树、随机森林或梯度提升树)。然后,集成中每棵树的每个叶子在一个新的特征空间中被分配一个固定的任意特征索引。这些叶子索引随后以独热编码的方式进行编码。

每个样本通过集成中每棵树的决策,并最终在每棵树中落入一个叶子。通过将这些叶子的特征值设置为1,其他特征值设置为0来对样本进行编码。

由此产生的转换器便学习到了数据的监督、稀疏、高维度的类别嵌入。

# 作者:scikit-learn 开发者

# SPDX-License-Identifier: BSD-3-Clause

首先,我们将创建一个大型数据集并将其拆分为三个集合:

一个用于训练集成方法的数据集,这些方法随后用作特征工程转换器;

一个用于训练线性模型的数据集;

一个用于测试线性模型的数据集。

重要的是以避免因数据泄露而导致过拟合的方式划分数据。

from sklearn.datasets import make_classification

from sklearn.model_selection import train_test_split

X, y = make_classification(n_samples=80_000, random_state=10)

X_full_train, X_test, y_full_train, y_test = train_test_split(

X, y, test_size=0.5, random_state=10

)

X_train_ensemble, X_train_linear, y_train_ensemble, y_train_linear = train_test_split(

X_full_train, y_full_train, test_size=0.5, random_state=10

)

对于每个集成方法,我们将使用10个估计器和最大深度为3级。

n_estimators = 10

max_depth = 3

首先,我们将在分离的训练集上训练随机森林和梯度提升模型。

from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier

random_forest = RandomForestClassifier(

n_estimators=n_estimators, max_depth=max_depth, random_state=10

)

random_forest.fit(X_train_ensemble, y_train_ensemble)

gradient_boosting = GradientBoostingClassifier(

n_estimators=n_estimators, max_depth=max_depth, random_state=10

)

_ = gradient_boosting.fit(X_train_ensemble, y_train_ensemble)

请注意,HistGradientBoostingClassifier 比 GradientBoostingClassifier 快得多,尤其是在处理中等规模的数据集( n_samples >= 10_000 )时,但这不适用于当前的示例。

RandomTreesEmbedding 是一种无监督方法,因此不需要单独进行训练。

from sklearn.ensemble import RandomTreesEmbedding

random_tree_embedding = RandomTreesEmbedding(

n_estimators=n_estimators, max_depth=max_depth, random_state=0

)

现在,我们将创建三个管道,这些管道将使用上述嵌入作为预处理阶段。

随机树嵌入可以直接与逻辑回归进行流水线处理,因为它是一个标准的scikit-learn转换器。

from sklearn.linear_model import LogisticRegression

from sklearn.pipeline import make_pipeline

rt_model = make_pipeline(random_tree_embedding, LogisticRegression(max_iter=1000))

rt_model.fit(X_train_linear, y_train_linear)

然后,我们可以将随机森林或梯度提升与逻辑回归进行流水线处理。然而,特征转换将通过调用方法 apply 来实现。scikit-learn 中的流水线期望调用 transform 。因此,我们将对 apply 的调用包装在 FunctionTransformer 中。

from sklearn.preprocessing import FunctionTransformer, OneHotEncoder

def rf_apply(X, model):

return model.apply(X)

rf_leaves_yielder = FunctionTransformer(rf_apply, kw_args={"model": random_forest})

rf_model = make_pipeline(

rf_leaves_yielder,

OneHotEncoder(handle_unknown="ignore"),

LogisticRegression(max_iter=1000),

)

rf_model.fit(X_train_linear, y_train_linear)

def gbdt_apply(X, model):

return model.apply(X)[:, :, 0]

gbdt_leaves_yielder = FunctionTransformer(

gbdt_apply, kw_args={"model": gradient_boosting}

)

gbdt_model = make_pipeline(

gbdt_leaves_yielder,

OneHotEncoder(handle_unknown="ignore"),

LogisticRegression(max_iter=1000),

)

gbdt_model.fit(X_train_linear, y_train_linear)

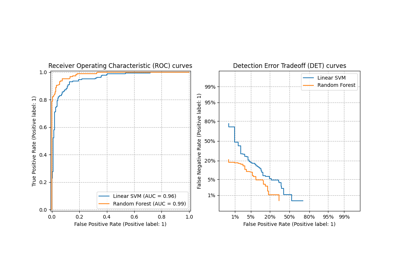

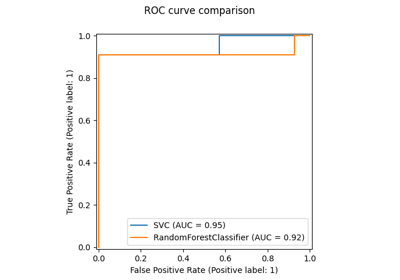

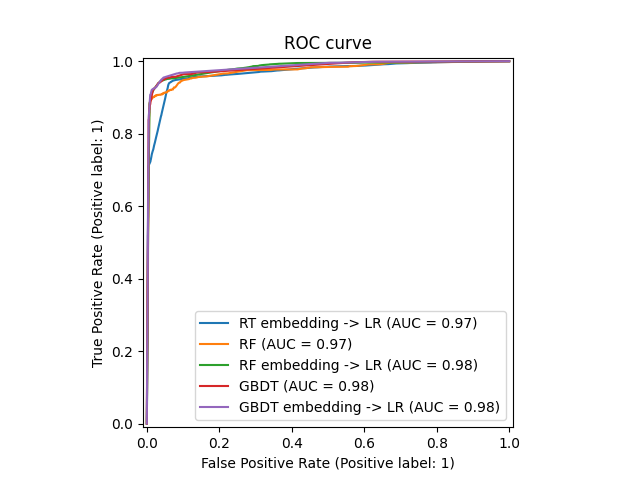

我们终于可以展示所有模型的不同ROC曲线了。

import matplotlib.pyplot as plt

from sklearn.metrics import RocCurveDisplay

_, ax = plt.subplots()

models = [

("RT embedding -> LR", rt_model),

("RF", random_forest),

("RF embedding -> LR", rf_model),

("GBDT", gradient_boosting),

("GBDT embedding -> LR", gbdt_model),

]

model_displays = {}

for name, pipeline in models:

model_displays[name] = RocCurveDisplay.from_estimator(

pipeline, X_test, y_test, ax=ax, name=name

)

_ = ax.set_title("ROC curve")

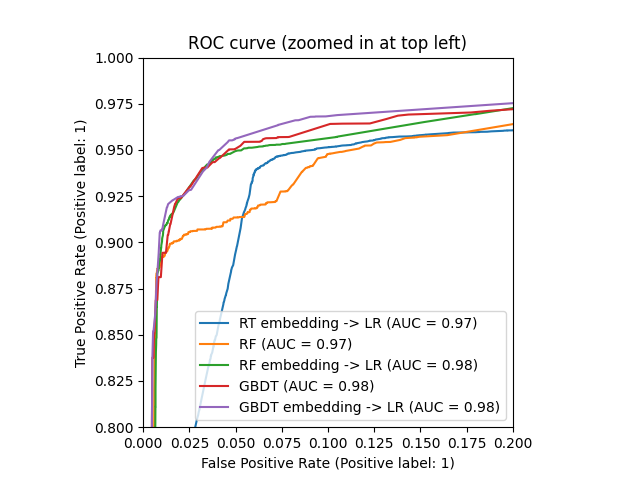

_, ax = plt.subplots()

for name, pipeline in models:

model_displays[name].plot(ax=ax)

ax.set_xlim(0, 0.2)

ax.set_ylim(0.8, 1)

_ = ax.set_title("ROC curve (zoomed in at top left)")

Total running time of the script: (0 minutes 4.538 seconds)

Related examples